|

by Rich Brueckner on (#49APY)

In this video from the 2019 Stanford HPC Conference, Usha Upadhyayula & Tom Krueger from Intel present: Introduction to Intel Optane Data Center Persistent Memory. For decades, developers had to balance data in memory for performance with data in storage for persistence. The emergence of data-intensive applications in various market segments is stretching the existing […] The post Video: Introduction to Intel Optane Data Center Persistent Memory appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-03 03:30 |

|

by Rich Brueckner on (#49942)

DK Panda from Ohio State University gave this talk at the 2019 Stanford HPC Conference. "This talk will provide an overview of challenges in designing convergent HPC and BigData software stacks on modern HPC clusters. An overview of RDMA-based designs for Hadoop (HDFS, MapReduce, RPC and HBase), Spark, Memcached, Swift, and Kafka using native RDMA support for InfiniBand and RoCE will be presented. Enhanced designs for these components to exploit HPC scheduler (SLURM), parallel file systems (Lustre) and NVM-based in-memory technology will also be presented. Benefits of these designs on various cluster configurations using the publicly available RDMA-enabled packages from the OSU HiBD project will be shown." The post Designing Convergent HPC and Big Data Software Stacks: An Overview of the HiBD Project appeared first on insideHPC.

|

|

by Rich Brueckner on (#49943)

The University of Chicago Center for Research Informatics is seeking an HPC Systems Administrator in our Job of the Week. "This position will work with the Lead HPC Systems Administrator to build and maintain the BSD High Performance Computing environment, assist life-sciences researchers to utilize the HPC resources, work with stakeholders and research partners to successfully troubleshoot computational applications, handle customer requests, and respond to suggestions for improvements and enhancements from end-users." The post Job of the Week: HPC Systems Administrator at the University of Chicago Center for Research Informatics appeared first on insideHPC.

|

|

by staff on (#497ER)

The European High Performance Computing Joint Undertaking (EuroHPC JU) has launched its first calls for expressions of interest, to select the sites that will host the Joint Undertaking’s first supercomputers (petascale and precursor to exascale machines) in 2020. "Deciding where Europe will host its most powerful petascale and precursor to exascale machines is only the first step in this great European initiative on high performance computing," said Mariya Gabriel, Commissioner for Digital Economy and Society. "Regardless of where users are located in Europe, these supercomputers will be used in more than 800 scientific and industrial application fields for the benefit of European citizens." The post EuroHPC Takes First Steps Towards Exascale appeared first on insideHPC.

|

|

by staff on (#494D6)

The San Diego Supercomputer Center (SDSC) and Sylabs have teamed up to bring the community its first-ever meeting of the Singularity User Group (SUG). Singularity has become what it is today through the engagement of users, developers, and providers that have collectively developed a sense of community around the software and its ecosystem. Read on as Ian Lumb, Technical Writer at Sylabs, shares the origins of the new annual Singularity User Group Meeting. The post The Inaugural Singularity User Group Meeting: Registration Open Now appeared first on insideHPC.

|

|

by staff on (#497AC)

In this podcast, the Radio Free HPC team looks at how Lawrence Livermore National Lab is working to simulate and help modernize the electric grid. They discuss how the ‘new grid’ will need to be two-way, both delivering and accepting electricity. The new grid will also have to communicate with smart homes and other buildings in order to predict demand and adjust real-time pricing. The post Podcast: Modernizing the Electric Grid with HPC appeared first on insideHPC.

|

|

by staff on (#497AD)

Students at Virginia Tech are using HPC for their creative work to build robust project portfolios. "The School of Visual Arts in Virginia Tech’s College of Architecture and Urban Studies offers an advanced rendering class for students in design-based programs, including architecture, industrial design, and interior design. Students in the school’s graduate and undergraduate creative technologies programs also take the course. Rendering involves converting 3D wireframe models into still or animated 2D images that can be displayed on a screen. It is in this class where students hone their skills learning advanced techniques to create complex animations." The post HPC Boosts Art and Design at Virginia Tech appeared first on insideHPC.

|

|

by Rich Brueckner on (#4975X)

Exascale computing is only a few years away. Today the Exascale Computing Project (ECP) put out the second release of their Extreme-Scale Scientific Software Stack. The E4S Release 0.2 includes a subset of ECP ST software products, and demonstrates the target approach for future delivery of the full ECP ST software stack. Also available are […] The post Exascale Computing Project updates Extreme-Scale Scientific Software Stack appeared first on insideHPC.

|

|

by Rich Brueckner on (#4975Y)

In this video from the 2019 Stanford HPC Conference, Naoki Shibata from XTREME-D presents: XTREME-Stargate: The New Era of HPC Cloud Platforms. "XTREME-D is an award-winning, funded Japanese startup whose mission is to make HPC cloud computing access easy, fast, efficient, and economical for every customer. The company recently introduced XTREME-Stargate, which was developed as a cloud-based bare-metal appliance specifically for high-performance computations, optimized for AI data analysis and conventional supercomputer usage." The post XTREME-Stargate: The New Era of HPC Cloud Platforms appeared first on insideHPC.

|

|

by staff on (#494PW)

Over at the SC19 Blog, Charity Plata continues the HPC is Now series of interviews with Enrico Rinaldi, a physicist and special postdoctoral fellow with the Riken BNL Research Center. This month, Rinaldi discusses why HPC is the right tool for physics and shares the best formula for garnering a Gordon Bell Award nomination. "Sierra and Summit are incredible machines, and we were lucky to be among the first teams to use them to produce new scientific results. The impact on my lattice QCD research was tremendous, as demonstrated by the Gordon Bell paper submission." The post Interview: Why HPC is the Right Tool for Physics appeared first on insideHPC.

|

|

by staff on (#494D4)

In this special guest feature from Scientific Computing World, Robert Roe reports on the Gordon Bell Prize finalists for 2018. "The finalists’ research ranges from AI to mixed precision workloads, with some taking advantage of the Tensor Cores available in the latest generation of Nvidia GPUs. This highlights the impact of AI and GPU technologies, which are opening up not only new applications to HPC users but also the opportunity to accelerate mixed precision workloads on large scale HPC systems." The post Gordon Bell Prize Highlights the Impact of Ai appeared first on insideHPC.

|

|

by staff on (#494D8)

Atos will soon deploy a Bull supercomputer at the Centro Nacional de Análisis Genómico (CNAG-CRG) in Barcelona for large-scale DNA sequencing and analysis. To support the vast process and calculation demands needed for this analysis, CNAG-CRG worked with Atos to build this custom-made analytics platform, which helps drive new insights ten times faster than its previous HPC system. "Atos helped us to set up a robust platform to conduct in-depth high-performance data analytics on genome sequences, which is the perfect complement to our outstanding sequencing platform," stated Ivo Gut, CNAG-CRG Director. The post Custom Atos Supercomputer to Speed Genome Analysis at CNAG-CRG in Barcelona appeared first on insideHPC.

|

|

by staff on (#492M3)

Today GigaIO announced that the company's new FabreX product was successfully used in the Student Cluster Competition at SC18. Students from the University of Warsaw incorporated GigaIO’s alpha product into their self-designed supercomputing cluster, marking the team’s fourth cluster competition event. "GigaIO offers cutting-edge high-performance computing technology, so it was a privilege to be among the first group to experiment with their newest product, FabreX." The post GigaIO Technology Boosts Performance at the SC18 Student Cluster Competition appeared first on insideHPC.

|

|

by staff on (#492M5)

Today WekaIO announced the results of the independent SPEC SFS 2014 benchmark testing of its flagship WekaIO Matrix product. "Having established itself in the number one position for the SPEC SFS 2014 software build in January 2019, WekaIO has now posted winning results for all remaining benchmarks in the SPEC test suite. In addition to unbeatable performance and scalability, WekaIO has demonstrated extraordinarily low latencies across the benchmark suite, reaffirming Matrix is the world’s fastest parallel file system." The post WekaIO Matrix Cluster Excels on SPEC SFS 2014 Benchmark appeared first on insideHPC.

|

|

by Richard Friedman on (#491YX)

OpenVINO is a single toolkit, optimized for Intel hardware, that the data scientist and AI software developer can use for quickly developing high-performance applications that employ neural network inference and deep learning to emulate human vision over various platforms. "This toolkit supports heterogeneous execution across CPUs and computer vision accelerators including GPUs, Intel® Movidius™ hardware, and FPGAs." The post Putting Computer Vision to Work with OpenVINO appeared first on insideHPC.

|

|

by Rich Brueckner on (#491YZ)

Koen Bertels from Delft University of Technology gave this talk at HiPEAC 2019. "In my talk, I will introduce what quantum computers are but also how they can be used as a quantum accelerator. I will discuss why a quantum computer can be more powerful than any classical computer and what the components are of its system architecture. In this context, I will talk about our current research topics on quantum computing, what the main challenges are and what is available to our community." The post Quantum Computing: From Qubits to Quantum Accelerators appeared first on insideHPC.

|

|

by staff on (#48ZQR)

Today Lenovo announced TruScale Infrastructure Services, a subscription-based offering that allows customers to use and pay for data center hardware and services – on-premise or at a customer-preferred location – without having to purchase the equipment. "Lenovo’s TruScale as-a-Service offering is truly revolutionary, changing how IT departments procure and refresh their data center infrastructure. With our subscription-based model, customers pay for what they use, eliminating upfront capital purchase risk," said Laura Laltrello, Vice President and General Manager of Services at Lenovo Data Center Group. “Our offering can be applied to any configuration that meets the customer’s needs – whether storage-rich, server-heavy, hyperconverged or high-performance compute – and can be scaled as business dictates.” The post Lenovo Launches Cloud Hardware Subscriptions with TruScale Infrastructure Services appeared first on insideHPC.

|

|

by staff on (#48ZJE)

In this video, computational biologist Laura Boykin describes the threat to lives and livelihoods the whitefly represents, the international effort to fight it, and how supercomputing flips the script on a once unwinnable war. "Cray supports visionaries like Laura and her scientific colleagues in East Africa in combining computation and creativity to change outcomes." The post Truly Inspiring: Fighting World Hunger with Cray Supercomputers appeared first on insideHPC.

|

|

by staff on (#48Z7T)

Across the globe, governments are acknowledging the potential of AI technologies to impact our daily lives, from how we make purchasing decisions to improving our healthcare. But there are several reasons why government agencies may be hesitant to adopt AI technologies. A new insideHPC Guide, courtesy of Dell EMC and NVIDIA, explores what's next for government AI, as well as already tangible results of AI and machine learning. The post Today’s Application of Ai Within Government appeared first on insideHPC.

|

|

by Rich Brueckner on (#48ZJG)

Today NVIDIA announced that NVIDIA Nsight Systems 2019.1, a system-wide performance analysis tool, is now available for download. With it, developers can visualize application algorithms, identify large optimization opportunities, and tune/scale efficiently across CPUs and GPUs. "In this release, we introduce a wide range of new features, refinements, and fixes. The enhancements aim to improve a user’s ability to analyze neural network performance, locate graphical stutter, and increase pattern discoverability." The post NVIDIA steps up with Nsight Systems Performance Analysis Tool appeared first on insideHPC.

|

|

by Rich Brueckner on (#48ZDF)

In this podcast, the Radio Free HPC team asks whether a supercomputer can or cannot be an “AI Supercomputer.” The question came up after HPE announced a new AI system called Jean Zay that will double the capacity of French supercomputing. "So what are the differences between a traditional super and an AI super? According to Dan, it mostly comes down to how many GPUs the system is configured with, while Shahin and Henry think it has something to do with the datasets." The post Podcast: What is an Ai Supercomputer? appeared first on insideHPC.

|

|

by staff on (#48X11)

In this special guest feature, Paul Grun and Doug Ledford from the OpenFabrics Alliance describe the industry trends in the fabrics space, its state of affairs and emerging applications. "Originally, ‘high-performance fabrics’ were associated with large, exotic HPC machines. But in the modern world, these fabrics, which are based on technologies designed to improve application efficiency, performance, and scalability, are becoming more and more common in the commercial sphere because of the increasing demands being placed on commercial systems." The post The State of High-Performance Fabrics: A Chat with the OpenFabrics Alliance appeared first on insideHPC.

|

|

by staff on (#48X12)

Researchers at NERSC face the daunting task of moving 43 years’ worth of archival data across the network to new tape libraries, a whopping 120 petabytes! "Even with all of this in place, it will still take about two years to move 43 years’ worth of NERSC data. Several factors contribute to this lengthy copy operation, including the extreme amount of data to be moved and the need to balance user access to the archive." The post Moving Mountains of Data at NERSC appeared first on insideHPC.

|

|

by staff on (#48WVV)

Dr. Omar Ghattas from the University of Texas at Austin has been selected as the recipient of the 2019 SIAM Geosciences Career Prize. He is being recognized for “groundbreaking contributions in analysis, methods, algorithms, and software for grand challenge computational problems in geosciences, and for exceptional influence as mentor, educator, and collaborator.” The post Dr. Omar Ghattas Receives 2019 SIAM Geosciences Career Prize appeared first on insideHPC.

|

|

by staff on (#48WVX)

In this special guest feature, Wolfgang Gentzsch from The UberCloud writes that we’ve never been so close to ubiquitous computing for researchers and engineers. "High-performance computing continues to progress, but the next big step toward ubiquitous HPC is coming from software container technology based on Docker, facilitating software packaging and porting, ease of access and use, service stack automation and self-service, and simplifying software maintenance and support." The post HPC in the Hands of Every Engineer – With Software Containers appeared first on insideHPC.

|

|

by Rich Brueckner on (#48WQG)

Thomas Schwinge from Mentor gave this talk at FOSDEM'19. "Requiring only few changes to your existing source code, OpenACC allows for easy parallelization and code offloading to accelerators such as GPUs. We will present a short introduction of GCC and OpenACC, implementation status, examples, and performance results." The post Video: Speeding up Programs with OpenACC in GCC appeared first on insideHPC.

|

|

by staff on (#48V5N)

Over at Argonne, Nils Heinonen writes that researchers are using the open source Singularity framework as a kind of Rosetta Stone for running supercomputing code almost anywhere. "Once a containerized workflow is defined, its image can be snapshotted, archived, and preserved for future use. The snapshot itself represents a boon for scientific provenance by detailing the exact conditions under which given data were generated: in theory, by providing the machine, the software stack, and the parameters, one’s work can be completely reproduced." The post Argonne Looks to Singularity for HPC Code Portability appeared first on insideHPC.

|

|

by Rich Brueckner on (#48V5Q)

In this video from Arm HPC Asia 2019, Elsie Wahlig leads a panel discussion on Frontiers of AI deployments in HPC on Arm. "Topics at the workshop covered all aspects of the Arm server ecosystem, from chip design, hardware, software architecture and standardization to performance tuning, and applications in biology, medicine, meteorology, astronomy, geography etc. It is exciting to see that Arm servers are being used in so many areas, contributing significantly to the global economy." The post Video: Frontiers of AI Deployments in HPC on Arm appeared first on insideHPC.

|

|

by Rich Brueckner on (#48SR2)

The Jülich Supercomputing Centre in Germany is seeking a Computer Scientist in our Job of the Week. "The Institute of Bio- and Geosciences – Agrosphere contributes to an improved understanding and reliable prediction of hydrologic and biogeochemical processes in terrestrial systems. You will develop and apply advanced geoscientific simulation software in high-performance computing environments of the Jülich Supercomputing Centre." The post Job of the Week: Computer Scientist at the Jülich Supercomputing Centre appeared first on insideHPC.

|

|

by Rich Brueckner on (#48SMW)

Adrian Reber from Red Hat gave this talk at the FOSDEM'19 conference. "In this talk I want to give an introduction about the OpenHPC project. Why do we need something like OpenHPC? What are the goals of OpenHPC? Who is involved in OpenHPC and how is the project organized? What is the actual result of the OpenHPC project? It also has been some time (it was FOSDEM 2016) since OpenHPC was part of the HPC, Big Data and Data Science devroom, so that it seems a good opportunity for an OpenHPC status update and what has happened in the last three years." The post Video: OpenHPC Update appeared first on insideHPC.

|

|

by staff on (#48QSN)

Today the Barcelona Supercomputing Center announced it will foster the EUCANCan project to allow both research and cancer treatments to be shared and re-used by the European and Canadian scientific community. As demonstrated by earlier work, research that merges and reanalyzes biomedical data from different studies significantly increases the chances of new discoveries. The post BSC fosters EUCANCan Project to share and reuse cancer genomic data worldwide appeared first on insideHPC.

|

|

by Rich Brueckner on (#48QM2)

Christoph Angerer from NVIDIA gave this talk at FOSDEM'19. "The next big step in data science will combine the ease of use of common Python APIs, but with the power and scalability of GPU compute. The RAPIDS project is the first step in giving data scientists the ability to use familiar APIs and abstractions while taking advantage of the same technology that enables dramatic increases in speed in deep learning. This session highlights the progress that has been made on RAPIDS, discusses how you can get up and running doing data science on the GPU, and provides some use cases involving graph analytics as motivation." The post Rapids: Data Science on GPUs appeared first on insideHPC.

|

|

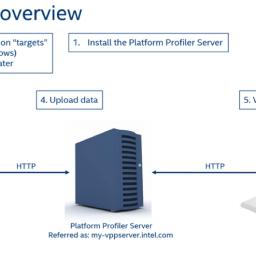

by Richard Friedman on (#48QM4)

The Intel VTune™ Amplifier Platform Profiler on Windows* and Linux* systems shows you critical data about the running platform that help identify common system configuration errors that may be causing performance issues and bottlenecks. Fixing these issues, or modifying the application to work around them, can greatly improve overall performance. The post Are Platform Configuration Problems Degrading Your Application’s Performance? appeared first on insideHPC.

|

|

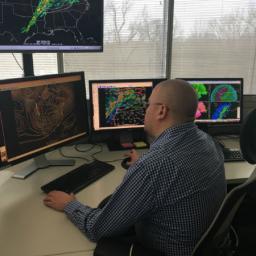

by staff on (#48QM6)

The United States is making exciting changes to how computer models will be developed in the future to support the nation’s weather and climate forecast system. NOAA and the National Center for Atmospheric Research (NCAR) have joined forces to help the nation’s weather and climate modeling scientists achieve mutual benefits through more strategic collaboration, shared resources and information. The post NOAA and NCAR team up for Weather and Climate Modeling appeared first on insideHPC.

|

|

by staff on (#48NH0)

Today Supermicro launched its Early Shipment Program for servers and storage systems that customers can validate using next-generation Intel Xeon Scalable processors, which are expected to be officially released later this year. "Supermicro’s entire X11 portfolio is optimized to fully leverage the next-generation Intel Xeon Scalable processors and future innovations including Intel Optane DC persistent memory and Intel Deep Learning Boost technology to enable more efficient AI (artificial intelligence) acceleration." The post Supermicro Early Shipments Available: Intel Xeon Servers with Optane DC Persistent Memory appeared first on insideHPC.

|

|

by staff on (#48NBR)

The ISC 2019 conference has announced the teams that will compete in their Student Cluster Competition. The annual event takes place June 16-20 in Hamburg, Germany. "Now in its eighth year, the ISC HPCAI Advisory Council Student Cluster Competition enables international STEM teams to take part in a real-time contest focused on advancing STEM disciplines and HPC skills development. To take home top honors, twelve teams will have the opportunity to showcase systems of their own design, adhering to strict power constraints and achieve the highest performance across a series of standard HPC benchmarks and applications." The post ISC 2019 Announces Lineup for Student Cluster Competition appeared first on insideHPC.

|

|

by staff on (#48NBS)

Today IBM announced an ambitious plan to create a global research hub to develop next-generation AI hardware and expand their joint research efforts in nanotechnology. As part of a $3 billion commitment, the IBM Research AI Hardware Center will be the nucleus of a new ecosystem of research and commercial partners collaborating with IBM researchers to further accelerate the development of AI-optimized hardware innovations. The post IBM Research Ai Hardware Center to Drive Next-Generation AI Chips appeared first on insideHPC.

|

|

by staff on (#48N6S)

In this video, NEC’s Oliver Tennert and AMD’s Dan Bounds describe how the two companies are developing high density computing solutions using AMD EPYC processors and liquid cooling for the aerospace and automotive industries. "More and more datacenters put a lot of efforts into reducing their cooling costs even further by making the leap to direct hot-water cooling. In this concept the water has an inlet temperature of up to 45 degrees Celsius and directly cools CPU, memory and other equipment by elements that are part of a closed water circuit. NEC works together with AMD to enable the best solution for each datacenter." The post NEC Steps Up with AMD EPYC Servers for Aerospace and Automotive appeared first on insideHPC.

|

|

by staff on (#48N2F)

In this special guest feature, Cydney Ewald Stevens from the HPC Ai Advisory Council writes that the Stanford HPC Conference will focus on using technology to build a better world. "AI and HPC practitioners share passions for cutting-edge technology and breakthrough R&D at Stanford University next week at the ninth annual Stanford Conference. The two-day conference will bring leaders from academia, government and industry together to share first-hand insights on innovative research, techniques, tools and technologies that are fueling economies, productivity and progress globally." The post Stanford HPC Conference: Innovating the Way to the Future appeared first on insideHPC.

|

|

by staff on (#48K3S)

Today ThinkParQ announced its Platinum Partnership with Pacific Teck in APAC. Founded in 2013, Pacific Teck brings cutting-edge technology products from around the world to the APAC region. Since 2017, Pacific Teck has been instrumental in the success and growth of BeeGFS deployments in APAC. The post BGFS File System Shows Momentum in Asia with Pacific Teck appeared first on insideHPC.

|

|

by Rich Brueckner on (#48JYJ)

Eduardo Arango from Sylabs gave this talk at FOSDEM'19. "Singularity is the most widely used container solution in high-performance computing. Enterprise users interested in AI, Deep Learning, compute-driven analytics, and IoT are increasingly demanding HPC-like resources. At runtime, Singularity blurs the lines between the container and the host system allowing users to read and write persistent data and leverage hardware like GPUs and InfiniBand with ease." The post Video: Reproducible Science with Containers on HPC through Singularity appeared first on insideHPC.

|

|

by staff on (#48JYM)

Today WekaIO announced record performance on the IO-500 10 Node Challenge benchmark. The Virtual Institute for I/O has placed WekaIO Matrix in first place on the IO-500 10 Node Challenge list, beating out massive supercomputer installations. VI4IO republished the 10 Node Challenge list with the new rankings indicating Matrix as number one—31 percent faster than IBM Spectrum Scale and 85 percent faster than DDN Lustre. The post WekaIO Beats Big Systems on the IO-500 10 Node Challenge appeared first on insideHPC.

|

|

by staff on (#48JS1)

Professor Michela Taufer from the University of Tennessee has been selected to receive a 2019 IBM Faculty Award. "This $20,000 USD award is highly competitive and recognizes her leadership in High Performance Computing and its importance to the computing industry. As HPC and Ai converge, Dr. Taufer will lead work with IBM to bring the IBM Onsite Deep Learning Workshop to the UT campus." The post SC19 Chair Michela Taufer Selected to Receive 2019 IBM Faculty Award appeared first on insideHPC.

|

|

by Rich Brueckner on (#48JS2)

The HPC Ai Advisory Council has posted the Agenda for the Stanford HPC Conference. The event takes place Feb. 14-15 in Palo Alto. "Focused on contributing to making the world a better place, our only US-based conference draws together renowned subject matter experts (SMEs) - from private and public sectors to startups and global giants, from across interests and industries in the valley and beyond - to explore the domains and disciplines fueling huge advances in new and ongoing research, innovation, and breakthrough discoveries." The post Agenda Posted for Stanford HPC Conference Next Week appeared first on insideHPC.

|

|

by Rich Brueckner on (#48GM9)

Damien Francois gave this talk at FOSDEM'19. "The world of HPC and the world of BigData are slowly, but surely, converging. The HPC world realizes that there are more to data storage than just files and that 'self-service' ideas are tempting. In the meantime, the BigData world realizes that co-processors and fast networks can really speedup analytics. And indeed, all major public Cloud services now have an HPC offering. And many academic HPC centres start to offer Cloud infrastructures and BigData-related tools." The post The convergence of HPC and BigData: What does it mean for HPC sysadmins? appeared first on insideHPC.

|

|

by staff on (#48GFG)

Today the European EGI Federation announced that it has reached 1,000,000 cores of high-throughput compute capacity for research. Headquartered in the Netherlands, EGI is a federation of almost 300 data centers worldwide and 21 cloud providers united by a mission to support research activities at all scales, from individuals to small research groups and large collaborations. The post EGI Federation Reaches 1,000,000 Cores appeared first on insideHPC.

|

|

by staff on (#48GAM)

Today DDN announced that RAID Inc. has been named a preferred reseller of DDN’s distribution of Lustre. "As the primary developer, maintainer and technical support provider for Lustre software, DDN implements solutions for extreme, data-intensive environments," said Paul Bloch, president and co-founder, DDN. “Having RAID Inc. so closely aligned with us provides a real advantage in delivering the most powerful solutions to customers.” The post RAID Inc. Becomes Preferred Lustre Reseller for DDN Whamcloud appeared first on insideHPC.

|

|

by staff on (#48GAN)

Today Atos announced that the Hartree Centre will soon become the home of the first Atos Quantum Learning Machine in the UK. "We’re thrilled to be enabling UK companies to explore and prepare for the future of quantum computing," said Alison Kennedy, Director of the STFC Hartree Centre. "This collaboration will build on our growing expertise in this exciting area of computing and result in more resilient technology solutions being developed for industry." The post Atos to Deploy Quantum Learning Machine at STFC Hartree Centre appeared first on insideHPC.

|

|

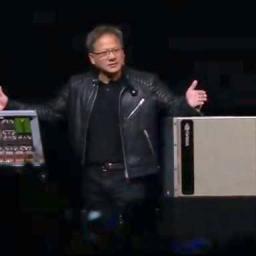

by staff on (#48EAH)

NVIDIA founder and CEO Jensen Huang will deliver the opening keynote address at the 10th annual GPU Technology Conference, being held March 17-21, in San Jose, Calif. "If you’re interested in AI, there’s no better place in the world to connect to a broad spectrum of developers and decision makers than GTC Silicon Valley," said Greg Estes, vice president of developer programs at NVIDIA. “This event has grown tenfold in 10 years for a reason — it’s where experts from academia, Fortune 500 enterprises and the public sector share their latest work furthering AI and other advanced technologies.” The post NVIDIA CEO Jensen Huang to Keynote World’s Premier AI Conference appeared first on insideHPC.

|

|

by Rich Brueckner on (#48EAJ)

In this podcast, Peter Braam looks at how the TensorFlow framework could be used to accelerate high performance computing. "Google has developed TensorFlow, a truly complete platform for ML. The performance of the platform is amazing, and it begs the question if it will be useful for HPC in a similar manner that GPUs heralded a revolution." The post Video: TensorFlow for HPC? appeared first on insideHPC.

|