by Rich Brueckner on (#4792V)

In this video from the DDN booth at SC18, Thomas Anthony from the University of Alabama presents: Computing - Accelerating Science & Impacting the Real World. "As the cornerstone service of ASA's charter, we provide High Performance Computing services free of charge to faculty, staff, and enrolled students of public schools, colleges, and universities in Alabama. This allows universities to create a trained workforce with the skills needed for jobs in engineering, science, mathematics, aerospace, and medical research fields." The post Video: Accelerating Science & Impacting the Real World appeared first on insideHPC.

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Field | Value |
|---|---|
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-03 03:30 |

by staff on (#478Y5)

Today the Women in High Performance Computing (WHPC) organization announced that Intel Corporation has become its first Corporate Champion. "Women represent less than 15% of the HPC sector; it is essential that we continue to work at the grassroots level and globally to grow the HPC talent pool and benefit from the diversity of talented women and other under-represented groups. With support from Intel, we can begin focusing on global programs and initiatives that can accelerate our goals and amplify our impact." The post Intel becomes First Corporate Champion for Women in HPC appeared first on insideHPC.

by staff on (#478Y6)

In this special guest feature, SC19 General Chair Michela Taufer catches up with Sunita and Jack Dongarra to discuss the way forward for the November conference in Denver. "By augmenting our models and our ability to do simulation, HPC enables us to understand and do things so much faster than we could in the past – and it will only get better in the future." The post Interview: HPC Thought Leaders Looking Forward to SC19 in Denver appeared first on insideHPC.

by Rich Brueckner on (#4767M)

At SC18 in Dallas, I had a chance to catch up with Gary Grider from LANL. "So we're forming a consortium to chase efficient computing. We see many of the HPC sites today seem to be headed down the path of buying machines that work really well with very dense linear algebra problems. The problem is: hardcore simulation can often not be a great fit on machines built for high Linpack numbers." The post Interview: Gary Grider from LANL on the new Efficient Mission-Centric Computing Consortium appeared first on insideHPC.

by staff on (#4767N)

With recent advances, high-performance thermoplastics now offer performance that rivals, and in some cases surpasses, that of their metal counterparts. Design engineers have to weigh several factors when choosing between metal and plastic quick disconnects. This guest article from CPC looks at the differences between metal and plastic liquid cooling connectors, and the benefits and challenges of each. The post Metal or Plastic Liquid Cooling Connectors? What You Should Consider appeared first on insideHPC.

by staff on (#4745Z)

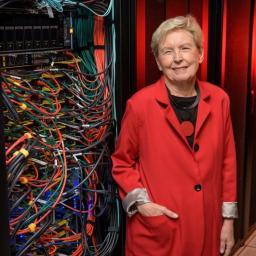

Penguin Computing has deployed a new HPC cluster at the University of Delaware. The Caviness cluster is named in honor of Jane Caviness, former director of Academic Computing Services at the University. "It is great to have our new cluster be named after Jane Caviness, who has played a very important role in establishing high performance computing at University of Delaware." The post Penguin Computing builds Caviness Cluster for Machine Learning at the University of Delaware appeared first on insideHPC.

by Rich Brueckner on (#4740Q)

GlobusWorld 2019 has issued its Call for Presentations. The event takes place May 1-2 in Chicago and centers on Globus, a secure, reliable research data management service that enables researchers to move, share, publish & discover data via a single interface. "We're seeking Globus users, managers and providers to present 'lightning talks' at GlobusWorld 2019. Presenters will include research computing leaders, system administrators, and others who use Globus at universities, national supercomputing centers, and other research facilities." The post Call for Presentations: GlobusWorld 2019 in Chicago appeared first on insideHPC.

by staff on (#4740S)

The battle against cancer is now being fought at the data level. A new AI startup called PAIGE hopes to revolutionize clinical diagnosis and treatment in pathology and oncology through the use of artificial intelligence. "Through its partnership with Igneous, PAIGE will be able to securely and efficiently manage 8 petabytes of unstructured data, including anonymized tumor scan images and clinical notes, as part of an integrated machine learning-based healthcare AI workflow anchored by an industry-leading Pure Storage FlashBlade and NVIDIA GPU compute cluster." The post NVIDIA and Pure Storage Power Ai for Oncology with PAIGE appeared first on insideHPC.

by staff on (#473W0)

Today Google Cloud announced public beta availability of NVIDIA T4 GPUs for machine learning workloads. Starting today, NVIDIA T4 GPU instances are available in the U.S. and Europe as well as several other regions across the globe, including Brazil, India, Japan and Singapore. “The T4 is the best GPU in our product portfolio for running inference workloads. Its high-performance characteristics for FP16, INT8, and INT4 allow you to run high-scale inference with flexible accuracy/performance tradeoffs that are not available on any other accelerator.” The post NVIDIA T4 GPUs Come to Google Cloud for High Speed Machine Learning appeared first on insideHPC.

by Rich Brueckner on (#473QF)

In this video from SC18, Dr. Daniel Reed moderates a panel discussion entitled: “If you can’t measure it, you can’t improve it”: Software Improvements from Power/Energy Measurement Capabilities. "We have made major gains in improving the energy efficiency of the facility as well as computing hardware, but there are still large gains to be had with software, particularly application software. Just tuning code for performance isn’t enough; the same time to solution can have very different power profiles." The post Dan Reed Panel on Energy Efficient Computing at SC18 appeared first on insideHPC.

by staff on (#471GN)

The Partnership for Advanced Computing in Europe (PRACE) has published a new report: PRACE in the EuroHPC Era. PRACE is an international non-profit association with its seat in Brussels. "The EuroHPC Joint Undertaking will advance the European supercomputer landscape by completing the infrastructure pyramid at the top level with European leadership-class supercomputers. In a context of a strong international competition with USA, China and Japan, this development is highly expected by all stakeholders of HPC in Europe. For the European HPC users from science and industry, i.e. industrials and SMEs, the seamless integration of these new top-level systems and services into the existing European HPC-ecosystem is an issue of paramount importance." The post New Report: PRACE in the EuroHPC Era appeared first on insideHPC.

by staff on (#471GQ)

Today Quobyte announced a partnership with EUROstor to expand the reach of its next-generation file system throughout the German, Austrian and Swiss marketplace to customers seeking scale-out NAS solutions for enterprise application workloads. "With scale-out NAS solutions becoming increasingly important within our market, Quobyte is an exciting expansion of our storage product portfolio," said Wolfgang Bauer, technical director of EUROstor. “With Quobyte’s flexibility, scalability and high performance, we can easily deliver storage solutions that are purpose-built to satisfy our customers’ needs. We trust there are a lot more interesting projects to come.” The post Quobyte Partners with EUROstor to Deliver Scale-Out NAS Solutions appeared first on insideHPC.

by staff on (#471BT)

In this special guest feature from Scientific Computing World, Robert Roe reports on new technology and 30 years of the US supercomputing conference at SC18 in Dallas. "From our volunteers to our exhibitors to our students and attendees – SC18 was inspirational," said SC18 general chair Ralph McEldowney. "Whether it was in technical sessions or on the exhibit floor, SC18 inspired people with the best in research, technology, and information sharing." The post Looking Back at SC18 and the Road Ahead to Exascale appeared first on insideHPC.

by Rich Brueckner on (#46Z4H)

The upcoming Rice University Oil and Gas HPC Conference will focus on the computational challenges and needs of the energy industry. The event takes place March 4-6, 2019 in Houston. "High-end computing and information technology continues to stand out across the industry as a critical business enabler and differentiator with a relatively well understood return on investment. However, challenges such as constantly changing technology landscape, increasing focus on software and software innovation, and escalating concerns around workforce development still remain. The agenda for the conference includes invited keynote and plenary speakers, parallel sessions made up of at least four presentations each and a student poster session." The post Industry Leaders prepare for Rice University Oil and Gas Conference in March appeared first on insideHPC.

by Rich Brueckner on (#46YYS)

In this video, Jay Kruemcke from SUSE presents: Five Things to Know About SLE HPC. "SUSE Linux Enterprise for High Performance Computing provides a parallel computing platform for high performance data analytics workloads such as artificial intelligence and machine learning. Fueled by the need for more compute power and scale, businesses around the world today are recognizing that a high performance computing infrastructure is vital to supporting the analytics applications of tomorrow." The post Video: Five Things to Know About SUSE Linux Enterprise for HPC appeared first on insideHPC.

by Rich Brueckner on (#46YYV)

Scientists and engineers at Berkeley Lab are busy preparing for Exascale supercomputing this week at the ECP Annual Meeting in Houston. Over a full five-day agenda, LBL researchers will contribute two plenaries, five tutorials, 15 breakouts, and 20 posters. "Sponsored by the Exascale Computing Project, the ECP Annual Meeting centers around the many technical accomplishments of our talented research teams, while providing a collaborative working forum that includes featured speakers, workshops, tutorials, and numerous planning and co-design meetings in support of integrated project understanding, team building and continued progress." The post Researchers Gear Up for Exascale at ECP Meeting in Houston appeared first on insideHPC.

by staff on (#46YYX)

In this video, Bill Jenkins from Intel presents an introduction to High Performance Computing. "This online training will provide a high-level introduction to high performance computing, the problem it solves and the vertical markets it solves it in. Rather than focusing on the step by step, this training will educate into the concepts and resources available to perform the data analytics process and even also discuss where accelerators can be used." The post An Introduction to High Performance Computing appeared first on insideHPC.

by staff on (#46X5E)

On January 7, the U.S. Department of Energy announced plans to award 189 grants totaling $33 million to 149 small businesses in 32 states. Funded through DOE’s Small Business Innovation Research (SBIR) and Small Business Technology Transfer (STTR) programs, these selections are for Phase I research and development. "Small businesses play a major role in spurring innovation and creating jobs in the U.S. economy. The SBIR and STTR programs were created by Congress to leverage small businesses to advance innovation at federal agencies." The post Want Room-temperature Quantum Memories? DOE Awards Small Business Research and Development Grants appeared first on insideHPC.

by staff on (#46X5F)

Computational scientists are invited to apply for the upcoming Argonne Training Program on Extreme-Scale Computing (ATPESC) this summer. "This program provides intensive hands-on training on the key skills, approaches, and tools to design, implement, and execute computational science and engineering applications on current supercomputers and the HPC systems of the future. As a bridge to that future, this two-week program fills many gaps that exist in the training computational scientists typically receive through formal education or other shorter courses." The post Apply Now for 2019 Argonne Training Program on Extreme-Scale Computing appeared first on insideHPC.

by Rich Brueckner on (#46VF9)

In this video from the DDN booth at SC18, John Bent from DDN presents: IME - Unlocking the Potential of NVMe. "DDN’s Infinite Memory Engine (IME) is a scale-out, software-defined, flash storage platform that streamlines the data path for application I/O. IME interfaces directly to applications and secures I/O via a data path that eliminates file system bottlenecks. With IME, architects can realize true flash-cache economics with a storage architecture that separates capacity from performance." The post The Infinite Memory Engine – Unlocking the Potential of NVMe appeared first on insideHPC.

by Rich Brueckner on (#46VCP)

Spectral Sciences in Massachusetts is seeking a Research Engineer in our Job of the Week. "The successful candidate will work within a small team of SSI scientists and engineers on a variety of projects in physical modeling and simulation, data analysis, and algorithm development. Typical projects include leveraging high-performance computing capabilities to simulate real events and comparison with available measured data or development and integration of key physical models within parallel software." The post Job of the Week: Research Engineer at Spectral Sciences appeared first on insideHPC.

by Rich Brueckner on (#46SYB)

In this video from the DDN booth at SC18, Peter Jones from DDN presents: Lustre Roadmap & Community Update. With its new Whamcloud business unit, DDN has already brought key Lustre developers on board to accelerate its high performance computing solutions. "This important acquisition reinforces DDN’s presence as the global market leader for data at scale, while providing Lustre customers with enhanced field support and a well-funded technology roadmap. The acquisition also enables DDN to expand Lustre’s leading position from high performance computing (HPC) and Exascale into high growth markets such as analytics, AI and hybrid cloud." The post Video: Lustre Roadmap & Community Update appeared first on insideHPC.

by Rich Brueckner on (#46SSF)

In this video from the Exascale Computing Project, Dave Montoya from LANL describes the continuous software integration effort at DOE facilities where exascale computers will be located sometime in the next 3-4 years. "A key aspect of the Exascale Computing Project’s continuous integration activities is ensuring that the software in development for exascale can efficiently be deployed at the facilities and that it properly blends with the facilities’ many software components. As is commonly understood in the realm of high-performance computing, integration is very challenging: both the hardware and software are complex, with a huge amount of dependencies, and creating the associated essential healthy software ecosystem requires abundant testing." The post Video: Ramping up for Exascale at the National Labs appeared first on insideHPC.

by Rich Brueckner on (#46QHM)

In this video from CES 2019, AMD President and CEO Dr. Lisa Su describes how the new AMD EPYC processors are changing the game for High Performance Computing. "This is an incredible time to be in technology as the industry pushes the envelope on high-performance computing to solve the biggest challenges we face together," said Su. “At AMD, we made big bets several years ago to accelerate the pace of innovation for high-performance computing, and 2019 will be an inflection point for the industry as we bring these new products to market." The post Video: AMD Steps up with renewed focus on High Performance Computing appeared first on insideHPC.

by Rich Brueckner on (#46PYG)

In this video from the DDN User Group at SC18, Steve Hindmarsh from the Crick Institute presents: Research Computing Storage at the Francis Crick Institute. "The Francis Crick Institute is dedicated to understanding the fundamental biology underlying health and disease. Our work is helping to understand why disease develops and to translate discoveries into new ways to prevent, diagnose and treat illnesses such as cancer, heart disease, stroke, infections and neurodegenerative diseases." The post Video: Research Computing Storage at the Francis Crick Institute appeared first on insideHPC.

by staff on (#46PYJ)

The DHPCC++19 conference has issued its Call for Papers. Held in conjunction with the IWOCL event, the Distributed & Heterogeneous Programming in C/C++ event takes place May 13, 2019 in Boston. "This will be the 3rd DHPCC++ event in partnership with IWOCL, the international OpenCL workshop with a focus on heterogeneous programming models for C and C++, covering all the programming models that have been designed to support heterogeneous programming in C and C++." The post Call for Papers: Distributed & Heterogeneous Programming in C/C++ Event in Boston appeared first on insideHPC.

by staff on (#46PS8)

In this podcast, Mike Bernhardt from ECP catches up with Sameer Shende to learn how the Performance Research Lab at the University of Oregon is helping to pave the way to Exascale. "Developers of parallel computing applications can well appreciate the Tuning and Analysis Utilities (TAU) performance evaluation tool—it helps them optimize their efforts. Sameer has worked with the TAU software for nearly two and a half decades and has released more than 200 versions of it. Whatever your application looks like, there’s a good chance that TAU can support it and help you improve your performance." The post Podcast: Improving Parallel Applications with the TAU tool appeared first on insideHPC.

by staff on (#46MMP)

Earlier this week, IBM unveiled a powerful new global weather forecasting system that the company says will provide the most accurate local weather forecasts ever seen worldwide. According to IBM, the new IBM Global High-Resolution Atmospheric Forecasting System (GRAF) will be the first hourly-updating commercial weather system able to predict phenomena as small as thunderstorms globally. Compared to existing models, it will provide a nearly 200% improvement in forecasting resolution for much of the globe (from 12 to 3 sq km). It will be available later this year. The post IBM Weather System to Improve Forecasting Around the World appeared first on insideHPC.

by Rich Brueckner on (#46MFB)

In this talk from the 2018 HiPEAC event, Philippe Notton from Atos describes how the European Processor Initiative will help Europe achieve sovereignty in chips for advanced computing. "We expect to achieve unprecedented levels of performance at very low power, and EPI’s HPC and automotive industrial partners are already considering the EPI platform for their product roadmaps." The post Video: An Update on the European Processing Initiative appeared first on insideHPC.

by staff on (#46MFD)

Scientists’ effort to map a portion of the sky in unprecedented detail is coming to an end, but their work to learn more about the expansion of the universe has just begun. "Using the Dark Energy Camera, a 520-megapixel digital camera mounted on the Blanco 4-meter telescope at the Cerro Tololo Inter-American Observatory in Chile, scientists on DES took data for 758 nights over six years. Over those nights, the survey generated 50 terabytes (that’s 50 trillion bytes) of data over its six observation seasons. That data is stored and analyzed at NCSA. Compute power for the project comes from NCSA’s NSF-funded Blue Waters Supercomputer, the University of Illinois Campus Cluster, and Fermilab." The post Supercomputing Dark Energy Survey Data through 2021 appeared first on insideHPC.

by staff on (#46MA7)

Today R Systems announced the expansion of its data center infrastructure and HPC managed services into Switch’s Core Campus in Las Vegas. "Including Switch data centers as part of the offering for R Systems enables us to continue growing with our customers. Since 2005 R Systems has focused exclusively on HPC, which has enabled us to build expertise about the applications, computing, networking and storage technologies that are unique to HPC," said R Systems Principal Brian Kucic. The post R Systems brings HPC Cloud to SUPERNAP in Las Vegas appeared first on insideHPC.

by staff on (#46HZZ)

Today IBM unveiled IBM Q System One, which the company describes as the world's first integrated universal approximate quantum computing system designed for scientific and commercial use. "The IBM Q System One is a major step forward in the commercialization of quantum computing," said Arvind Krishna, senior vice president of Hybrid Cloud and director of IBM Research. "This new system is critical in expanding quantum computing beyond the walls of the research lab as we work to develop practical quantum applications for business and science." The post IBM rolls out Quantum Computer for Commercial Use appeared first on insideHPC.

by Rich Brueckner on (#46HM7)

Today the Jülich Supercomputing Centre in Germany announced 32.4 million euros in funding for continued research into such diverse areas as quantum computing and neuromorphic computing. "With the new JUWELS system, the research center has a supercomputer that is one of the fastest in the world. Already sought after by researchers from all over Europe, the supercomputer is even used for simulations in brain research, such as the European Human Brain Project." The post Jülich Supercomputing Centre moves forward with 36 million euros of funding appeared first on insideHPC.

by staff on (#46HM9)

Today Mellanox announced that its 200 Gigabit HDR InfiniBand solutions were selected to accelerate a world-leading supercomputer at HLRS in Germany. The 5000-node supercomputer named “Hawk” will be built in 2019 and provide 24 petaflops of compute performance. By utilizing the InfiniBand fast data throughput and the smart In-Network Computing acceleration engines, HLRS users will be able to achieve the highest HPC and AI application performance, scalability and efficiency. The post Mellanox Powers New Hawk Supercomputer at HLRS with 200 Gigabit HDR InfiniBand appeared first on insideHPC.

by Rich Brueckner on (#46HMA)

In this special guest feature, Dr Rosemary Francis from Ellexus writes that data storage needs to be integral to your plans when moving HPC workloads to the Cloud. We can all admit to being pressured into investing in a new solution as soon as it becomes available. We want to be the fastest, the best-informed, […] The post Why you can save money by being left behind appeared first on insideHPC.

by Rich Brueckner on (#46F0Y)

The 2nd High Performance Machine Learning Workshop (HPML2019) has issued its Call for Papers. The May 14 workshop will be co-located with CCGrid (19th IEEE/ACM International Symposium on Cluster, Cloud, and Grid Computing) in Cyprus. "This workshop is intended to bring together the Machine Learning (ML), Artificial Intelligence (AI) and High Performance Computing (HPC) communities. In recent years, much progress has been made in Machine Learning and Artificial Intelligence in general. This progress required heavy use of high performance computers and accelerators. Moreover, ML and AI have become a 'killer application' for HPC and, consequently, driven much research in this area as well. These facts point to an important cross-fertilization that this workshop intends to nourish." The post Call for Papers: High Performance Machine Learning Workshop (HPML2019) in Cyprus appeared first on insideHPC.

by staff on (#46F0Z)

Today, Huawei announced "the industry's highest-performance ARM-based CPU." Called Kunpeng 920, the new CPU is designed to boost the development of computing in big data, distributed storage, and ARM-native application scenarios. "Kunpeng 920 integrates 64 cores at a frequency of 2.6 GHz. This chipset integrates 8-channel DDR4, and memory bandwidth exceeds incumbent offerings by 46%. System integration is also increased significantly through the two 100G RoCE ports. Kunpeng 920 supports PCIe 4.0 and CCIX interfaces, and provides 640 Gbps total bandwidth. In addition, the single-slot speed is twice that of the incumbent offering, effectively improving the performance of storage and various accelerators." The post Huawei Unveils “Industry’s Highest-Performance ARM-based CPU” appeared first on insideHPC.

by Rich Brueckner on (#46ET6)

Veteran HPC journalist Michael Feldman has departed TOP500.org to join The Next Platform as Senior Editor. Led by co-founders Nicole Hemsoth and Timothy Prickett Morgan, The Next Platform offers in-depth coverage of high-end computing at large enterprises, supercomputing centers, hyperscale data centers, and public clouds. "My new role also reunites me with some old friends, who just happen to be some of the best writers in the business," says Feldman. "Not surprisingly, my focus will be on HPC and all things related. These days, that covers a lot of territory since in many ways HPC has provided a useful model for other subject matter that is under the purview of The Next Platform, namely hyperscale, cloud, and high-end enterprise computing." The post Michael Feldman Joins The Next Platform as Senior Editor appeared first on insideHPC.

by Rich Brueckner on (#46EBC)

In this video from the DDN booth at SC18, Larry Wikelius from Marvell presents: Deploying Marvell's ThunderX2 at Scale in HPC Market. "The ThunderX2 product family is the company's second-generation 64-bit ARMv8-A server processor SoCs for datacenter, cloud and high-performance computing (HPC) applications. The family integrates fully out-of-order, high-performance custom cores supporting single- and dual-socket configurations. ThunderX2 is optimized to drive high computational performance delivering outstanding memory bandwidth and memory capacity." The post Video: Deploying Marvell’s ThunderX2 at Scale in HPC Market appeared first on insideHPC.

by Rich Brueckner on (#46CGS)

The 4th International Workshop on Performance Portable Programming models for Manycore or Accelerators (P^3MA) has issued its Call for Papers. This workshop will provide a forum to bring together researchers and developers to discuss the community’s proposals and solutions to performance portability. The post Call for Papers: International Workshop on Performance Portable Programming models for Manycore or Accelerators appeared first on insideHPC.

by Rich Brueckner on (#46CDQ)

In this video from the DDN booth at SC18, Andrey Kudryavtsev from Intel presents: Reimagining the Data Centre Memory and Storage Hierarchy. "Intel Optane DC persistent memory represents a new class of memory and storage technology architected specifically for data center usage. One that we believe fundamentally breaks through some of the constricting methods for using data that have governed computing for more than 50 years." The post Video: Reimagining the Datacenter Memory and Storage Hierarchy appeared first on insideHPC.

by staff on (#46B03)

In this video from SC18, Mike Bernhardt from the Exascale Computing Project talked with Salman Habib of Argonne National Laboratory about cosmological computer modeling and simulation. Habib explained that the ExaSky project is focused on developing a caliber of simulation that will use the coming exascale systems at maximal power. “Clearly, there will be different types of exascale machines,” he said, “and so they [DOE] want a simulation code that can use not just one type of computer, but multiple types, and with equal efficiency.” The post Video: Flying through the Universe with Supercomputing Power appeared first on insideHPC.

by Rich Brueckner on (#46B05)

Oak Ridge National Lab in Tennessee is seeking a Performance Engineer in our Job of the Week. "We are seeking to build a team of Performance Engineers who will serve as liaisons between the National Center for Computational Sciences (NCCS) and the users of the NCCS leadership computing resources, particularly Exascale Computing Project application development teams and will collaborate with the ECP application teams as they ready their software applications for the OLCF Exascale computer Frontier. These positions reside in the Scientific Computing Group in the National Center for Computational Sciences at Oak Ridge National Laboratory." The post Job of the Week: Performance Engineer at Oak Ridge National Lab appeared first on insideHPC.

by staff on (#468Z3)

Today One Stop Systems announced a $4 million purchase order with a $1 million customer extension option from a major OEM in the media and entertainment industry. "As our largest-ever follow-on purchase order, we believe it reflects our industry leadership in application specific, ruggedized servers featuring the latest innovations in GPU compute, flash storage and input/output available today," said OSS president and CEO, Steve Cooper. “With these products, we have raised the bar for those in media and entertainment production who demand only the best, with ultra-fast processing, networking and storage in a super-ruggedized chassis that provides the best protection in the most demanding physical environments.” The post Major Media and Entertainment OEM orders $4 Million HPC Gear from One Stop Systems appeared first on insideHPC.

by staff on (#468Z4)

Over at Argonne, Jared Sagoff writes that automotive manufacturers are leveraging the power of DOE supercomputers to simulate the combustion engines of the future. "As part of a partnership between the Argonne, Convergent Science, and Parallel Works, engine modelers are beginning to use machine learning algorithms and artificial intelligence to optimize their simulations. Now, this alliance recently received a Technology Commercialization Fund award from the DOE to complete this important project." The post Machine Learning Award Powers Engine Design at Argonne appeared first on insideHPC.

by staff on (#466RD)

In this video, C.S. Chang from the Princeton Plasma Physics Laboratory describes how his team is using the GPU-powered Summit supercomputer to simulate and predict plasma behavior for the next fusion reactor. "By using Summit, Chang’s team expects its highly scalable XGC code, a first-principles code that models the reactor and its magnetically confined plasma, could be simulated 10 times faster than current supercomputers allow. Such a speedup would give researchers an opportunity to model more complicated plasma edge phenomena, such as plasma turbulence and particle interactions with the reactor wall, at finer scales, leading to insights that could help ITER plan operations more effectively." The post Video: Fusion Research on the Summit Supercomputer appeared first on insideHPC.

by staff on (#466MF)

In this special guest feature, Wolfgang Gentzsch from the UberCloud writes that a new case study shows how AI can aid CAE. "Solving computational fluid dynamics (CFD) problems is demanding both in terms of computing power and simulation time, and requires deep expertise in CFD. In this UberCloud project #211, an Artificial Neural Network (ANN) has been applied to predicting the fluid flow given only the shape of the object that is to be simulated." The post Case Study: Deep Learning For Fluid Flow Prediction In The Cloud appeared first on insideHPC.

by staff on (#464EY)

In this video, Nicolas Charbonnier from ArmDevices.net interviews Fujitsu engineers working on the Post-K supercomputer in Japan. Built around Fujitsu's Arm-based A64FX processor, the Post-K supercomputer will likely be the fastest system in the world when it debuts in 2021. "Fujitsu A64FX is the new fastest Arm processor in the world, built on 7nm it has 2.7 TFLOPS performance per chip suitable for high-end HPC and AI, they aim to create with it the world’s fastest supercomputer with it by 2021." The post Video: Fujitsu Post-K Supercomputer to feature World’s Fastest Arm Processor appeared first on insideHPC.

by Rich Brueckner on (#464BD)

In this video from the DDN booth at SC18, Jacques Bessoudo from NVIDIA presents: Accelerating Ai Outcomes with the NVIDIA DGX Reference Architecture. "DDN is focused on solutions that enable customers to radically accelerate their AI and Machine Learning initiatives to gain competitive advantage sooner and to scale flexibly," said James Coomer, vice president of product management at DDN. “We have taken a holistic approach to this solution, ensuring that the DGX platform is maximally utilized and that customers can extract deeper insights more quickly.” The post Video: Accelerating Ai Outcomes with the NVIDIA DGX Reference Architecture appeared first on insideHPC.

by staff on (#46356)

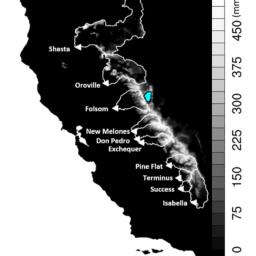

A future warmer world will almost certainly feature a decline in fresh water from the Sierra Nevada mountain snowpack. Now a new study by Berkeley Lab has found that the headwater regions of California’s 10 major reservoirs, representing nearly half of the state’s surface storage, could see an average 79 percent drop in peak snowpack water volume by 2100. "What’s more, the study found that peak timing, which has historically been April 1, could move up by as much as four weeks, meaning snow will melt earlier, thus increasing the time lag between when water is available and when it is most in demand." The post NERSC: Sierra Snowpack Could Drop Significantly By End of Century appeared first on insideHPC.
