|

by staff on (#48E02)

SkyScale's Tim Miller contends that the recent combination of One Stop Systems and Bressner creates an ideal European ‘AI on the Fly’ embedded HPC partner for OEMs addressing the growing HPC at the Edge and HPC on the move markets. In this guest post, Miller explores how high performance embedded computing can serve those markets. The post High Performance Embedded Computing appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-03 03:30 |

|

by Rich Brueckner on (#48E03)

Prof. Dr. Dieter Kranzlmüller from the Leibniz Supercomputing Centre (LRZ) in Germany gave this talk at the Intel User Forum. "The next generation of supercomputing has arrived at LRZ with SuperMUC-NG. Learn how LRZ scientists and researchers are using a supercomputer driven by Intel Xeon Scalable processors and optimizing the system for power and storage." The post Video: The Quest for the Highest Performance – Supporting Science with SuperMUC-NG appeared first on insideHPC.

|

|

by staff on (#48BZN)

The HudsonAlpha Institute for Biotechnology will host the second HudsonAlpha Tech Challenge February 22-24, 2019. This life sciences hackathon brings together students and professionals who attempt to solve challenges using an emerging technology, and apply it to biotech and the life sciences. "The HudsonAlpha Tech Challenge is a great opportunity to introduce genomics, genetics and biotech to existing and emerging leaders in computer science and technology," said Adam Hott, EdD, digital applications lead at HudsonAlpha. The post HudsonAlpha to host BioTech Hackathon in Huntsville appeared first on insideHPC.

|

|

by Rich Brueckner on (#48BZQ)

In this podcast, Doug Kothe from the Exascale Computing Project describes the 2019 ECP Annual Meeting. "Key topics to be covered at the meeting are discussions of future systems, software stack plans, and interactions with facilities. Several parallel sessions are also planned throughout the meeting." The post Podcast: Doug Kothe Looks back at the Exascale Computing Project Annual Meeting appeared first on insideHPC.

|

|

by staff on (#48AFC)

In this podcast, the Radio Free HPC team takes a second look at China’s plans for the Tianhe-3 exascale supercomputer. "According to news articles, Tianhe-3 will be 200 times faster than Tianhe-1, with 100x more storage. What we don’t know is if these comparisons are relative to Tianhe-1 or Tianhe-1A. The latter machine weighs in at 2.256 PFlop/s which means that Tianhe-3 might be as fast as 450 PFlop/s when complete. We also made a reference to a past episode, which we know you remember vividly, where we discussed China’s three-pronged strategy for exascale." The post Podcast: China Tianhe-3 Exascale machine is coming appeared first on insideHPC.

|

|

by Rich Brueckner on (#48AC2)

Reservoir Labs is seeking a Research Engineer in our Job of the Week. We are working on some of the most interesting and challenging problems related to high-performance computing and compilers, cybersecurity, advanced algorithms, and high-performance data analytics. "You will be closely guided and mentored by one of our experienced researchers, providing you with an unparalleled learning experience. You will have the opportunity to publish your own research papers, attend client meetings and academic conferences." The post Job of the Week: Research Engineer at Reservoir Labs in New York appeared first on insideHPC.

|

|

by staff on (#485MR)

Lustre is a widely-used parallel file system in the High Performance Computing (HPC) market. It offers the performance required for HPC workloads, with its parallel design, flexibility, and scalability. This sponsored post explores HPE scalable storage and the Lustre parallel file system, and outlines 'a middle ground' available via solutions that offer the combination of Community Lustre within a qualified hardware solution. The post HPE Scalable Storage for Lustre: The Middle Way appeared first on insideHPC.

|

|

by staff on (#488HR)

In this special guest post, Thomas Francis writes that the Cloud is stepping up to answer the computational challenges of AI and HPC. "Cloud delivers cost savings and efficiencies which are nice to have. But the reason companies are adopting the cloud is because suddenly it is a competitive advantage. And conversely, using your company's precious resources on buying and managing aging hardware is a disadvantage." The post Why HPC Cloud is your Competitive Advantage appeared first on insideHPC.

|

|

by Rich Brueckner on (#488C1)

In this video from the Intel User Forum at SC18, Prabhat from NERSC presents: Performance and Productivity in the Big Data Era. "At the National Energy Research Scientific Computing Center, HPC and AI converge and advance with Intel technologies. Explore how technologies, trends, and performance optimizations are applied to applications such as CosmoFlow using TensorFlow to help us better understand the universe." The post Video: Performance and Productivity in the Big Data Era appeared first on insideHPC.

|

|

by staff on (#486JP)

Today the Revolution In Simulation announced that the Aras Corporation has joined their technology alliance as a new sponsor for 2019. "As a non-profit seeking initiative, our sponsors are critical in fueling the Democratization of Simulation movement," said RevSim.Org co-founder, Rich McFall. "We are excited to see Aras joining other market leaders who are demonstrating their revolutionary thought leadership by supporting RevSim.Org with their expertise, resources and funding that benefits industrial users of simulation technologies." The post Aras Joins the Revolution in Simulation Technology Alliance appeared first on insideHPC.

|

|

by staff on (#486EZ)

Sandia National Laboratories researchers are looking to shape the future of computing through a series of quantum information science projects. As part of the work, they will collaborate to design and develop a new quantum computer that will use trapped atomic ion technology. "Quantum information science represents the next frontier in the information age," said U.S. Secretary of Energy Rick Perry this fall when he announced $218 million in DOE funding for the research. The post Sandia steps up to New Quantum Computing Projects appeared first on insideHPC.

|

|

by staff on (#485RX)

The next big supercomputer is out for bid. An RFP was released today for Crossroads, an 18 Megawatt system that will support the nation’s Stockpile Stewardship Program. "Los Alamos National Laboratory is proud to serve as the home of Crossroads. This high-performance computer will continue the Laboratory’s tradition of deploying unique capabilities to achieve our mission of national security science," said Thom Mason from LANL. The post LANL Solicits Bids for 18 MW Crossroads Supercomputer for Delivery in 2021 appeared first on insideHPC.

|

|

by staff on (#483TY)

NVIDIA is making deploying state-of-the-art AI data centers easier with their NVIDIA DGX-Ready Data Center program and the help of a growing lineup of NVIDIA partners. "With the NVIDIA DGX-Ready Data Center program, customers get access to world-class data center services offered through a qualified network of NVIDIA colocation partners. Through colocation, these customers can avoid getting mired in the challenges of facilities planning and instead focus on gaining insights from and innovating with their data. Using the program, businesses can deploy NVIDIA DGX systems and recently announced DGX reference architecture solutions from DDN, IBM Storage, NetApp and Pure Storage, with speed, simplicity and an affordable op-ex model." The post New NVIDIA DGX-Ready Program Allows Data Centers to Deploy AI with Ease appeared first on insideHPC.

|

|

by staff on (#483B3)

Today Intel announced that their new 28-core Intel Xeon W-3175X processor is now available. "Built for handling heavily threaded applications and tasks, the Intel Xeon W-3175X processor delivers uncompromising single- and all-core world-class performance for the most advanced professional creators and their demanding workloads. With the most cores and threads, CPU PCIe lanes, and memory capacity of any Intel desktop processor, the Intel Xeon W-3175X processor has the features that matter for massive mega-tasking projects such as film editing and 3D rendering." The post Intel steps up with 28 core Xeon Processor for High-End Workstations appeared first on insideHPC.

|

|

by Rich Brueckner on (#483B5)

In this video from the 2019 EasyBuild User Meeting, Kenneth Hoste from the University of Ghent presents: EasyBuild State of the Union. "EasyBuild is a software build and installation framework that allows you to manage (scientific) software on HPC systems in an efficient way. The EasyBuild User Meeting includes presentations by both EasyBuild users and developers, next to hands-on sessions." The post Video: EasyBuild State of the Union appeared first on insideHPC.

|

|

by staff on (#48354)

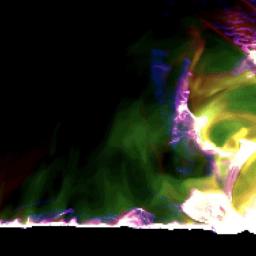

In this video, scientists simulate the formation of a solar flare, including for the first time the spectrum of light emissions known to be associated with such flares. "This work allows us to provide an explanation for why flares look like the way they do, not just at a single wavelength, but in visible wavelengths, in ultraviolet and extreme ultraviolet wavelengths, and in X-rays," said Mark Cheung, a staff physicist at Lockheed Martin Solar and Astrophysics Laboratory and a visiting scholar at Stanford University. "We are explaining the many colors of solar flares." The post Video: A comprehensive Simulation of a Solar Flare appeared first on insideHPC.

|

|

by Rich Brueckner on (#480Y3)

Hyperion Research has posted the Speaker Agenda for the HPC User Forum, which takes place April 1-3 in Santa Fe. "Key topics will include updates from senior officials on America's Exascale Computing Project, Japan and the EuroHPC exascale initiative, along with using clouds for HPC workloads, metadata and archiving, STEM education, HPDA-AI, and quantum computing." The post Agenda Posted: April HPC User Forum in Santa Fe appeared first on insideHPC.

|

|

by staff on (#480S7)

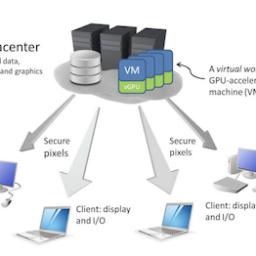

NVIDIA Quadro Virtual Workstations are now available on the Microsoft Azure Marketplace, enabling virtual workstation performance for more artists, engineers and designers. "Starting today, Microsoft Azure cloud customers in industries like architecture, entertainment, oil and gas, and manufacturing can easily deploy the advanced GPU-accelerated capabilities of Quadro Virtual Workstation (Quadro vWS) whenever, wherever they need it." The post NVIDIA Quadro Comes to the Microsoft Azure Cloud appeared first on insideHPC.

|

|

by Rich Brueckner on (#480S9)

In this talk from the Intel User Forum at SC18, Dan Stanzione from TACC presents: Computing for the Endless Frontier. "Coming to TACC in 2019, the Frontera supercomputer will be powered by Intel Xeon Scalable processors. It will be the first major HPC system to deploy the Intel Optane DC persistent memory technology for a range of HPC and AI uses. Anticipated early projects on Frontera include analyses of particle collisions from the Large Hadron Collider, global climate modeling, improved hurricane forecasting, and multi-messenger astronomy." The post Video: Computing for the Endless Frontier at TACC appeared first on insideHPC.

|

|

by staff on (#47YK3)

Last week in Finland, Mellanox announced that its 200 Gigabit HDR InfiniBand solutions were selected to accelerate a multi-phase supercomputer system at CSC – the Finnish IT Center for Science. The new supercomputers, set to be deployed in 2019 and 2020 by Atos, will serve the Finnish researchers in universities and research institutes, enhancing climate, renewable energy, astrophysics, nanomaterials and bioscience, among a wide range of exploration activities. The Finnish Meteorological Institute (FMI) will have their own separate partition for diverse simulation tasks ranging from ocean fluxes to atmospheric modeling and space physics. The post 200 Gigabit HDR InfiniBand to Power New Atos Supercomputers at CSC in Finland appeared first on insideHPC.

|

|

by staff on (#47YDX)

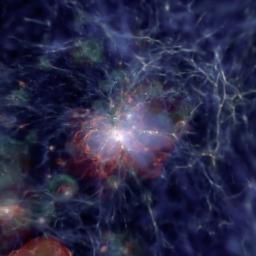

New research based on simulations using the Blue Waters supercomputer at NCSA reveals that when galaxies assemble extremely rapidly -- and sometimes violently -- that can lead to the formation of very massive black holes. In these rare galaxies, normal star formation is disrupted and black hole formation takes over. "We on the Blue Waters Project are very excited about this accomplishment and very pleased that Blue Waters, with its unique capabilities, once again enabled science that was not feasible on any other system," said Bill Kramer, the Blue Waters Principal Investigator and Director. The post Supercomputing the Formation of Black Holes appeared first on insideHPC.

|

|

by staff on (#47YDZ)

Rice University’s Ken Kennedy Institute will host the 12th annual Rice Oil and Gas High Performance Computing Conference (OGHPC) in Houston, Texas on March 4-6, 2019. "With the end of Moore’s law, challenges are mounting around a rapidly changing technology landscape," said Jan E. Odegard, Executive Director of the Ken Kennedy Institute. "The end of one era is an opportunity for advancements and the beginning of a new era – a renaissance for system architectures that highlights the need for investments in workforce, algorithms, software, and hardware to support system scalability." The post Rice Oil & Gas Conference in March to look for ways to meet HPC Demand appeared first on insideHPC.

|

|

by Rich Brueckner on (#47Y93)

In this slidecast, Greg Stewart from Novel Therm describes how the company provides HPC As a Service using geothermal energy. As a wholesale provider of Geothermal HPC Data Centers, Novel Therm is a truly renewable energy source option for your HPC data center. "Tell us what you need and we will create a fixed price, wholesale, green energy solution for your data center needs. We will save you money and time while saving the planet. Low Impact Geothermal Energy Is At The Core Of What We Do." The post Slidecast: Novel Therm Powers HPC as a Service with Geothermal Energy appeared first on insideHPC.

|

|

by Rich Brueckner on (#47WD3)

In this video from the Intel User Forum at SC18, Raj Hazra from Intel presents: Our Journey…Accelerating. "This talk highlights the innovations needed at the infrastructure, compute, and programming level to realize the “fourth paradigm.” See how Intel’s unmatched data-centric compute platform, as well as innovations in memory and storage technologies, enable the capabilities needed to meet current and future demands in the convergence of HPC and AI. Uncover performance results across a range of HPC and AI benchmarks and real applications on the recently announced Cascade Lake advanced performance processor including the design win at HLRN." The post Raj Hazra from Intel presents: Our Journey… Accelerating appeared first on insideHPC.

|

|

by staff on (#47WAA)

In this special guest feature, Robert Roe from Scientific Computing World writes that a whole new set of processor choices could shake up high performance computing. "While Intel is undoubtedly the king of the hill when it comes to HPC processors – with more than 90 per cent of the Top500 using Intel-based technologies – the advances made by other companies, such as AMD, the re-introduction of IBM and the maturing Arm ecosystem are all factors that mean that Intel faces stiffer competition than it has for a decade." The post Choice Comes to HPC: A Year in Processor Development appeared first on insideHPC.

|

|

by Rich Brueckner on (#47TWA)

The American University of Sharjah in the United Arab Emirates is seeking an HPC Computational Scientist in our Job of the Week. "American University of Sharjah (AUS) invites applications for an HPC computational scientist position commencing in the Fall 2019 semester at the latest. AUS is seeking a highly motivated senior programmer with proven experience in developing software for research applications, with emphasis on utilizing open source tools and platforms. The successful applicant is expected to promote and support the development of research-oriented applications that utilize the AUS HPC cluster in a variety of domains that include all engineering disciplines, biology and bioengineering, finance and more." The post Job of the Week: HPC Computational Scientist at the American University of Sharjah appeared first on insideHPC.

|

|

by staff on (#47TSK)

In this episode of Radio Free HPC, Dan, Henry, and Shahin start with a spirited discussion about IBM's recent announcement of a "crowd sourced weather prediction application." Henry was dubious as to whether Big Blue could get access to the data they need in order to truly put out a valuable product. Dan had questions about the value of the crowd sourced data and how it could be scrubbed in order to be useful. Shahin was pretty favorable towards IBM’s plans and believes that they will solve the problems that Henry and Dan raised. The post Podcast: Weather Forecasting Goes Crowdsourcing, Q means Quantum appeared first on insideHPC.

|

|

by staff on (#47RRV)

In this Intel Chip Chat podcast, Dr. Pradeep Dubey, Intel Fellow and director of its Parallel Computing Lab, explains why it makes sense for HPC and AI to come together and how Intel is supporting this convergence. "AI developers tend to be data scientists, focused on deriving intelligence and insights from massive amounts of digital data, rather than typical HPC programmers with deep system programming skills. Because Intel architecture serves as the foundation for both AI and HPC workloads, Intel is uniquely positioned to drive their convergence. Its technologies and products span processing, memory, and networking at ever-increasing levels of power and scalability." The post Podcast: How AI and HPC Are Converging with Support from Intel Technology appeared first on insideHPC.

|

|

by Rich Brueckner on (#47RRW)

In this video from Arm HPC Asia 2019, Fu Li from Quantum Cloud presents: Scale out AI Training on Massive Core System from HPC to Fabric based SOC. "The purpose of these workshops has been to bring together the leading Arm vendors, end users and open source development community to discuss the latest products, developments and open source software support in HPC on Arm." The post Video: Scale-out AI Training on Massive Core Systems from HPC to Fabric-based SOC appeared first on insideHPC.

|

|

by staff on (#47RJX)

Queen Mary University of London is leading a major new research project, ‘Living with Machines,’ which is set to be one of the biggest and most ambitious humanities and science research initiatives ever to launch in the UK. ‘Living with Machines’ is a major five-year inter-disciplinary research project with The Alan Turing Institute and the British Library and has been awarded £9.2 million from the UK Research and Innovation’s Strategic Priorities Fund. It will use artificial intelligence (AI) to analyze historical sources. The post Queen Mary University to Drive AI Analysis of History appeared first on insideHPC.

|

|

by Rich Brueckner on (#47REB)

In this video from the Intel User Forum at SC18, Dr. Sofia Vallecorsa from CERN openlab presents: Fast Simulation with Generative Adversarial Networks. "This talk presents an approach based on generative adversarial networks (GANs) to train them over multiple nodes using TensorFlow deep learning framework with Uber Engineering Horovod communication library. Preliminary results on scaling of training time demonstrate how HPC centers could be used to globally optimize AI-based models to meet a growing community need." The post Fast Simulation with Generative Adversarial Networks appeared first on insideHPC.

|

|

by staff on (#47P75)

Ayar Labs is leveraging its recent Series A funding to bring optical interconnect silicon to the Cloud, HPC, and Telecommunication markets. "We’re seeing significant industry interest in optical chip-to-chip connectivity -- based on our assessments, the Total Addressable Market for inter-chip optical interconnect is 4x the optical transceiver market," said Charlie Wuischpard, CEO of Ayar Labs. The post Ayar Labs Readies Optical Interconnect Silicon to Meet Industry Demand appeared first on insideHPC.

|

|

by staff on (#47P76)

The Nikhef National Institute for Subatomic Physics in Amsterdam has expanded its data center with a cluster of powerful Dell EMC PowerEdge R6415 servers powered by the AMD EPYC processor. "It is a continuous challenge to optimize the CPU and the system design of the servers in our datacenter. This is why we are always looking for the most efficient computing power with the best ratio of performance and budget. The innovative design of the Dell EMC PowerEdge R6415 servers with the AMD EPYC processor fits perfectly with our needs." The post Dell EMC Powers Powers Physics at Nikhef with AMD EPYC Servers appeared first on insideHPC.

|

|

by staff on (#47P1K)

For the second year, Lenovo, the largest worldwide provider of supercomputers, and Intel, a leader in the semiconductor industry, co-sponsored the University Artificial Intelligence Challenge, which aims to recognize outstanding university-based AI researchers for their achievements in the field. The company started this program in 2017, with the goal of encouraging AI research in universities world-wide. "Eight researchers from universities in North America, Europe, and Asia were selected as award winners, seven of them made it to SC18 and presented their findings in the Lenovo booth." The post Video: Lenovo & Intel Give Platform to University AI Researchers appeared first on insideHPC.

|

|

by Richard Friedman on (#47GTZ)

The Intel Distribution for Python takes advantage of the Intel® Advanced Vector Extensions (Intel® AVX) and multiple cores in the latest Intel architectures. By utilizing the highly optimized Intel MKL BLAS and LAPACK routines, key functions run up to 200 times faster on servers and 10 times faster on desktop systems. This means that existing Python applications will perform significantly better merely by switching to the Intel distribution. The post Accelerated Python for Data Science appeared first on insideHPC.

|

|

by Rich Brueckner on (#47KM6)

The American University of Sharjah is seeking an HPC System Administrator for a position commencing in the Fall 2019 semester at the latest. "AUS is seeking a highly motivated individual with proven experience in managing software and hardware HPC installations for research applications, with emphasis on utilizing open source tools and platform. The qualified candidate is expected to coordinate with AUS IT for integrating the newly acquired HPC cluster with the existing infrastructure. They should ensure HPC environment stability and manage and support new technology at both hardware and software level." The post Job of the Week: HPC System Administrator at the American University of Sharjah appeared first on insideHPC.

|

|

by Rich Brueckner on (#47KA3)

ORNL has issued its Call for Proposals for a set of global GPU Hackathons in 2019. "A GPU hackathon is a 5-day coding event in which teams of developers port their applications to run on GPUs, or optimize their applications that already run on GPUs. Each team consists of three or more developers who are intimately familiar with (some part of) their application, and they work alongside two mentors with GPU programming expertise. The mentors come from universities, national laboratories, supercomputing centers, government institutions, and vendors." The post Call For Proposals: Worldwide GPU Hackathons in 2019 appeared first on insideHPC.

|

|

by staff on (#47KA4)

In this special guest feature, Joe Landman from Scalability.org looks at technology trends that are driving High Performance Computing. "HPC has been moving relentlessly downmarket. Each wave of its motion has a destructive impact upon the old order, and opens up the market wider to more people. All the while growing the market." The post Reflections on HPC Past, Present, and Future appeared first on insideHPC.

|

|

by staff on (#47HAG)

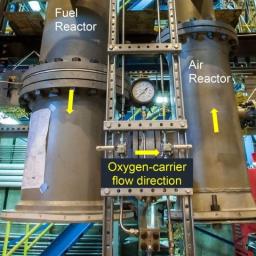

Researchers are looking to HPC to help engineer cost-effective carbon capture and storage technologies for tomorrow's power plants. "By combining new algorithmic approaches and a new software infrastructure, MFiX-Exa will leverage future exascale machines to optimize CLRs. Exascale will provide 50 times more computational science and data analytic application power than is possible with DOE high-performance computing systems such as Titan at the Oak Ridge Leadership Computing Facility (OLCF) and Sequoia at Lawrence Livermore National Laboratory." The post Supercomputing Cleaner Power Plants appeared first on insideHPC.

|

|

by staff on (#47H5K)

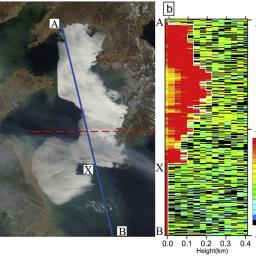

Over at the XSEDE blog, Kim Bruch from SDSC writes that an international team of researchers is using supercomputers to shed new light on how and why a particular type of sea fog forms. Through simulation, they hope to provide more accurate fog predictions that help reduce the number of maritime mishaps. "The researchers have been using the Comet supercomputer based at the San Diego Supercomputer Center (SDSC) at UC San Diego. To-date, the team has used about 2 million core hours." The post Supercomputing Sea Fog Development to Prevent Maritime Disasters appeared first on insideHPC.

|

|

by staff on (#47GZY)

HPE is building a new AI supercomputer for GENCI in France. Called Jean Zay, the 14 Petaflop system is part of an artificial intelligence initiative called for by President Macron to bolster the nation's scientific and economic growth. "At Hewlett Packard Enterprise, we continue to fuel the next frontier and unlock discoveries with our end-to-end HPC and AI offerings that hold a strong presence in France and have been further strengthened just in the past couple of years." The post France to Double Supercomputing Capacity with Jean Zay Ai System from HPE appeared first on insideHPC.

|

|

by staff on (#47ERH)

Last week, Canadian liquid cooling vendor CoolIT Systems announced that the company has partnered with Vistara Capital Partners as the company expands production capacity to service new and existing global data center customers. "With our order backlog expanding by 400% in the last year, access to additional capital is critical for continued success," said CoolIT Systems CFO, Peter Calverley. “With the creative financing package provided by Vistara the financial foundation is in place for 2019 to be another year of significant growth in data center liquid cooling sales for CoolIT.” The post As Liquid Cooling Gets Hot, CoolIT Systems Secures Round of Funding appeared first on insideHPC.

|

|

by staff on (#47ERJ)

Students and Young Researchers are encouraged to apply for the ISC Travel Grant program. It's a great way for HPC folks to get to the ISC High Performance conference, which takes place June 16-20 in Frankfurt, Germany. "As an added bonus, Dr. Julian Kunkel, of University of Reading, will mentor the selected grant recipients helping them get the most out of the conference by suggesting relevant program sessions and introducing them to experts in their research field." The post Apply Now for the ISC 2019 Travel Grant appeared first on insideHPC.

|

|

by staff on (#47ERM)

Oracle just announced the opening of a Toronto data center to support regional customer demand for Oracle Cloud Infrastructure. "Oracle’s next-generation cloud infrastructure offers the most flexibility in the public cloud, allowing companies to run traditional and cloud-native workloads on the same platform. With Oracle’s modern cloud regions, only Oracle can deliver the industry’s broadest, deepest, and fastest growing suite of cloud applications, Oracle Autonomous Database, and new services in security, Blockchain and Artificial Intelligence, all running on its enterprise-grade cloud infrastructure." The post Oracle Expands Cloud Business with Next-Gen Data Center in Canada appeared first on insideHPC.

|

|

by Rich Brueckner on (#47EKF)

"Today, the possibilities of an interconnected, heterogeneous and intelligent world are only just beginning to make themselves known. This stunning advancement in digital technology was made possible by ever-increasing performance at ever lower costs. However, physical limits mean we won’t be able to keep shrinking computing components while increasing performance for much longer. So where do we go from here? What are the main challenges and conditions for future developments, and where? The HiPEAC Vision 2019 explores all these questions, and more." The post HiPEAC Vision 2019 Looks to the Future of Computing appeared first on insideHPC.

|

|

by Rich Brueckner on (#47CMR)

In this video from SC18 in Dallas, CJ Newburn from NVIDIA describes how developers can quickly containerize their applications and how users can benefit from running their workloads with containers from the NVIDIA GPU Cloud. "A container essentially creates a self contained environment. Your application lives in that container along with everything the application depends on, so the whole bundle is self contained." The post Video: HPC Containers – Democratizing HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#47CMT)

Today, Bright Computing announced that Bechtle France has won a contract to be the sole distributor of Bright Computing's products and solutions to Groupe Logiciel’s academic and research institutions across France. "I'm excited that, together with our valued partner, Bechtle France, Bright Computing has signed an agreement to deliver our industry-leading HPC, Cloud and Data Science technologies to Groupe Logiciel," said Lee Carter, VP Alliances at Bright Computing. "Our goal at Bright Computing is to offer the best cluster management solutions on the planet. With a strong and loyal customer base that stretches across all geographies and industry sectors, our software empowers organizations to push the boundaries of HPC, Cloud, Deep Learning and Artificial Intelligence." The post Bright Computing Powers HPC at Groupe Logiciel appeared first on insideHPC.

|

|

by Rich Brueckner on (#47B3Q)

In this video from the European R Users Meeting, Henrik Bengtsson from the University of California San Francisco presents: A Future for R: Parallel and Distributed Processing in R for Everyone. "The future package is a powerful and elegant cross-platform framework for orchestrating asynchronous computations in R. It's ideal for working with computations that take a long time to complete; that would benefit from using distributed, parallel frameworks to make them complete faster; and that you'd rather not have locking up your interactive R session." The post A Future for R: Parallel and Distributed Processing in R for Everyone appeared first on insideHPC.

|

|

by Rich Brueckner on (#47B0W)

D.E. Shaw Research is seeking an HPC System Administrator in our Job of the Week. "Exceptional sysadmins sought to manage systems, storage, and network infrastructure for a New York–based interdisciplinary research group. Successful hires will be responsible for our servers and cluster environments. Our research effort is aimed at achieving major scientific advances in the field of biochemistry and fundamentally transforming the process of drug discovery." The post Job of the Week: System Administrator at D.E. Shaw Research appeared first on insideHPC.

|

|

by Rich Brueckner on (#479NX)

Today, the U.S. Department of Energy announced plans to provide $45 million for new research in chemical and materials sciences aimed at advancing the important emerging field of Quantum Information Science (QIS). "QIS will play a major role in shaping the future of computing and a range of other vitally important technologies," said DOE Under Secretary of Science Paul Dabbar. "This initiative ensures that America will remain on the cutting edge of the chemical and materials science breakthroughs that will form the basis for future QIS systems." The post DOE to Provide $45 Million for Materials Research in Quantum Information Science appeared first on insideHPC.

|