|

by Rich Brueckner on (#46327)

In this video from the DDN booth at SC18, Terrell Russell from the iRODS Consortium presents: Managing Data from the Edge to HPC. "The Integrated Rule-Oriented Data System (iRODS) is open source data management software used by research organizations and government agencies worldwide. iRODS is released as a production-level distribution aimed at deployment in mission critical environments. It virtualizes data storage resources, so users can take control of their data, regardless of where and on what device the data is stored." The post Video: Managing Data from the Edge to HPC with iRODS appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence


| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-03 03:30 |

|

by Rich Brueckner on (#46119)

In this podcast, the Radio Free HPC team looks back at the highlights of the 2018 CHPC Conference in South Africa. With over 500 attendees, the event featured a set of keynotes on high performance computing as well as a Student Cluster Challenge and a Cyber Security Competition. The post Radio Free HPC Reviews Highlights from the CHPC Conference in South Africa appeared first on insideHPC.

|

|

by Rich Brueckner on (#460W0)

In this video from the DDN booth at SC18, Laura Carriere from NCCS in Singapore presents: Centralizing Storage without going off the Rails. "The National Climate Change Secretariat (NCCS) was established on 1 July 2010 under the Prime Minister’s Office (PMO) to develop and implement Singapore's domestic and international policies and strategies to tackle climate change. NCCS is part of the Strategy Group which supports the Prime Minister and his Cabinet to establish priorities and strengthen strategic alignment across Government. The inclusion of NCCS enhances strategy-making and planning on vital issues that span multiple Government ministries and agencies." The post Video: Centralizing Storage without going off the Rails appeared first on insideHPC.

|

|

by staff on (#45ZCS)

HPE is now under contract to build and install a new AMD-powered supercomputer at HLRS in Germany. Called "Hawk," the new computing system will be 3.5 times faster than the existing HLRS supercomputer, Hazel Hen, and will be the world's fastest supercomputer for industrial production. Hawk will support academic research and industry — particularly in engineering-related fields — to advance applications for energy, climate, mobility, and health. "And if you are wondering about the name, well, Hawks eat Hazel Hens." The post HPE to Build 24 Petaflop Supercomputer with 64-Core AMD EPYC Processors for HLRS appeared first on insideHPC.

|

|

by Rich Brueckner on (#45ZCV)

In this talk from Computing Systems Week in Heraklion, Bastian Koller from the University of Stuttgart / HLRS asks whether HPC has come to a dead-end in Europe. He argues that real-life uses for applications are more important than having computing power for the sake of it, and suggests directions to take. "HPC and HPC Ecosystems are quickly evolving. Whereas the HPC community has a long history and is partially seen as a “dinosaur”, we see a lot of developments around HPC, namely HPDA, Deep Learning, Machine Learning etc. This keynote will have a glance on where we are today, technological trends and show with examples, how the communities around applications need to be supported to get ready for the new Era of High Performance Star (HP*), which covers the next evolution step of supercomputing." The post Video: Quo vadis HPC – Facing the Future appeared first on insideHPC.

|

|

by staff on (#45Y4H)

In this video from the DDN booth at SC18, Kurt Kuckein from DataDirect Networks presents an overview of DDN A³I (Accelerated, Any-Scale AI). "Engineered from the ground up for the AI-enabled data center, DDN A³I solutions are fully-optimized to accelerate AI applications and streamline DL workflows for greatest productivity. DDN A³I solutions make AI-powered innovation easy, with faster performance, effortless scale, and simplified operations—all backed by the data at scale experts." The post Video: Overview of DDN’s Accelerated, Any-Scale AI appeared first on insideHPC.

|

|

by Rich Brueckner on (#45Y1V)

NVIDIA is seeking a Systems Software Engineer for NvStreams in our Job of the Week. "This position will be part of a dynamic crew that develops and maintains systems software for complex heterogeneous computing systems that power disruptive products in various Automotive platforms. The Automotive System Software team plays a vital role in realizing the vision behind these products by building core technologies and platform solutions that are complex and industry leading." The post Job of the Week: Systems Software Engineer for NvStreams at NVIDIA appeared first on insideHPC.

|

|

by Rich Brueckner on (#45WA5)

In this podcast video, the Radio Free HPC team looks at the monumental IT challenges that Santa faces each Holiday Season. "With nearly 2 billion children to serve, Santa’s operations are an IT challenge on the grandest scale. If the world’s population keeps growing by 83 million people per year, Santa may need to build a hybrid cloud just to keep up. With billions of simultaneous queries, the Big Data analytics required will certainly require an 8-socket NUMA machine with 4 terabytes of central memory." The post Radio Free HPC Looks at Santa’s Big Data Challenges appeared first on insideHPC.

|

|

by staff on (#45W5G)

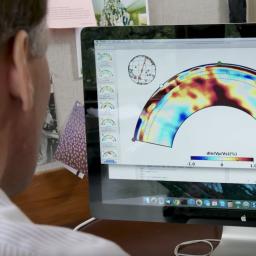

In this video, Jeroen Tromp from Princeton University describes how GPUs power 3D wave simulations that help researchers better understand the earth's interior. The Department of Geosciences at Princeton University is using Summit, powered by NVIDIA Volta GPUs, to observe and simulate seismic data, imaging the Earth's interior on a global scale. The post Video: Imaging the Earth’s Interior with the Summit Supercomputer appeared first on insideHPC.

|

|

by staff on (#45T4E)

In this special guest feature, Bill Lee from the InfiniBand Trade Association writes that the new TOP500 list has a lot to say about how interconnects matter for the world's most powerful supercomputers. "Once again, the List highlights that InfiniBand is the top choice for the most powerful and advanced supercomputers in the world, including the reigning #1 system – Oak Ridge National Laboratory’s (ORNL) Summit. The TOP500 List results not only report that InfiniBand accelerates the top three supercomputers in the world but is also the most used high-speed interconnect for the TOP500 systems." The post Why InfiniBand rules the roost in the TOP500 appeared first on insideHPC.

|

|

by staff on (#45T4F)

In this video, Thomas Wilhelm shows software developers how they can analyze their applications to determine whether they would benefit from persistent memory. "Persistent memory is adding a completely novel memory tier to the memory hierarchy that fits between DRAM and SSDs. In this video, we will show software developers how they can analyze their application in order to identify if they will benefit from persistent memory." The post Video: Will Persistent Memory solve your Performance Problems? appeared first on insideHPC.

|

|

by Rich Brueckner on (#45RH9)

NeSI / NIWA are seeking an HPC Senior Science Advisor and Platforms Architect to work nationally with scientific communities gathering their High Performance Computing & Data Science (HPC&DS) needs, and to provide strategic advice and expertise to help drive positive change to NIWA and NeSI's shared infrastructure and services platform. "Applicants should have a PhD in a relevant science domain, combined with a considerable scientific research sector experience in a similar organization or specialized facility of at least comparable size and scale as NIWA's HPC systems." The post Job of the Week: HPC Senior Science Advisor and Platforms Architect at NeSI in New Zealand appeared first on insideHPC.

|

|

by staff on (#45R81)

In this special guest feature, Carol Wells reviews the new book by Scott E. Page entitled The Model Thinker. "A hands-on reference for the working data scientist, 'The Model Thinker' challenges us to consider that the historical methods we have used for data analysis are no longer adequate given the complexity of today’s world. The book opens by making the case for a new way of using mathematical models to solve problems, offers a close look at a number of the models, then closes with a pair of demonstrations of the method." The post Book Review: The Model Thinker – A new way to look at Data Analysis appeared first on insideHPC.

|

|

by Rich Brueckner on (#45R4Q)

In this video from the DDN User Group at SC18, Sven Oehme from DDN presents: A Glimpse into the Future of I/O. "IME is DDN’s scale-out flash cache that is designed to accelerate applications beyond the capabilities of today’s file systems. IME manages I/O in an innovative way to avoid the bottlenecks and latencies of traditional I/O management and delivers the complete potential of flash all the way to connected applications. IME also features advanced data protection, flexible erasure coding and adaptive I/O routing, which together provide new levels of resilience and performance consistency." The post Video: A Glimpse into the Future of I/O appeared first on insideHPC.

|

|

by Rich Brueckner on (#45PT4)

In this video from the DDN booth at SC18, Addison Snell from Intersect360 Research presents an HPC Market Update. "Intersect360 Research's deep knowledge of HPC, coupled with strong marketing and consulting expertise, results in accurate intelligence for the HPC industry - insights and advice that allow customers to make decisions that are measurably positive to their business. The company's end-user-focused research is inclusive from both a technology perspective and a usage standpoint, allowing Intersect360 Research to provide its clients with total market models that include both traditional and emerging HPC applications." The post Video: HPC Market Update appeared first on insideHPC.

|

|

by Siddhartha Jana on (#45PR3)

In this special guest feature, SC19 Chair Michela Taufer writes that the conference theme of HPC Now is already resonating in a market sparked by Big Data & AI. "HPC is exciting, and not just because of the problems it will solve in the future. It’s exciting because of how it is being used right now to save and enhance lives. My own research spans many HPC areas, such as determining how HPC cyberinfrastructures can make it possible to determine how new drugs could turn protein functions on or off, or how food production could be improved by predicting when and where farmers should water their fields." The post For SC19 Next Year, HPC is Now appeared first on insideHPC.

|

|

by staff on (#45NAJ)

The ISC High Performance Travel Grant Program is now open for applications. Introduced in 2017, the Grant enables university students and young researchers to be a part of the ISC High Performance conference series. For recipients traveling from Europe or North Africa, the maximum funding is 1500 euros per person, and for the rest of the world, it is 2500 euros per person. ISC Group will also provide the grant recipients free registration for the entire conference, which takes place from June 16 – 20, in Frankfurt, Germany. The post Apply Now for the ISC High Performance Travel Grant Program appeared first on insideHPC.

|

|

by Rich Brueckner on (#45N76)

In this video from the DDN User Group at SC18, Glenn Lockwood from NERSC presents: Making Sense of Performance in the Era of Burst Buffers. "It really is important to divide burst buffers into their hardware architectures and software usage modes; different burst buffer architectures can provide the same usage modalities to users, and different modalities can be supported by the same architecture." The post Making Sense of Performance in the Era of Burst Buffers appeared first on insideHPC.

|

|

by staff on (#45K8G)

In this video, the "Illustris" scientific simulation begins just 12 million years after the Big Bang and illustrates the formation of stars, heavy elements, galaxies, exploding supernovae and dark matter over 14 billion years. "We hope that the Illustris simulation will be of interest to scientists of all disciplines as well as to the broader non-scientific community." The post Video: Illustris Simulation Depicts the Birth of the Universe appeared first on insideHPC.

|

|

by Rich Brueckner on (#45JE1)

ANSYS in Canonsburg, PA is seeking a Software Developer in our Job of the Week. "The Software Development Engineer involves developing, implementing, and maintaining functionality related to the ANSYS MAPDL application and Mechanical product line." The post Job of the Week: Software Developer at ANSYS appeared first on insideHPC.

|

|

by staff on (#45G3T)

The world's fastest supercomputer is now up and running production workloads at ORNL. "A year-long acceptance process for the 200-petaflop Summit supercomputer at Oak Ridge National Lab is now complete. Acceptance testing ensures that the supercomputer and its file system meet the functionality, performance, and stability requirements agreed upon by the facility and the vendor." The post World’s Fastest Supercomputer Now Running Production Workloads at ORNL appeared first on insideHPC.

|

|

by Rich Brueckner on (#45FT5)

Today the PASC19 conference team announced that Gordon Bell Prize-winner Deborah Weighill will keynote the show with a talk entitled: Investigating Epistatic and Pleiotropic Genetic Architectures in Bioenergy and Human. The event takes place June 12-14, 2019 in Zurich, Switzerland. The post PASC19 Conference Keynote to Feature Gordon-Bell-Prize-Winner on Ai-Powered Genomics appeared first on insideHPC.

|

|

by Richard Friedman on (#45FT7)

Code modernization means ensuring that an application makes full use of the performance potential of the underlying processors. And that means implementing vectorization, threading, memory caching, and fast algorithms wherever possible. But where do you begin? How do you take your complex, industrial-strength application code to the next performance level? The post Latest Intel Tools Make Code Modernization Possible appeared first on insideHPC.

|

|

by staff on (#45E0C)

The complete ISC 2019 contributed program is now open for submissions. The event takes place June 16–20, 2019, in Frankfurt, Germany. "We encourage engineers, scientists, young researchers, students and active members of the global HPC community to participate in next year’s conference. Submissions are welcome in the following sessions: Research Papers, Research Posters, Project Posters, BoFs, PhD Forum, Tutorials and Workshops." The post Call for Participation: ISC 2019 in Frankfurt appeared first on insideHPC.

|

|

by staff on (#45DNC)

Today’s competitive cyclist benefits from more lightweight, aerodynamic and rigid bike models – and, in a similar way, the prototyping process for manufacturers has become less of a heavy load – in terms of both time and cost – thanks to technological advances in simulation software. "Wind tunnel analyses are very important, but they can be expensive, time consuming and you need to have the athlete available. So, although they are extremely important for the validation of the model, a combination of wind analysis and CFD simulation might be extremely useful." The post Supercomputing the Future of Cycling appeared first on insideHPC.

|

|

by Rich Brueckner on (#458EC)

In this video from the 2018 CHPC Conference in South Africa, Trish Damkroger from Intel describes how the company is advancing the use of high performance computing on the African Continent. "In her Keynote talk at CHPC, Trish described the changing landscape of HPC, key trends, and the convergence of HPC-AI-HPDA that is transforming our industry and will fuel HPC to fulfill its potential as a scientific tool for insight and innovation." The post Video: How Intel is Advancing High Performance Computing at CHPC in South Africa appeared first on insideHPC.

|

|

by Rich Brueckner on (#45B98)

In this video, Gísli Kr. from Advania describes how the company enables customers to migrate HPC workloads to the company's sustainable datacenters in Iceland. "When leveraging Advania’s HPCaaS you have the flexibility to address any workload increase as needed and only pay for the added resources used. By committing resources to our HPC-as-a-Service, Advania offers extremely competitive prices for CPU resources, a price that is unprecedented in the HPC on demand or cloud (IaaS) industry. Additionally Advania is able to reserve resources to accommodate both temporary and permanent requirements for added resources." The post Video: Advania Simplifies Moving HPC to the Cloud appeared first on insideHPC.

|

|

by staff on (#45B59)

In this video, Scott Gibson discusses the ExaWind project for windmill simulation with Michael Sprague from NREL. ExaWind is part of the ECP, which is building applications that will scale to tomorrow's Exascale machines. "Sprague also explains why the simulation is important because it demonstrates that the physics models of the ExaWind team will perform well on large computers and paves the way for the team to improve the models and direct simulation capability toward the exascale platform when it’s ready. He added that, ultimately, the team plans to simulate tens of large turbines within a large wind farm." The post Video: Optimizing Wind Power with the ExaWind Project at NREL appeared first on insideHPC.

|

|

by staff on (#458KY)

Over at the IBM Blog, IBM Fellow Hillary Hunter writes that the company anticipates that the world’s volume of digital data will exceed 44 zettabytes, an astounding number. "IBM has worked to build the industry’s most complete data science platform. Integrated with NVIDIA GPUs and software designed specifically for AI and the most data-intensive workloads, IBM has infused AI into offerings that clients can access regardless of their deployment model. Today, we take the next step in that journey in announcing the next evolution of our collaboration with NVIDIA. We plan to leverage their new data science toolkit, RAPIDS, across our portfolio so that our clients can enhance the performance of machine learning and data analytics." The post IBM’s Plan to bring Machine Learning Capabilities to Data Scientists Everywhere appeared first on insideHPC.

|

|

by staff on (#458M0)

Today Violin Systems announced the appointment of a new CEO and the acquisition of X-IO Storage. "This has been a year of rebirth, of growth and of tremendous success for Violin," said CEO Mark Lewis. "That success has been fueled by advancing the already robust feature set of our flagship product, the addition of a product line that complements our existing portfolio of solutions and a team of individuals committed to ensuring customers around the world have the opportunity to add our consistent extreme performance storage to their data centers. With the tremendous success we achieved this past year, Violin is well positioned to dominate the marketplace in 2019 and beyond." The post Violin Systems Acquires X-IO Storage on Road to Recovery appeared first on insideHPC.

|

|

by Rich Brueckner on (#4589S)

Atos has announced plans to build a BullSequana XH2000 for IT4Innovations in the Czech Republic. The new supercomputer will be located in the National Supercomputing Center IT4Innovations, which is part of the Technical University of Ostrava, and will be 8 times more powerful than its predecessor Anselm, which was installed in 2013. The post Atos to build BullSequana Supercomputer in Czech Republic appeared first on insideHPC.

|

|

by Rich Brueckner on (#456Q3)

In this video from SC18 in Dallas, James Coomer from DDN describes how the company powers HPC and Machine Learning applications. "Organizations around the world are leveraging DDN’s people, technology, performance and innovation to achieve their greatest visions and make revolutionary insights and discoveries! Designed, optimized and right-sized for Commercial HPC, Higher Education and Exascale Computing, our full range of DDN products and solutions are changing the landscape of HPC and delivering the most value with the greatest operational efficiency. Meet with our team of technologists to see how DDN is delivering the most optimized and efficient storage solutions for HPC, AI, and Hybrid Cloud." The post Video: How DDN Powers HPC & Ai Applications appeared first on insideHPC.

|

|

by Rich Brueckner on (#456EY)

In this video, NVIDIA CEO Jensen Huang showcases new weather prediction capabilities enabled by GPUs. The demo was initially shown on the big screen at SC18 in Dallas for a crowd of more than 700 conference attendees. "We’re looking at a future prediction of microclimates," Huang said. "The map is the product of a GPU-accelerated COSMO weather prediction model. It offers resolution down to one square kilometer as well as 80 altitude layers — from the ground to as high as 20 kilometers. The model can predict two days of weather in just 20 minutes running on a single NVIDIA DGX-2 supercomputer. That’s computing muscle equivalent to 75 dual-socket CPU nodes." The post Video: Two Days of Weather Predicted in Just 20 Minutes appeared first on insideHPC.

|

|

by Rich Brueckner on (#4565K)

The Swiss HPC Conference has issued its Call for Participation. Held in conjunction with the HPCXXL User Group, the four-day event takes place April 1-4, 2019 in Lugano, Switzerland. "Explore the domains and disciplines driving change and progress at an unprecedented pace, join the HPC-AI Advisory Council (HPCAIAC), the Swiss National Supercomputing Centre (CSCS) and HPCXXL Board for the 10th annual Swiss Conference and HPCXXL Winter meeting. Conference sessions are open to submissions exploring the vast domains of HPC & AI - architectures, applications and usage - from emerging trends and hot topics to big breakthroughs, best practices and much more." The post Call for Participation: Swiss HPC Conference & HPCXXL Meeting in Lugano appeared first on insideHPC.

|

|

by Rich Brueckner on (#4560T)

In this video from ICT2018, Mateo Valero from the Barcelona Supercomputing Centre presents: HPC and the Future of Computing. "Europe is going in the right direction with EuroHPC and this must be sustained in the long-term. BSC fully supports this initiative and will do everything we can to help make it a success," said Professor Valero, noting that much had been done since his previous keynote at ICT 2015 in Lisbon, where he already stressed the importance of developing European HPC technology. The post Video: HPC and the Future of Computing appeared first on insideHPC.

|

|

by staff on (#455W7)

AMD recently announced two new Radeon Instinct compute products including the AMD Radeon Instinct MI60 and Radeon Instinct MI50 accelerators, which are the first GPUs in the world based on the advanced 7nm FinFET process technology. The company has made numerous improvements on these new products, including optimized deep learning operations. This guest post from AMD outlines the key features of its new Radeon Instinct compute product line. The post World’s First 7nm GPU and Fastest Double Precision PCIe Card appeared first on insideHPC.

|

|

by staff on (#454AE)

In this video, the Microsoft Quantum Team describes how Quantum computers have the potential to solve the world’s hardest computational problems and alter the economic, industrial, academic, and societal landscape. In just hours or days, a quantum computer can solve complex problems that would otherwise take billions of years to solve. "To unlock these potential applications, the Microsoft Quantum Development Kit includes a new chemistry library that allows chemists to simulate molecular interactions and explore quantum algorithms for real-world applications in the chemistry domain." The post Simulating nature with the new Microsoft Quantum Development Kit chemistry library appeared first on insideHPC.

|

|

by Rich Brueckner on (#454AG)

The OpenFabrics Alliance has issued their Call for Participation for the 2019 OFA Workshop. The event takes place March 20-21 in Austin, Texas. "The annual OFA Workshop is a premier means of fostering collaboration among those who develop fabrics, deploy fabrics, and create applications that rely on fabrics. It is the only event of its kind where fabric developers and users can discuss emerging fabric technologies, collaborate on future industry requirements, and address problems that exist today." The post Call for Participation: OFA Workshop in Austin appeared first on insideHPC.

|

|

by Rich Brueckner on (#452DQ)

Booz Allen is seeking a Data Scientist in our Job of the Week. "We have an opportunity for you to use your analytical skills to improve geospatial intelligence. You’ll work closely with your customer to understand their questions and needs, then dig into their data-rich environment to find the pieces of their information puzzle. You’ll explore data from various sources, discover patterns and previously hidden insights to address business problems, and use the right combination of tools and frameworks to turn that set of disparate data points into objective answers to help senior leadership make informed decisions. You’ll provide your customer with a deep understanding of their data, what it all means, and how they can use it. Join us as we use data science for good in geospatial intelligence." The post Job of the Week: Data Scientist at Booz Allen appeared first on insideHPC.

|

|

by staff on (#452BP)

Today Atos announced plans to deploy a large-scale supercomputer at C-DAC in India. This contract is part of the NSM (National Supercomputing Mission), a 7-year plan of INR 4500 crores (~$650M) led by the Government of India which aims to create a network of over 70 high-performance supercomputing facilities for various academic and research institutions across India. "We’re delighted to officially become today the technology partner of C-DAC for HPC-related platforms and to participate in India’s prestigious NSM (National Supercomputing Mission) program. We are honored that our BullSequana supercomputers will be empowering Indian academic and R&D institutions across the country to accelerate their research and at the same time support India’s ambition to be a leader in HPC." The post Atos to Build Bull Supercomputer for C-DAC in India appeared first on insideHPC.

|

|

by staff on (#450KR)

Today IBM Research released a 2018 retrospective and blog essay by Dr. Dario Gil, COO of IBM Research, that provides a sneak-peek into the future of AI. "We have curated a collection of one hundred IBM Research AI papers we have published this year, authored by talented researchers and scientists from our twelve global Labs. These scientific advancements are core to our mission to invent the next set of fundamental AI technologies that will take us from today’s “narrow” AI to a new era of “broad” AI, where the potential of the technology can be unlocked across AI developers, enterprise adopters and end-users." The post IBM Publishes Compendium of Ai Research Papers appeared first on insideHPC.

|

|

by Rich Brueckner on (#450KT)

In this video from SC18, Karan Batta from Oracle describes how the company provides high performance computing in the Cloud with Bare Metal speed. "Oracle Bare Metal Cloud Services (BMCS) public cloud infrastructure. Oracle BMCS is a new generation of scalable, inexpensive and performant compute, network and storage infrastructure that combines internet cloud scale architecture with enterprise scale-up bare metal capabilities, providing the ideal platform for demanding High Performance Computing workloads." The post Oracle Offers Bare Metal Instances for HPC in the Cloud appeared first on insideHPC.

|

|

by staff on (#44YFX)

This week NVIDIA announced that the company has broken a total of six performance records on a broad set of AI benchmarks. As a full suite, the benchmarks cover a variety of workloads and infrastructure scale – ranging from 16 GPUs on one node to up to 640 GPUs across 80 nodes. "Backed by Google, Intel, Baidu, NVIDIA and dozens more technology leaders, the new MLPerf benchmark suite measures a wide range of deep learning workloads. Aiming to serve as the industry’s first objective AI benchmark suite, it covers such areas as computer vision, language translation, personalized recommendations and reinforcement learning tasks." The post NVIDIA Sets Six Records in AI Performance appeared first on insideHPC.

|

|

by Rich Brueckner on (#44YAE)

In this video from SC18 in Dallas, Altair CTO Sam Mahalingam describes how the company's PBS Works software helps manufacturers build better products with design innovation driven by HPC. Now, with the advent of Machine Learning and analytics, the company is helping customers move forward to a new age of data-driven design. After that, Chief Scientist Andrea Casotto from Altair describes how Altair's "obsessively efficient" approach drives EDA automation. The post Video: How Altair Powers HPC with PBS Works appeared first on insideHPC.

|

|

by Rich Brueckner on (#44VE5)

In this video, Dell EMC specialists and CoolIT technicians build the Ohio Supercomputing Center's newest, most efficient supercomputer system, the Pitzer Cluster. Named for Russell M. Pitzer, a co-founder of the center and emeritus professor of chemistry at The Ohio State University, the Pitzer Cluster is expected to be at full production status and available to clients in November. The new system will power a wide range of research from understanding the human genome to mapping the global spread of viruses. The post Time-Lapse Video: Building the Pitzer Cluster at the Ohio Supercomputing Center appeared first on insideHPC.

|

|

by Rich Brueckner on (#44VE7)

In this video from SC18 in Dallas, Madhu Matta from Lenovo describes how the company is driving HPC & AI technologies for Science, Research, and Enterprises across the globe. "Lenovo cares about solving real-world problems, and working with researchers is one of the best ways to gather insights from those whose daily work involves high-computing data and analytics to do just that." The post How Lenovo is Helping Build Ai Solutions to Solve the World’s Toughest Challenges appeared first on insideHPC.

|

|

by Rich Brueckner on (#44VE9)

In this video from SC18 in Dallas, Scott Tease from Lenovo describes how the company is leading the TOP500 with innovative HPC cooling technologies. "At #8 on the TOP500, the Lenovo-built, hot-water cooled SuperMUC system at the LRZ in Germany is one of the most power efficient supercomputers on the planet. With more than 241,000 cores and a combined peak performance of the two installation phases of more than 6.8 Petaflops." The post Video: Lenovo Leads the TOP500 with Innovative HPC Cooling Technologies appeared first on insideHPC.

|

|

by staff on (#44S5B)

In this video, Mike Bernhardt from the Exascale Computing Project catches up with ORNL's David Bernholdt at SC18. They discuss the supercomputing conference, his career, the evolution and significance of the Message Passing Interface (MPI) in parallel computing, and how ECP has influenced his team’s efforts. The post Interview: The Importance of the Message Passing Interface to Supercomputing appeared first on insideHPC.

|

|

by Rich Brueckner on (#44RZT)

NOAA is out with their 2018 Arctic Report Card and the news is not good, folks. Issued annually since 2006, the Arctic Report Card is a timely and peer-reviewed source for clear, reliable and concise environmental information on the current state of different components of the Arctic environmental system relative to historical records. "The Report Card is intended for a wide audience, including scientists, teachers, students, decision-makers and the general public interested in the Arctic environment and science." The post NOAA Report: Effects of Persistent Arctic Warming Continue to Mount appeared first on insideHPC.

|

|

by Rich Brueckner on (#44RVH)

In this video from SC18 in Dallas, Yan Fisher and Dan McGuan from Red Hat describe the company's powerful software solutions for HPC and AI workloads. "All supercomputers on the coveted Top500 list run on Linux, a scalable operating system that has matured over the years to run some of the most critical workloads and in many cases has displaced proprietary operating systems in the process. For the past two decades, Red Hat Enterprise Linux has served as the foundation for building software stacks for many supercomputers. We are looking to continue this trend with the next generation of systems that seek to break the exascale threshold." The post Red Hat Steps Up with HPC Software Solutions at SC18 appeared first on insideHPC.

|