|

by Rich Brueckner on (#43G2H)

In this video from the NVIDIA Showcase event at SC18, Jensen Huang hosts a demo of Singularity containers running a full galaxy simulation. "This has to be the best Container demo ever," said Huang. "As part of that effort, Huang announced new multi-node HPC and visualization containers for the NGC container registry, which allow supercomputing users to run GPU-accelerated applications on large-scale clusters. NVIDIA also announced a new NGC-Ready program, including workstations and servers from top vendors." The post Singularity Containers Power NVIDIA Full Galaxy Simulation appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence


| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-03 07:00 |

|

by Rich Brueckner on (#43DKS)

In this video from SC18, Philip Maher from Tyan describes how the company is packaging AMD EPYC processors with innovative server configurations for tackling data-intensive workloads. "AMD EPYC processors are based on the 14nm 'Zen' x86 core architecture with 32 cores and 64 threads, featuring 8 memory channels and up to 16 DIMMs per socket. The new powerful processor allows TYAN to offer the optimal balance of computing, memory and I/O for the customers. By adopting the latest AMD EPYC processor technology, TYAN brings high memory channel bandwidth and PCI Express high speed I/O connectivity features to enterprises and datacenters." The post AMD EPYC Powers Tyan Servers for Data-intensive Computing appeared first on insideHPC.

|

|

by staff on (#43DFE)

Last week at SC18, Atos announced support for AMD EPYC processors in its upcoming BullSequana X range of supercomputers. The next generation AMD EPYC processor (codenamed Rome) is expected to be available in Atos’ new BullSequana XH2000 liquid cooled supercomputer in 2019. The post Atos Adds AMD processors to BullSequana X Line of Supercomputers appeared first on insideHPC.

|

|

by staff on (#43BY3)

Last week at SC18 in Dallas, the Exascale Computing Project released a portion of the next version of collaboratively developed products that compose the ECP software stack, including libraries and embedded software compilers. "Mike Heroux, ECP Software Technology director, said in an interview at SC18 that the software pieces in this release represent new capabilities and, in most instances, are highly tested and quite robust, and point toward exascale computing architectures." The post Video: ECP Launches Extreme-Scale Scientific Software Stack 0.1 Beta appeared first on insideHPC.

|

|

by ralphwells on (#43BPQ)

Last week at SC18, Tyan showcased their Thunder SX GT62H-B7106 storage product line featuring Intel Xeon Scalable processors, large memory capacity and NVMe connectivity for high performance software defined storage applications. "New data-intensive applications, such as analytics and deep learning are changing data processing workflows. Data centers need powerful storage architectures that can extract economic value by managing massive amounts of data efficiently," said Danny Hsu, Vice President of MiTAC Computing Technology Corporation’s TYAN Business Unit. "The Thunder SX GT62H-B7106 is optimized for data center environment. The platform provides an outstanding foundation for all-flash storage by leveraging NVMe technology." The post TYAN Storage Server Steps up with 10 NVMe Bays of High Performance Flash appeared first on insideHPC.

|

|

by ralphwells on (#43B7B)

Last week at SC18, E8 Storage announced it is expanding its technology partnership with Mellanox with an integrated solution built on Mellanox BlueField SmartNICs. Customers will be able to maximize computing resources by pairing the E8 agent with the SmartNIC. The new integration will let users take advantage of CPU offload to overcome application performance bottlenecks. The post Mellanox BlueField SmartNICs Accelerate E8 Storage appeared first on insideHPC.

|

|

by staff on (#43B1Y)

The SC18 conference drew a record-breaking 13,071 attendees, making it the largest SC conference of all time. As the premier international conference showcasing high performance computing, networking, storage, and analysis, the conference and exhibition infused the local economy with more than $40 million in revenue, according to the local Dallas Convention Bureau. The post Record SC18 Attendance a Bellwether for Growth in HPC Market appeared first on insideHPC.

|

|

by Rich Brueckner on (#438QV)

In this video from SC18 in Dallas, Marc Hamilton from NVIDIA describes the all-new overclocked DGX-2H supercomputer. Built by NVIDIA, a cluster of 36 DGX-2H devices with 3 Petaflops of LINPACK performance was just ranked #61 on the TOP500 list of the world's fastest supercomputers. The post Overclocked NVIDIA DGX-2H Cluster Lands at #61 on the TOP500 appeared first on insideHPC.

|

|

by Rich Brueckner on (#438JZ)

In this video from SC18 in Dallas, Dr. Sofia Vallecorsa from CERN OpenLab describes how AI is being used in the design of experiments for the Large Hadron Collider. "An award-winning effort at CERN has demonstrated potential to significantly change how the physics based modeling and simulation communities view machine learning. The CERN team demonstrated that AI-based models have the potential to act as orders-of-magnitude-faster replacements for computationally expensive tasks in simulation, while maintaining a remarkable level of accuracy." The post Video: How Ai is helping Scientists with the Large Hadron Collider appeared first on insideHPC.

|

|

by staff on (#438K1)

High performance computing manufacturers are increasingly deploying liquid cooling. To avoid damage to electronic equipment due to leaks, secure drip-free connections are essential. Quick disconnects for HPC applications simplify connector selection. And with expensive electronics at stake, understanding the components in liquid cooling systems is critical. This article details what to look for when seeking the optimal termination for connectors, a way to help ensure leak-free performance. The post The Right Terminations for Reliable Liquid Cooling in HPC appeared first on insideHPC.

|

|

by staff on (#438ER)

In this special guest post, Axel Huebl looks at the TOP500 and HPCG with an eye on power efficiency trends to watch on the road to Exascale. "This post will focus on efficiency, in terms of performance per Watt, simply because system power envelope is a major constraint for upcoming Exascale systems. With the great numbers from TOP500, we try to extend theoretical estimates from theoretical Flop/Ws of individual compute hardware to system scale." The post The Green HPCG List and the Road to Exascale appeared first on insideHPC.

|

|

by staff on (#438ET)

Earlier this week CSC in Finland announced that Atos will build its next-generation supercomputer for academic research. "The initial delivery will consist of the first partition of the supercomputer and its tightly integrated fast storage. The solution is based on next-generation Intel Xeon processors, code named Cascade Lake, and HDR InfiniBand interconnect from Mellanox." The post Atos to Build Finland’s Newest Supercomputer appeared first on insideHPC.

|

|

by staff on (#436V6)

In this video from CGTN, China's Tsinghua University takes the Student Cluster Competition at the SC18 conference in Dallas. The win was the final step in a Triple Crown for the team, as they won similar competitions in Asia and Germany earlier this year. "With sponsorship from hardware and software vendor partners, student teams design and build small clusters, learn designated scientific applications, apply optimization techniques for their chosen architectures, and compete in a non-stop, 48-hour challenge at the SC conference to complete a real-world scientific workload, showing off their HPC knowledge for conference attendees and judges." The post Video: China’s Tsinghua University Takes the Student Cluster Competition appeared first on insideHPC.

|

|

by Rich Brueckner on (#43560)

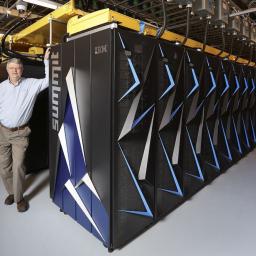

In this podcast, the Radio Free HPC team looks back on the highlights of SC18 and the newest TOP500 list of the world’s fastest supercomputers.Buddy Bland shows off Summit, the world’s fastest supercomputer at ORNL. "The latest TOP500 list of the world’s fastest supercomputers is out, a remarkable ranking that shows five Department of Energy supercomputers in the top 10, with the first two captured by Summit at Oak Ridge and Sierra at Livermore. With the number one and number two systems on the planet, the “Rebel Alliance†vendors of IBM, Mellanox, and NVIDIA stand far and tall above the others."The post Radio Free HPC Runs Down the TOP500 Fastest Supercomputers appeared first on insideHPC.

|

|

by Rich Brueckner on (#43561)

The Institute for Defense Analyses (IDA) in La Jolla is seeking a Lead Systems Engineer in our Job of the Week. "We are seeking a Lead Systems Engineer, who with minimal supervision, envisions, designs, administers, and maintains multiple IT systems, servers and software. Applies advanced subject matter expertise to develop solutions to solve a full range of complex problems." The post Job of the Week: Lead Systems Engineer at Defense Analyses (IDA) in San Diego appeared first on insideHPC.

|

|

by ralphwells on (#433A2)

Two months after its introduction, the NVIDIA T4 GPU is featured in 57 separate server designs from the world’s leading computer makers. It is also available in the cloud, with the first availability of the T4 for Google Cloud Platform customers. "Just 60 days after the T4’s launch, it’s now available in the cloud and is supported by a worldwide network of server makers. The T4 gives today’s public and private clouds the performance and efficiency needed for compute-intensive workloads at scale." The post NVIDIA’s New Turing T4 GPU is going gangbusters in the Cloud Space appeared first on insideHPC.

|

|

by ralphwells on (#43358)

"With Bitfusion along with Mellanox and VMware, IT can now offer an ability to mix bare metal and virtual machine environments, such that GPUs in any configuration can be attached to any virtual machine in the organization, enabling easy access of GPUs to everyone in the organization," said Subbu Rama, co-founder and chief product officer, Bitfusion. "IT can now pool together resources and offer an elastic GPU as a service to their organizations." The post Bitfusion Enables InfiniBand-Attached GPUs on Any VM appeared first on insideHPC.

|

|

by Rich Brueckner on (#4330C)

In this video from SC18 in Dallas, Thor Sewell from Intel describes the company's pending Cascade Lake Advanced Performance chip. "This next-gen platform doubles the cores per socket from an Intel system by joining a number of Cascade Lake Xeon dies together on a single package with the blue team's Ultra Path Interconnect, or UPI. Intel will allow Cascade Lake-AP servers to employ up to two-socket (2S) topologies, for as many as 96 cores per server." The post Intel Offers Sneak Peek at Cascade Lake Advanced Performance edition for HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#430RB)

In this video from SC18, Raj Hazra describes how Intel is driving the convergence of HPC and AI. "To meet the new computational challenges presented by this AI and HPC convergence, HPC is expanding beyond its traditional role of modeling and simulation to encompass visualization, analytics, and machine learning. Intel scientists and engineers will be available to discuss how to implement AI capabilities into your current HPC environments and demo how new, more powerful HPC platforms can be applied to meet your computational needs now and in the future." The post Video: Intel Driving HPC on the Road to Exascale appeared first on insideHPC.

|

|

by ralphwells on (#430KQ)

Indiana University staff and faculty will build the world’s first single-channel 400-gigabit-per-second-capable network for research and education at SC18. The connection will be capable of transmitting 50 gigabytes of data every second, or enough to stream 16,000 ultra-high-definition movies. The demonstration, “Wide area workflows at 400 Gbps,” is IU’s submission to the conference’s annual Network Research Exhibition, which spotlights innovation in emerging network hardware, protocols, and advanced network-intensive scientific applications. The post Indiana University Demonstrates World’s First Single-Channel 400G Network appeared first on insideHPC.
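
The figures in this item can be cross-checked with a little arithmetic. The sketch below converts the 400 Gbps link rate to gigabytes per second and derives the per-stream bitrate implied by the 16,000-stream claim. The function names are illustrative, decimal (SI) units are assumed, and the per-stream figure is a derived value, not one stated by IU.

```python
BITS_PER_BYTE = 8


def gbps_to_gigabytes_per_second(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (decimal units)."""
    return gbps / BITS_PER_BYTE


def implied_stream_mbps(link_gbps: float, streams: int) -> float:
    """Bitrate per stream in megabits per second if the link is shared evenly."""
    return link_gbps * 1000 / streams


if __name__ == "__main__":
    # 400 Gbps and 16,000 streams are the figures quoted in the item above.
    print(gbps_to_gigabytes_per_second(400))
    print(implied_stream_mbps(400, 16_000))
```

Under these assumptions the link works out to 50 gigabytes per second, matching the item, and an even split across 16,000 streams leaves about 25 Mbps per stream.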

|

|

by Rich Brueckner on (#430E1)

"Cloud computing offers a potential solution by allowing people to create and access computing resources on demand. Yet meeting the complex software demands of an HPC application can be quite challenging in a cloud environment. In addition, running HPC workloads on virtualized infrastructure may result in unacceptable performance penalties for some workloads. Because of these issues, relatively few organizations have run production HPC workloads in either private or public clouds." The post How Intel is Fostering HPC in the Cloud appeared first on insideHPC.

|

|

by Rich Brueckner on (#430KS)

In this video from SC18 in Dallas, Trish Damkroger describes how Intel is pushing the limits of HPC and Machine Learning with a full suite of Hardware, Software, and Cloud technologies.

|

|

by ralphwells on (#42XQG)

Today HLRS in Germany announced plans to deploy a next-generation supercomputer that is 3.5 times faster than its current system. The upcoming system, which HLRS has named Hawk, will be the world’s fastest supercomputer for industrial production, powering computational engineering and research across science and industrial fields to advance applications in energy, climate, mobility, and health. The post HPE to build Hawk Supercomputer for HLRS in Germany appeared first on insideHPC.

|

|

by Rich Brueckner on (#42XQJ)

"The emerging AI community on HPC infrastructure is critical to achieving the vision of AI," said Pradeep Dubey, Intel Fellow. "Machines that don’t just crunch numbers, but help us make better and more informed complex decisions. Scalability is the key to AI-HPC so scientists can address the big compute, big data challenges facing them and to make sense from the wealth of measured and modeled or simulated data that is now available to them." The post How Intel is Driving the Convergence of HPC & Ai appeared first on insideHPC.

|

|

by Rich Brueckner on (#42XKK)

In this video from SC18 in Dallas, Dieter Kranzlmueller from LRZ in Germany describes how Intel powers the SuperMUC-NG supercomputer. "The 19.4 Petaflop machine ranks number 8 among the fastest supercomputers in the world. SuperMUC-NG is in its start-up phase, and will be ready for full production runs in 2019. The machine, built in partnership with Lenovo and Intel, has a theoretical peak of 26.9 petaflops, and is comprised of 6,400 compute nodes based on Intel Xeon Scalable processors." The post Intel Powers SuperMUC-NG Supercomputer at LRZ in Germany appeared first on insideHPC.

|

|

by staff on (#42XKN)

Today Hyperion Research announced that the 2018 Hyperion Award for Innovation Excellence goes to UberCloud and its project partners. "This work represents a breakthrough in demonstrating the high value of computational modeling and simulation in improving the clinical application of non-invasive electro-stimulation of the human brain in schizophrenia and the potential to apply this technology to the treatment of other neuropsychiatric disorders such as depression and Parkinson’s disease." The post UberCloud Wins Hyperion HPC Innovation Excellence Award with Neuromodulation Project appeared first on insideHPC.

|

|

by staff on (#42V4X)

Today Ayar Labs announced that Charlie Wuischpard, former vice president and general manager at Intel, has joined Ayar Labs as CEO and a member of the board of directors. “With his track record of driving growth in both small and large companies, forming deep trusted relationships with high performance computing industry participants, and delivering value to stakeholders, we know he will lead Ayar Labs to growth in the advanced computing and data center markets.” The post HPC Veteran Charlie Wuischpard Joins Optical Startup Ayar Labs appeared first on insideHPC.

|

|

by staff on (#42RPF)

Today OrionX Research announced that Bitcoin and BTC.com top the first release of the CryptoSuper500 list. The list recognizes cryptocurrency mining as a new form of supercomputing and tracks the top mining pools. “The growth of the cryptocurrency market has put the spotlight on emerging decentralized applications, the new ways in which they are funded, and the software stack on which they are built.” Cryptocurrency technologies include blockchain, consensus algorithms, digital wallets, and utility and security tokens. The post Podcast: Announcing the New CryptoSuper500 List appeared first on insideHPC.

|

|

by Rich Brueckner on (#42V16)

In this video, Dan Stanzione from TACC describes how Intel technologies will power the World's Fastest Supercomputer in Academia at the Texas Advanced Computing Center. "If completed today, Frontera would be the fifth most powerful system in the world, the third fastest in the U.S. and the largest at any university. For comparison, Frontera will be about twice as powerful as Stampede2." The post Video: Intel to Power Fastest Supercomputer in Academia at TACC appeared first on insideHPC.

|

|

by staff on (#42V18)

Today Rescale announced its launch into academia with their ScaleX Pro for Students and ScaleX Pro for Classroom products. “Rescale’s ScaleX Pro for Students and ScaleX Pro for Classroom solve for today’s academia pain points by providing students with the ability to run projects immediately and unique access to different technology partner programs such as to cloud service providers.” The post Rescale Brings Cloud HPC to Academia appeared first on insideHPC.

|

|

by ralphwells on (#42V19)

Today NVIDIA showcased its HPC leadership in the TOP500 list of the world’s fastest supercomputers. The closely watched list shows a 48 percent jump in one year in the number of systems using NVIDIA GPU accelerators. The total climbed to 127 from 86 a year ago, and is three times greater than five years ago. “With the end of Moore’s Law, a new HPC market has emerged, fueled by new AI and machine learning workloads. These rely as never before on our high performance, highly efficient GPU platform to provide the power required to address the most challenging problems in science and society.” The post NVIDIA Powers New Performance Records on TOP500 List appeared first on insideHPC.

|

|

by Rich Brueckner on (#42T21)

In this video, Beenish Zia from Intel presents: BigDL Open Source Machine Learning Framework for Apache Spark. "BigDL is a distributed deep learning library for Apache Spark. Using BigDL, you can write deep learning applications as Scala or Python programs and take advantage of the power of scalable Spark clusters. This article introduces BigDL, shows you how to build the library on a variety of platforms, and provides examples of BigDL in action." The post Slidecast: BigDL Open Source Machine Learning Framework for Apache Spark appeared first on insideHPC.

|

|

by Rich Brueckner on (#42SYD)

We are pleased to offer our readers a Livestream of the HPC Inspires Plenary from SC18 in Dallas. "Simulation and modeling along with AI are being applied to some of our most challenging global threats and humanitarian crises. The SC18 plenary session will explore the capacity of HPC to help mitigate human suffering and advance our capacity to protect the most vulnerable, identify methods to produce enough food globally and ensure the safety of our planet and natural resources." The post Video Replay: HPC Inspires Plenary from SC18 appeared first on insideHPC.

|

|

by staff on (#42RTA)

Unlocking the bigger-picture meaning from raw data volumes is no easy task. Unfortunately, that means that many important insights remain hidden within the untapped data which quietly floods data centers around the globe each day. Today’s advanced applications require faster and increasingly powerful hardware and storage technologies to make sense of the data deluge. Intel seeks to address this critical trend with a new class of future Intel® Xeon® Scalable processors, code-named Cascade Lake. The post New Class of Intel Xeon Scalable Processors Break Through Performance Bottlenecks appeared first on insideHPC.

|

|

by staff on (#42RPE)

The latest TOP500 list of the world's fastest supercomputers is out, a remarkable ranking that shows five Department of Energy supercomputers in the top 10, with the first two captured by Summit at Oak Ridge and Sierra at Livermore. With the number one and number two systems on the planet, the "Rebel Alliance" vendors of IBM, Mellanox, and NVIDIA stand far and tall above the others. The post New TOP500 List topped by DOE Supercomputers appeared first on insideHPC.

|

|

by staff on (#42QK6)

The Women in High Performance Computing (WHPC) organization will be leading the conversation on diversity and inclusion this week at SC18 in Dallas. "The movement is growing. More and more allies are joining in efforts to support diversity and encourage inclusion. Women in HPC members’ efforts are increasing and we are organizing at the grassroots level. We are now a force that is fostering positive change in the community," says Dr. Toni Collis, Chair and Co-Founder of WHPC and Chief Business Development Officer at Appentra Solutions. The post Women in HPC Events on deck for SC18 appeared first on insideHPC.

|

|

by staff on (#42NHC)

In this special guest post, Peter ffoulkes writes that automation software from companies like XTREME-D is making HPC datacenters more productive every day. "One such example of these capabilities comes from startup XTREME-D, which provides a simple point-and-click user interface based on HPC workload templates and the XTREME-Stargate cluster portal, which acts as a “super head node” to provide simple access to virtual cluster resources." The post Instant Gratification Takes Too Long and Costs Too Much for HPC. Or Does It? appeared first on insideHPC.

|

|

by staff on (#42NHD)

Today OFA issued their Call for Sessions for the annual OpenFabrics Alliance Workshop 2019. The event takes place March 19-21 in Austin, Texas. "The Workshop places a high value on collaboration and communication among participants. In keeping with the theme of collaboration, proposals for Birds of a Feather sessions and Panels are particularly encouraged." The post Call For Participation: OpenFabrics Workshop appeared first on insideHPC.

|

|

by staff on (#42KV6)

Today TYAN announced plans to showcase a full line of HPC, storage, and cloud computing server platforms at SC18 next week in Dallas. "Customers in the datacenter to the enterprise are facing the challenge to get more value out of enormous amounts of data. The demand is pushing to move data faster, store data more and analyze data accurately," said Danny Hsu, Vice President of MiTAC Computing Technology Corporation's TYAN Business Unit. "TYAN’s leading portfolio of HPC, storage and cloud server platforms are based on the Intel Xeon Scalable processors and are designed to help our customers move, store and process massive amounts of data efficiently." The post TYAN to Showcase x86 HPC Solutions at SC18 appeared first on insideHPC.

|

|

by staff on (#42KNF)

Today Rescale announced that the company is now delivering secure HPC in the Cloud to the public sector. "In our platform development we have taken several steps towards protecting customers through the security of our platform which is why we are proud to be the first HPC platform solution in the industry to be ITAR and EAR compliant. Additionally, we are the only HPC platform today that is both independently SOC 2 Type 2 attested and HIPAA compliant." The post Rescale Delivers Secure HPC in the Cloud for the Public Sector appeared first on insideHPC.

|

|

by staff on (#42KGX)

We are pleased to report that the Lenovo AI Challenge has chosen outstanding researchers in the Machine Learning arena to receive an all-expenses-paid trip to SC18. "The winning researchers will have the opportunity to share their work with the world through a presentation in the Lenovo SC18 booth and videos of their presentations that will live forever." The post Lenovo 2018 AI Challenge brings best and brightest to SC18 appeared first on insideHPC.

|

|

by staff on (#42KCW)

Today CPC (Colder Products Company) introduced its new PLQ2 high-performance thermoplastic connector. "PLQ Series QDs are among the highest-performing engineered polymer connectors for liquid cooling applications available. They vastly reduce concerns around plastic QD durability and performance," said Elizabeth Langer, senior design engineer, thermal management. "HPC and data center specifiers and operators can use these plastic QDs with confidence." The post CPC to Showcase New QD Connectors for HPC Liquid Cooling at SC18 appeared first on insideHPC.

|

|

by staff on (#42GYF)

Today Asetek announced plans to showcase its latest liquid cooling technologies at SC18 in Dallas next week. Also on display will be Intel Compute Modules that include Asetek direct-to-chip (D2C) liquid cooling technology, validated and factory installed by Intel. "Now enterprises and hyperscalers have a choice of exploiting the plug & play convenience of our high-performance liquid assisted air cooling solution – InRackLAAC – or the extreme performance, quality and reliability of our full-blown liquid cooling solution – InRackCDU." The post Asetek to Showcase Liquid Cooling Technology for AI and HPC at SC18 appeared first on insideHPC.

|

|

by staff on (#42GRZ)

"In the modern world of genomics where analysis of 10’s of thousands of genomes is required for research, the cost per genome and the number of genomes per time are critical parameters. Parabricks adaption of the GATK4 Best Practice workflows running seamlessly on SkyScale’s Accelerated Cloud provides unparalleled price and throughput efficiency to help unlock the power of the human genome." The post Parabricks and SkyScale Raise the Performance Bar for Genomic Analysis appeared first on insideHPC.

|

|

by staff on (#42GYH)

Today Spectra Logic announced the industry’s most advanced tape technology, the IBM TS1160, for use in Spectra’s enterprise-class tape libraries. Spectra’s TFinity ExaScale, T950, T950v and T380 Tape Libraries will support the new tape drive and media. When fully populated with the TS1160 tape drives and media, a TFinity ExaScale will store and allow access to more than 2 exabytes (2,000 petabytes) of data, making Spectra’s TFinity ExaScale Tape Library the largest single data storage machine in the world. The post Spectra Logic Unveils World’s Largest Data Storage Machine with 2 Exabytes Capacity appeared first on insideHPC.

|

|

by staff on (#42GYK)

Today the UberCloud announced the availability of UberCloud consulting services in the Microsoft Azure Marketplace, an online store providing applications and services for use on Microsoft Azure. UberCloud customers can now take advantage of the scalability, high availability, and security of Azure, with streamlined deployment and management. "UberCloud’s HPC software containers provide engineers with seamless and immediate access to the cloud," said Wolfgang Gentzsch, President of TheUberCloud Inc. The post UberCloud Now Available in Microsoft Azure Marketplace appeared first on insideHPC.

|

|

by staff on (#42GS0)

Today Atos announced a deal to deploy a BullSequana X1310 Arm-based supercomputer to CEA in France. The machine will include the new Marvell ThunderX2 64-bit processors. "The availability of this new model is fully in-line with our policy of technological openness and our support for the European exascale supercomputer effort," said Sophie Proust Houssiaux, Head of Big Data and Security Research and Development at Atos. The post ARM Powers Atos Supercomputer for CEA in France appeared first on insideHPC.

|

|

by Rich Brueckner on (#42FKZ)

The Beowulf community will be featured prominently in the SC 30th Anniversary Exhibit at SC18 in Dallas next week. Beowulf is a methodology that uses open software to connect a local cluster of commodity-grade hardware into a single processing element. What began as a disruptive idea in 1993 has fundamentally changed the trajectory of supercomputing, and its influence has filtered down into nearly every aspect of High Performance Computing today. The post Beowulf Bash Returns to Dallas for SC18 with Live Cluster Build appeared first on insideHPC.

|

|

by staff on (#42F0W)

Today HPC Cloud provider R Systems announced plans to showcase its flexible High Performance Computing solutions at the annual NAFEMS (for engineering analysis & simulation in the automotive industry) conference Nov. 8, 2018 in Troy, Mich. Attendees can learn more about how automotive organizations are using new methods to access HPC resources such as cloud computing (public, private and hybrid) as well as dedicated and managed HPC resources and services by visiting the R Systems booth or by attending the IndyCar case study NAFEMS session. The post R Systems Powers Parallel Works in the Cloud at NAFEMS Engineering Event appeared first on insideHPC.

|

|

by staff on (#42EJQ)

Today the Gauss Centre for Supercomputing (GCS) in Germany awarded 816.3 million core hours as part of the organization’s 20th Call for Large-Scale Projects. The computing time grants support national research activities from the fields of Computational and Scientific Engineering (351.3 million core hours), Astrophysics (247.5 million core hours), and High Energy Physics (217.5 million core hours). The post GCS in Germany Grants 816 Million Core Hours to Science appeared first on insideHPC.
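
As a quick consistency check, the three per-field grants quoted above can be summed and compared with the stated total. This is only an illustrative sketch: the dictionary and function names are made up for the example, and the percentage shares it computes are derived values that do not appear in the announcement.

```python
# Core-hour grants by field, in millions, as quoted in the item above.
GRANTS_MILLION_CORE_HOURS = {
    "Computational and Scientific Engineering": 351.3,
    "Astrophysics": 247.5,
    "High Energy Physics": 217.5,
}


def total_grant(grants: dict) -> float:
    """Sum the grants, rounded to one decimal to absorb floating-point noise."""
    return round(sum(grants.values()), 1)


def shares(grants: dict) -> dict:
    """Each field's share of the total, in percent."""
    total = sum(grants.values())
    return {field: 100 * hours / total for field, hours in grants.items()}


if __name__ == "__main__":
    print(total_grant(GRANTS_MILLION_CORE_HOURS))
    for field, pct in shares(GRANTS_MILLION_CORE_HOURS).items():
        print(f"{field}: {pct:.1f}%")
```

The per-field figures add up to the 816.3 million core hours given in the item.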

|