|

by staff on (#2S1NR)

Today the Ohio Supercomputer Center announced that it has run the largest-scale calculation in the Center’s history. Scientel IT Corp used 16,800 cores of the Owens Cluster on May 24 to test database software optimized to run on supercomputer systems. The seamless run created 1.25 Terabytes of synthetic data. The post Ohio Supercomputer Center runs Biggest Calculation Ever appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence


| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-04 00:15 |

|

by Rich Brueckner on (#2S1KF)

In this video from GTC 2017, Scot Schultz from Mellanox describes how high performance InfiniBand is powering new capabilities for Machine Learning with RDMA. "Mellanox Solutions accelerate many of the world’s leading artificial intelligence and machine learning platforms. Mellanox solutions enable companies and organizations such as Baidu, Facebook, JD.com, NVIDIA, PayPal, Tencent, Yahoo and many more to leverage machine learning platforms to enhance their competitive advantage." The post How InfiniBand is Powering new capabilities for Machine Learning with RDMA appeared first on insideHPC.

|

|

by staff on (#2RY7G)

Today Intel announced that it has been selected by DARPA to collaborate on the development of a powerful new data-handling and computing platform that will leverage machine learning and other artificial intelligence (AI) techniques. “By mid-2021, the goal of HIVE is to provide a 16-node demonstration platform showcasing 1,000x performance-per-watt improvement over today’s best-in-class hardware and software for graph analytics workloads,” said Dhiraj Mallick, vice president of the Data Center Group and general manager of the Innovation Pathfinding and Architecture Group at Intel. “Intel’s interest and focus in the area may lead to earlier commercial products featuring components of this pathfinding technology much sooner.” The post Intel to Develop New Machine Learning and AI Platform for DARPA appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RXQN)

Last week at the Computex conference, Nvidia launched a partner program with hyperscale vendors Foxconn, Inventec, Quanta and Wistron to more rapidly meet the demands for AI cloud computing. Through the program, NVIDIA is providing each partner with early access to the NVIDIA HGX reference architecture, NVIDIA GPU computing technologies, and design guidelines. HGX is the same […] The post Nvidia Partners with Hyperscale Vendors for HGX-1 AI Reference Platform appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RXJJ)

Les Ottolenghi from Caesars Entertainment presented this keynote at the PBS Works Users Group meeting in Las Vegas. "Caesars is focused on building loyalty and value with its guests through a unique combination of great service, excellent products, unsurpassed distribution, operational excellence and technology leadership." The post High Performance Computing: A Business Platform at Caesars Entertainment appeared first on insideHPC.

|

|

by staff on (#2RXFK)

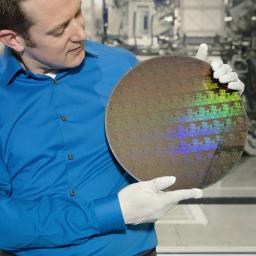

Today IBM announced it has developed an industry-first process with its research partners to build silicon nanosheet transistors that will enable 5 nanometer chips. The details of the process will be presented at the 2017 Symposia on VLSI Technology and Circuits conference in Kyoto, Japan. The post IBM Research Alliance Paves the way for 5nm Technology appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RXDH)

In this Radio Free HPC podcast, Rich gives us the lowdown on his recent tour of the SuperNAP datacenter in Las Vegas. Run by Switch, the campus has up to 2.4 million sq ft of Tier IV Gold data center space with 315 Megawatts of datacenter capacity. The post Radio Free HPC Tours the 100 Megawatt SuperNAP Datacenter appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RTS9)

In this special guest feature, Bill Mannel from Hewlett Packard Enterprise writes that this week's HPE Discover meeting in Las Vegas will bring together leaders from across the HPC community to collaborate, share, and investigate the impact of IT modernization, big data analytics, and AI. "Organizations across all industries must adopt solutions that allow them to anticipate and pursue future innovation. HPE is striving to be your best strategic digital transformation partner." The post Accelerating Innovation with HPC and AI at HPE Discover appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RTNP)

In this podcast, Sumit Gupta from IBM describes the company's new PowerAI deep learning software distribution on Power Systems. PowerAI attacks the major challenges facing data scientists and developers by simplifying the development experience with tools and data preparation while also dramatically reducing the time required for AI system training from weeks to hours. The post Podcast: Sumit Gupta on IBM’s New PowerAI Software for Data Scientists appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RR4S)

Hyperion Research, the new name for the former IDC HPC group, today announced that it will hold its Annual HPC Market Update Meeting at ISC 2017 in Frankfurt, Germany. The session will take place from 4pm to 5pm on Monday, June 19 in the Panorama 1 room at the Messe Frankfurt. The post Hyperion Research to hold Annual HPC Market Update Meeting at ISC 2017 appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RR3D)

Dale Brantley presented this talk at the PBS Works User Group meeting. "Panasas storage solutions drive industry and research innovation by accelerating workflows and simplifying data management. Our ActiveStor appliances leverage the patented PanFS storage operating system and DirectFlow protocol to deliver performance and reliability at scale from an appliance that is as easy to manage as it is fast to deploy. Panasas storage is optimized for the most demanding workloads in life sciences, manufacturing, media and entertainment, energy, government as well as education environments, and has been deployed in more than 50 countries worldwide." The post Overview of Panasas Storage for HPC & Big Data appeared first on insideHPC.

|

|

by staff on (#2RMSN)

Today Seagate Government Solutions announced that Carahsoft has become an approved distributor of the company's Secure Self-Encrypting Drives (SEDs). Designed to help Protect Data At Rest (DAR), Seagate SEDs are used to mitigate security risks associated with today’s mobile workforce. “Seagate SEDs are used to protect Data at Rest and reduce IT drive retirement costs—two key goals of every government agency,” said Craig P. Abod, Carahsoft president. “With Seagate in our portfolio, we are now able to offer our government customers and reseller partners the widest selection of FIPS 140-2 certified SEDs to prevent the loss and theft of sensitive data and achieve regulatory compliance.” The post Seagate Self-Encrypting Drives now available through Carahsoft appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RMP4)

In this video from the Dell EMC HPC Community meeting, Armughan Ahmad describes how the company is delivering world-class HPC solutions together with its partners and customers. "I lead a global team at Dell EMC that is focused on helping our customers and partners navigate and succeed in the rapidly-evolving world of digital transformation. We want to help our customers deliver industry specific outcomes that are enabled through ON / OFF premise workload focused solutions that lower cost of traditional IT allowing them to invest in a new digital ready business models." The post Armughan Ahmad on how Dell EMC is Driving High Performance Computing appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RMKX)

The MVAPICH User Group Meeting (MUG) has issued its Call for Presentations. As the annual gathering of MVAPICH2 users, researchers, developers, and system administrators, the MUG event includes Keynote Talks, Invited Tutorials, Invited Talks, Contributed Presentations, Open MIC session, and hands-on sessions. The event takes place August 14-16, 2017 in Columbus, Ohio. The post Call for Presentations: MVAPICH User Group Meeting (MUG) appeared first on insideHPC.

|

|

by staff on (#2RMFY)

In this video from the PBS Works User Group 2017, Rich Brueckner from insideHPC moderates a vendor panel on The Changing Landscape of HPC. "When it comes down to it, the HPC community depends on a healthy ecosystem. In this panel discussion, we will explore emerging technology trends that are reshaping the way high performance computing is delivered to the end user." The post Vendor Panel: The Changing Landscape of HPC appeared first on insideHPC.

|

|

by staff on (#2RM92)

The Intel Omni-Path Architecture is the next-generation fabric for high-performance computing. In this feature, we will explore what makes a great HPC fabric and the workings behind Intel Omni-Path Architecture. "What makes a great HPC fabric? Focusing on improving and enhancing application performance with fast throughput and low latency." The post Intel Omni-Path Architecture: A Focus on Application Performance appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RGR9)

In this video from the Dell EMC HPC Community meeting, Tim Carroll from Cycle Computing describes how the company makes it easier to move big HPC workloads to the Cloud with the help of Dell EMC. "Dell EMC has embraced the notion that hybrid environments of internal and external resources will be the optimal path for customers." The post Cycle Computing’s Tim Carroll on how Dell EMC Powers Cloud HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RGMT)

"The successful conclusion of this event provides assurance to the FC SAN community that the draft version of the FC-NVMe standard specification combined with the NVMe fabric specifications meets the demanding performance and availability requirements of flash and NVMe storage,†said Mark Jones, president and chairman of the board, FCIA, and director, Technical Marketing and Performance, Broadcom Limited and FC-NVMe plugfest participant. “This event was also notable as providing the first multi-vendor interoperability demonstrating sustained low latency of Gen 6 32GFC port and fabric concurrency of FC-NVMe and FC, highlighting the adaptive architecture of FC that has more than 50 million installed ports in operation in the world’s leading datacenters.â€The post Plugfest Demonstrates Fibre Channel Capabilities for NVMe Storage appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RGJ4)

In this video from GTC 2017, Dr. Rene Meyer from AMAX describes the company's innovative virtualization solutions for GPU computing. "What we are showcasing here is a very interesting solution—a hardware/software solution. We not only present the hardware, but we put a software layer on top, which allows you to virtualize GPUs in those machines." The post AMAX Showcases Virtualization Solutions for GPUs at GTC 2017 appeared first on insideHPC.

|

|

by MichaelS on (#2RGEB)

"With a wide range of Intel Xeon processors available, a truly immersive video experience can be delivered to a diverse set of end users who enjoy watching a concert or sporting event from wherever they may be located. While not an immersive VR experience, immersive video where the user has control over the viewing angle is a very important technology that can deliver exciting content to many consumers."The post Jump Start your Immersive Video Experiences appeared first on insideHPC.

|

|

by staff on (#2RGCA)

Today UT-Battelle announced that Thomas Zacharia, who helped build Oak Ridge National Laboratory into a global supercomputing power, has been selected as the laboratory’s next director. “Thomas has a compelling vision for the future of ORNL that is directly aligned with the U.S. Department of Energy’s strategic priorities,” said Joe DiPietro, chair of the UT-Battelle Board of Governors and president of the University of Tennessee. The post Thomas Zacharia named Director of Oak Ridge National Lab appeared first on insideHPC.

|

|

by staff on (#2RCXM)

In this special guest post, Professor Jack Dongarra sits down with Mike Bernhardt from ECP to discuss the role of Dongarra’s team as they tackle several ECP-funded software development projects. “What we’re planning with ECP is to take the algorithms and the problems that are tackled with LAPACK and rearrange, rework, and reimplement the algorithms so they run efficiently across exascale-based systems.” The post Jack Dongarra on ECP-funded Software Projects for Exascale appeared first on insideHPC.

|

|

by staff on (#2RCRG)

The importance of supercomputing to local and national economic prosperity has been highlighted by a recent study, which reported the Blue Waters project to be worth more than $1.08 billion to the Illinois economy. The study was published by the National Center for Supercomputing Applications at the University of Illinois at Urbana-Champaign. The post NCSA Blue Waters Report Shows Economic Benefits of HPC appeared first on insideHPC.

|

|

by staff on (#2RBYB)

ISC 2017 may be just around the corner, but the conference team is already busy planning the show for next year. Today they announced that Prof Horst Simon will be the ISC 2018 program chair. "ISC 2018 topics will embrace a range of subject matter critical to the development of the high performance computing field, which, in return, impacts the quality of human life." The post Horst Simon to be Program Chair for ISC 2018 appeared first on insideHPC.

|

|

by Rich Brueckner on (#2RBX0)

Jim Glidewell from Boeing presented this talk at the PBS Works User Group. "There are multiple elements to providing an effective and efficient HPC service. This presentation will share some of our strategies for extracting maximal value from our HPC hardware and providing a service that meets the needs of our engineering customers." The post Video: Optimizing HPC Service Delivery at Boeing appeared first on insideHPC.

|

|

by staff on (#2RBNN)

In the past 5 years, GPUs have become even more powerful, so rendering tasks can be completed faster than before. Katie (Garrison) Rivera, of One Stop Systems, explains how HPC can act as a service for high performance video rendering. The post HPC as a Service for High Performance Video Rendering appeared first on insideHPC.

|

|

by staff on (#2R898)

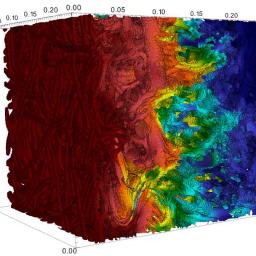

Industry is collaborating with researchers at LBNL to significantly reduce paper production costs and increase energy efficiencies. “The major purpose is to leverage our advanced simulation capabilities, high performance computing resources and industry paper press data to help develop integrated models to accurately simulate the water papering process.” The post Supercomputing More Efficient Paper Production with HPC4MFG appeared first on insideHPC.

|

|

by staff on (#2R876)

Twenty Ohio high school students were selected to attend the Ohio Supercomputer Center’s 28th annual Summer Institute, June 4-16 at Ohio State University. These academically gifted students will investigate complex science and engineering problems while discovering the many career opportunities in science, technology, engineering and mathematics (STEM) fields. “The Summer Institute allows students to gain a better understanding of what scientists and engineers do,” Guilfoos said. “SI gives students real-world knowledge and experience that they can use for the rest of their lives.” The post Ohio Supercomputer Center to Boost STEM Education at annual Summer Institute appeared first on insideHPC.

|

|

by Rich Brueckner on (#2R803)

In this podcast, Rich notes that recent reports on the Aurora supercomputer were incorrect. According to Rick Borchelt from the DoE: “On the record, Aurora contract is not cancelled.” Before that, we follow Henry on an unprecedented shopping spree at Best Buy. The post In Search Of: Radio Free HPC on the Hunt for the Aurora Supercomputer appeared first on insideHPC.

|

|

by Rich Brueckner on (#2R7TT)

In this video from the Dell EMC HPC Community meeting, Adnan Khaleel, Global Strategist for HPC at Dell, describes how the company works with partners to deliver HPC in the Cloud. "By tapping into on-demand cloud resources as needed, your organization can access high-end computing power without the additional capital investment of building and maintaining your own HPC cluster." The post Adnan Khaleel on how Dell EMC Delivers HPC in the Cloud appeared first on insideHPC.

|

|

by staff on (#2R7RH)

Wattages are rising so high that cooling the nodes containing the highest-performance chips used in HPC leaves little choice other than liquid cooling to maintain reasonable rack densities. Asetek explores how rising wattage trends are pushing the HPC industry toward more versatile liquid cooling options. The post Increase in Wattage Trends Make Versatile Liquid Cooling the New Norm appeared first on insideHPC.

|

|

by Rich Brueckner on (#2R4S2)

"In a real-time challenge, 12 teams of six undergraduate students will build a small cluster of their own design on the ISC exhibit floor and race to demonstrate the greatest performance across a series of benchmarks and applications. The students will have a unique opportunity to learn, experience and demonstrate how high-performance computing influence our world and day-to-day learning."The post Video: Previewing the ISC 2017 Student Cluster Competition appeared first on insideHPC.

|

|

by Rich Brueckner on (#2R4PV)

Today Inventec in Taiwan announced Baymax, a new server platform optimized for Cloud compute, high-performance cloud storage and Big Data applications based on Cavium's second-generation 64-bit ARMv8 ThunderX2 processors. "Inventec's success as world's largest server ODM has been based on our compelling designs and manufacturing expertise and our ability to deliver leading edge cost effective server platforms to world's largest mega scale datacenters," said Evan Chien, Senior Director of Inventec Server Business Unit 6. "Earlier this year Inventec's customers requested platforms based on Cavium's ThunderX2 ARMv8 processors, and the new Baymax platform is the first platform being delivered." The post Cavium ThunderX2 Processors Power new Baymax HyperScale Server Platforms appeared first on insideHPC.

|

|

by dangutierrez2 on (#2R49B)

We'll start by providing a handy five-step enterprise AI strategy designed to ensure your early AI deployment projects are a success. This article is part of a special insideHPC report that explores trends in machine learning and deep learning. The post What Does it Take to Get Started with AI? appeared first on insideHPC.

|

|

by Rich Brueckner on (#2R1J7)

"What is so new about the container environment that a new class of storage software is emerging to address these use cases? And can container orchestration systems themselves be part of the solution? As is often the case in storage, metadata matters here. We are implementing in the open source OpenEBS.io some approaches that are in some regards inspired by ZFS to enable much more efficient scale out block storage for containers that itself is containerized. The goal is to enable storage to be treated in many regards as just another application while, of course, also providing storage services to stateful applications in the environment."The post Learning from ZFS to Scale Storage on and under Containers appeared first on insideHPC.

|

|

by staff on (#2R1FT)

"In recent years there has been increasing concern about the reproducibility of scientific results. Because scientific research represents a major public investment and is the basis for many decisions that we make in medicine and society, it is essential that we can trust the results. Our goal is to provide researchers with tools to do better science. Our starting point is in the field of neuroimaging, because that’s the domain where our expertise lies."The post RCE Podcast Looks at Reproducibility of Scientific Results appeared first on insideHPC.

|

|

by Rich Brueckner on (#2QYST)

Ellen Salmon from NASA gave this talk at the 2017 MSST conference. "This talk will describe recent developments at the NASA Center for Climate Simulation, which is funded by NASA’s Science Mission Directorate, and supports the specialized data storage and computational needs of weather, ocean, and climate researchers, as well as astrophysicists, heliophysicists, and planetary scientists." The post Evolving Storage and Cyber Infrastructure at the NASA Center for Climate Simulation appeared first on insideHPC.

|

|

by Rich Brueckner on (#2QYQZ)

Oracle in Seattle is seeking a Cloud Performance Architect in our Job of the Week. "As a Cloud Performance Architect, you will be a leading contributor in improving performance of Oracle’s latest Cloud Services Technologies. You will take an active role in the definition and evolution of standard practices and procedures. Additionally, you will be responsible for defining and developing software for tasks associated with the developing, designing and debugging of software applications or operating systems. If you have a passion for improving and creating high performance software products, this is the place where you can make a difference." The post Job of the Week: Cloud Performance Architect at Oracle in Seattle appeared first on insideHPC.

|

|

by Rich Brueckner on (#2QVE5)

The ExaComm 2017 Workshop at ISC High Performance has posted its Full Agenda. As the Third International Workshop on Communication Architectures for HPC, Big Data, Deep Learning and Clouds at Extreme Scale, the one day workshop takes place at the Frankfurt Marriott Hotel on Thursday, June 22. "The objectives of this workshop will be to share the experiences of the members of this community and to learn the opportunities and challenges in the design trends for exascale communication architectures." The post ExaComm 2017 Workshop at ISC High Performance posts Full Agenda appeared first on insideHPC.

|

|

by staff on (#2QVB1)

Recently, the Massachusetts Open Cloud (MOC) adopted Atmosphere as a means of making it easier for academics to access cloud resources. MOC is a public computational cloud based upon the Open Cloud Exchange model in which many stakeholders participate in the cloud’s development and operation. The project caters to a varied community of users, including academic entities and industrial partners, and those who require computational resources as well as groups providing such resources. The post CyVerse’s Atmosphere Platform comes to Massachusetts Open Cloud appeared first on insideHPC.

|

|

by staff on (#2QV99)

The worldwide iRODS community will gather June 13 – 15 for their first User Group Meeting to be held at Utrecht University in The Netherlands. Along with use cases and presentations by iRODS users from at least seven countries, the meeting will offer a glimpse at new technologies that will soon be available alongside iRODS 4.2. The post iRODS User Group Meeting to Preview New Capabilities appeared first on insideHPC.

|

|

by Rich Brueckner on (#2QV5G)

In this video from the Dell EMC HPC Community meeting in Austin, Thomas Lippert from the Jülich Supercomputing Centre describes their pending 5 Petaflop Booster system. “This will be the first-ever demonstration in a production environment of the Cluster-Booster concept, pioneered in DEEP and DEEP-ER at prototype-level, and a considerable step towards the implementation of JSC’s modular supercomputing concept,” explains Prof. Thomas Lippert, Director of the Jülich Supercomputing Centre. The post Video: 5 Petaflop Booster System from Dell EMC coming to Jülich appeared first on insideHPC.

|

|

by Rich Brueckner on (#2QQJ2)

Rob Ober from Nvidia describes the company's new HGX-1 reference platform for GPU computing. "Powered by NVIDIA Tesla GPUs and NVIDIA NVLink high-speed interconnect technology; the HGX-1 comes as AI workloads—from autonomous driving and personalized healthcare to superhuman voice recognition—are taking off in the cloud." The post The New Nvidia HGX-1: GPU Power for Machine Learning at Hyperscale appeared first on insideHPC.

|

|

by staff on (#2QQFQ)

Today the OpenMP ARB announced the appointment of Duncan Poole and Kathryn O’Brien to its Board of Directors. As industry veterans, they bring a wealth of experience to the OpenMP ARB. The post OpenMP ARB Appoints Duncan Poole and Kathryn O’Brien to its Board appeared first on insideHPC.

|

|

by Richard Friedman on (#2QQCD)

OpenMP is a good example of how hardware and software vendors, researchers, and academia, volunteering to work together, can successfully design a standard that benefits the entire developer community. Today, most software vendors track OpenMP advances closely and have implemented the latest API features in their compilers and tools. With OpenMP, application portability is assured across the latest multicore systems, including Intel Xeon Phi processors. The post The OpenMP API Celebrates 20 Years of Success appeared first on insideHPC.

|

|

by staff on (#2QQAZ)

Today DDN announced that large commercial machine learning programs in manufacturing, autonomous vehicles, smart cities, medical research and natural-language processing are overcoming production scaling challenges with DDN’s large-scale, high-performance storage solutions. “The high performance and flexible sizing of DDN systems make them ideal for large-scale machine learning architectures,” said Joel Zysman, director of advanced computing at the Center for Computational Science at the University of Miami. “With DDN, we can manage all our different applications from one centrally located storage array, which gives us both the speed we need and the ability to share information effectively. Plus, for our industrial partnership projects that each rely on massive amounts of instrument data in areas like smart cities and autonomous vehicles, DDN enables us to do mass transactions on a scale never before deemed possible. These levels of speed and capacity are capabilities that other providers simply can’t match.” The post How DDN Technology Speeds Machine Learning at Scale appeared first on insideHPC.

|

|

by Rich Brueckner on (#2QQ7T)

In this video from the 2017 GPU Technology Conference, Jason Chen from Exxact Corporation describes the company's innovative GPU solutions for HPC & Deep Learning workloads. "Exxact Corporation is a global, value-added supplier of computing products and solutions. We provide services in IT distribution and system integration for HPC, Big Data, Cloud, and AV applications and markets, while servicing many of world’s top national labs, research universities, online retailers, and Fortune 100 & 1000 companies." The post Exxact Powers HPC & Deep Learning at the GPU Technology Conference appeared first on insideHPC.

|

|

by staff on (#2QKVZ)

Today the RoCE Initiative at the InfiniBand Trade Association announced the availability of the RoCE Product Directory. The new online resource is intended to inform CIOs and enterprise data center architects about their options for deploying RDMA over Converged Ethernet (RoCE) technology within their Ethernet infrastructure. The post RoCE Initiative Launches Online Product Directory appeared first on insideHPC.

|

|

by Rich Brueckner on (#2QKDT)

In this video from the MSST 2017 Mass Storage Conference, Andrew Klein from Backblaze shares the results from their latest disk drive reliability study. "For the last four years, Backblaze has collected and reported on the failure rates and SMART stats of the hard drives in use in our data centers. Currently we have over 80,000 drives ranging from 3 to 8TB. Let's take a look at what we learned over the years about hard drives, including failure rates, by model, and the ability to predict drive failure before it happens." The post Behind the Curtain of Backblaze Hard Drive Stats appeared first on insideHPC.

|

|

by Rich Brueckner on (#2QK9X)

In this video from GTC 2017, Rodolfo Campos from Supermicro describes the company's innovative solutions for GPU-accelerated computing. "Leveraging our extensive portfolio of GPU solutions, customers can massively scale their compute clusters to accelerate their most demanding deep learning, scientific and hyperscale workloads with fastest time-to-results, while achieving maximum performance per watt, per square foot, and per dollar." The post Supermicro Showcases Powerful GPU Solutions at GTC 2017 appeared first on insideHPC.

|