|

by Rich Brueckner on (#2P0B2)

Over at TACC, Aaron Dubrow writes that researchers are using TACC supercomputers to improve, plan, and understand the basic science of radiation therapy. "The science of calculating and assessing the radiation dose received by the human body is known as dosimetry – and here, as in many areas of science, advanced computing plays an important role." The post Supercomputing High Energy Cancer Treatments appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Field | Value |
|---|---|
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-04 03:45 |

|

by Rich Brueckner on (#2P096)

Bob Wisniewski from Intel presents: OpenHPC: A Cohesive and Comprehensive System Software Stack. "OpenHPC is a collaborative, community effort that initiated from a desire to aggregate a number of common ingredients required to deploy and manage High Performance Computing (HPC) Linux clusters including provisioning tools, resource management, I/O clients, development tools, and a variety of scientific libraries." The post OpenHPC: A Comprehensive System Software Stack appeared first on insideHPC.

|

|

by staff on (#2P07A)

Today the Living Computers: Museum + Labs added a pair of Cray supercomputers to its permanent collection. The Cray-1 supercomputer went on display at Living Computers this week and will be joined by the Cray-2 supercomputer later this year. Living Computers intends to recommission the Cray-2 and make it available to the public. “I honestly can’t overstate how important these two supercomputers are to computing history, and I am thrilled to be adding them to our collection,” says Lath Carlson, Executive Director of Living Computers. “Bringing these milestones back from the depths of storage has been an incredible journey, and we look forward to making them available to the public.” Living Computers will also host a private reception for the Cray User Group on May 9th to celebrate the new home of these Cray supercomputers. The post Living Computers Museum Adds Two Iconic Cray Systems appeared first on insideHPC.

|

|

by staff on (#2P03F)

Today Bright Computing announced the release of Bright Cluster Manager 8.0 and Bright OpenStack 8.0 with advanced, integrated solutions to improve ease of use and management of HPC and Big Data clusters as well as private and public cloud environments. “In our latest software release, we incorporated many new features that our users have requested,” said Martijn de Vries, Chief Technology Officer of Bright Computing. De Vries continues, “We’ve made significant improvements that provide greater ease-of-use for systems administrators as well as end-users when creating and managing their cluster and cloud environments. Our goal is to increase productivity to decrease the time to results.” The post Bright Cluster Manager 8.0 Release Sets New Standard for Automation appeared first on insideHPC.

|

|

by staff on (#2NZTY)

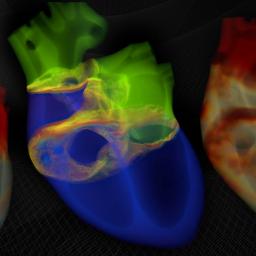

This week at the GPU Technology Conference, Nvidia and the Barcelona Supercomputing Center demonstrated an interactive visualization of a cardiac computational model that shows the potential of HPC-based simulation codes and GPU-accelerated clusters to simulate the human cardiovascular system. "This demonstration brings together Alya simulation code and NVIDIA IndeX scalable software to implement an in-situ visualization solution for electromechanical simulations of the BSC cardiac computational model. While Alya simulates the electromechanical cardiac propagation, NVIDIA IndeX is used for an immediate in-situ visualization. The in-situ visualization allows researchers to interact with the data on the fly, giving a better insight into the simulations." The post BSC and NVIDIA Showcase Interactive Simulation of Human Body appeared first on insideHPC.

|

|

by Rich Brueckner on (#2NWMD)

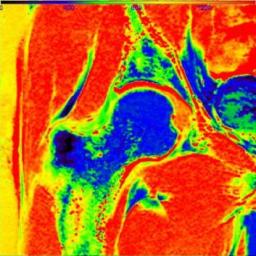

W. Joe Allen from TACC gave this talk at the HPC User Forum. "The Agave Platform brings the power of high-performance computing into the clinic," said William (Joe) Allen, a life science researcher for TACC and lead author on the paper. "This gives radiologists and other clinical staff the means to provide real-time quality control, precision medicine, and overall better care to the patient." The post Leveraging HPC for Real-Time Quantitative Magnetic Resonance Imaging appeared first on insideHPC.

|

|

by staff on (#2NWJT)

Today, DOE announced nearly $3.9 million for 13 projects designed to stimulate the use of high performance supercomputing in U.S. manufacturing. The Office of Energy Efficiency and Renewable Energy (EERE) Advanced Manufacturing Office's High Performance Computing for Manufacturing (HPC4Mfg) program enables innovation in U.S. manufacturing through the adoption of high performance computing to advance applied science and technology relevant to manufacturing. HPC4Mfg aims to increase the energy efficiency of manufacturing processes, advance energy technology, and reduce energy's impact on the environment through innovation. The post DOE to Fund HPC for Manufacturing appeared first on insideHPC.

|

|

by staff on (#2NWH7)

Today SkyScale announced the launch of its world-class, ultra-fast multi-GPU hardware platforms in the cloud, available for lease to customers desiring the fastest performance available as a service anywhere on the globe. “Employing OSS compute and flash storage systems gives SkyScale customers the overwhelming competitive advantage in the cloud they have been asking for,” said Tim Miller, President of SkyScale. “The systems we deploy at SkyScale in the cloud are identical to the hundreds of systems in the field and in the sky, trusted in the most rigorous defense, space, and medical deployments with cutting-edge, rock-solid performance, stability, and reliability. By making these systems accessible on a time-rental basis, developers have the advantage of using the most sophisticated systems available to run their algorithms without having to own them, and they can scale up or down as needs change. SkyScale is the only service that gives customers the opportunity to lease time on these costly systems, saving time-to-deployment and money.” The post SkyScale Announces “World’s Fastest Cloud Computing Service” appeared first on insideHPC.

|

|

by staff on (#2NW5V)

One Stop Systems announced plans to exhibit a wide array of its high-density GPU appliances at the GPU Technology Conference in San Jose. "One Stop Systems offers a wide variety of GPU appliances with different power density solutions to support a range of customer needs," said Steve Cooper, OSS CEO. "Customers can choose a solution that fits their rack space and budget while still ensuring they get the compute power they need for their application. OSS GPU Appliances allow for tremendous performance gains in many applications like deep learning, oil and gas exploration, financial calculations, and medical devices. As GPU technology continues to improve, OSS products are immediately able to accommodate the newest and most powerful GPUs." The post One Stop Systems Showcases High-Density GPU Appliances at GTC 2017 appeared first on insideHPC.

|

|

by staff on (#2NRE3)

Today Infervision introduced its innovative deep learning solution to help radiologists identify suspicious lesions and nodules in lung cancer patients faster than ever before. The Infervision AI platform is the world’s first to reshape the workflow of radiologists, and it is already showing dramatic results at several top hospitals in China. The post AI Technology from China Helps Radiologists Detect Lung Cancer appeared first on insideHPC.

|

|

by staff on (#2NRC8)

Today MapD Technologies released the MapD Core database to the open source community under the Apache 2 license, seeding a new generation of data applications. "Open source is sparking innovation for data science and analytics developers," said Greg Papadopoulos, venture partner at New Enterprise Associates (NEA). "An open-source GPU-powered SQL database will make entirely new applications possible, especially in machine learning where GPUs have had such an enormous impact. We're incredibly proud to partner with the MapD team as they take this pivotal step." The post MapD Open Sources High-Speed GPU-Powered Database appeared first on insideHPC.

|

|

by staff on (#2NR8Y)

Today IBM announced its development of Non-Volatile Memory Express (NVMe) solutions to provide clients the ability to significantly lower latencies in an effort to speed data to and from storage solutions and systems. "IBM's developers are re-tooling the end-to-end storage stack to support this new, faster interconnect protocol to boost the experience of everyone consuming the massive amounts of data now being perpetuated across cloud services, retail, banking, travel and other industries." The post IBM Supports New Faster Protocols for NVMe Flash Storage appeared first on insideHPC.

|

|

by Rich Brueckner on (#2NR5V)

In this podcast, the Radio Free HPC team reviews the results from the ASC17 Student Cluster Competition finals in Wuxi, China. "As the world's largest supercomputing competition, ASC17 received applications from 230 universities around the world, 20 of which got through to the final round held this week at the National Supercomputing Center in Wuxi after the qualifying rounds. During the final round, the university student teams were required to independently design a supercomputing system under the precondition of a limited 3000W power consumption." The post Radio Free HPC Reviews the ASC17 Student Cluster Competition appeared first on insideHPC.

|

|

by Rich Brueckner on (#2NR0R)

In this slidecast, Ronald P. Luijten from IBM Research in Zurich presents: DOME 64-bit μDataCenter. "I like to call it a datacenter in a shoebox. With the combination of power and energy efficiency, we believe the microserver will be of interest beyond the DOME project, particularly for cloud data centers and Big Data analytics applications." The post Leaping Forward in Energy Efficiency with the DOME 64-bit μDataCenter appeared first on insideHPC.

|

|

by Rich Brueckner on (#2NNB2)

"Focused on High Performance and Scientific Computing at Novartis Institutes for Biomedical Research (NIBR), in Basel, Switzerland, Nick Holway and his team provide HPC resources and services, including programming and consultancy for the innovative research organization. Supporting more than 6,000 scientists, physicians and business professionals from around the world focused on developing medicines and devices that can produce positive real-world outcomes for patients and healthcare providers, Nick also contributes expertise in bioinformatics, image processing and data science in support of the researchers and their works." The post Video: HPC at NIBR appeared first on insideHPC.

|

|

by Rich Brueckner on (#2NN9P)

The Naval Air Systems Command is seeking a Computer Scientist in our Job of the Week. The post Job of the Week: Computer Scientist at Naval Air Systems Command appeared first on insideHPC.

|

|

by Rich Brueckner on (#2NJGG)

"Big data analytics, machine learning and deep learning are among the most rapidly growing workloads in the data center. These workloads have the compute performance requirements of traditional technical computing or high performance computing, coupled with a much larger volume and velocity of data. Conventional data center architectures have not kept up with the needs for these workloads. To address these new client needs, IBM has adopted an innovative, open business model through its OpenPOWER initiative." The post Video: IBM Datacentric Servers & OpenPOWER appeared first on insideHPC.

|

|

by Rich Brueckner on (#2NJFE)

The 25th International Symposium on High Performance Interconnects (HotI 2017) has issued its Call for Papers. The event takes place August 29-30 at the Ericsson Campus in Santa Clara, California. "Hot Interconnects is the premier international forum for researchers and developers of state-of-the-art hardware and software architectures and implementations for interconnection networks of all scales, ranging from multi-core on-chip interconnects to those within systems, clusters, data centers, and clouds. This yearly conference is attended by leaders in industry and academia. The atmosphere provides for a wealth of opportunities to interact with individuals at the forefront of this field." The post Call for Papers: Hot Interconnects appeared first on insideHPC.

|

|

by staff on (#2NF9F)

Today SC17 announced that 16 teams will take part in the Student Cluster Competition. Hailing from across the U.S., as well as Asia and Europe, the student teams will race to build HPC clusters and run a full suite of applications in the space of just a few days. The post Sixteen Teams to Compete in SC17 Student Cluster Competition appeared first on insideHPC.

|

|

by staff on (#2NF48)

In this TACC Podcast, host Jorge Salazar interviews Xian-He Sun, Distinguished Professor of Computer Science at the Illinois Institute of Technology. Computer scientists working in his group are bridging the file system gap with a cross-platform Hadoop reader called PortHadoop, short for portable Hadoop. "We tested our PortHadoop-R strategy on Chameleon. In fact, the speedup is 15 times faster," said Xian-He Sun. "It's quite amazing." The post Podcast: PortHadoop Speeds Data Movement for Science appeared first on insideHPC.

|

|

by Rich Brueckner on (#2NF29)

"Hyperion Research (previously the HPC team at IDC) has launched a program to both collect this data and recognize noteworthy achievements using High Performance Computing (HPC) resources. We are interested in Innovation and/or ROI examples from today or dating back as far as 10 years. Please complete and submit a separate application form for each ROI / Innovation success story." The post Nominate Your Customers for the 2017 HPC Innovation Awards appeared first on insideHPC.

|

|

by Rich Brueckner on (#2NEYJ)

"The interconnect is going to be a key enabling technology for exascale systems. This is why one of the cornerstones of Bull’s exascale program is the development of our own new-generation interconnect. The Bull eXascale Interconnect or BXI introduces a paradigm shift in terms of performance, scalability, efficiency, reliability and quality of service for extreme workloads." The post Slidecast: BXI – Bull eXascale Interconnect appeared first on insideHPC.

|

|

by Rich Brueckner on (#2NBMJ)

Peter Braam is well known in the HPC community for his early work with Lustre and other projects like the SKA telescope Science Data Processor. As one of the featured speakers at the upcoming MSST Mass Storage Conference, Braam will describe how his startup, Campaign Storage, provides tools for massively parallel data movement between new low-cost, industry-standard campaign storage tiers and premium storage tiers for performance or availability. The post Interview: Peter Braam on How Campaign Storage Bridges the Small & Big, Fast & Slow appeared first on insideHPC.

|

|

by Rich Brueckner on (#2NBGZ)

Hewlett Packard Enterprise has posted its preliminary agenda for HP-CAST. As HPE's user group meeting for high performance computing, the event takes place June 16-17 in Frankfurt, just prior to ISC 2017. "The High Performance Consortium for Advanced Scientific and Technical (HP-CAST) computing users group works to increase the capabilities of Hewlett Packard Enterprise solutions for large-scale, scientific and technical computing. HP-CAST provides guidance to Hewlett Packard Enterprise on the essential development and support issues for such systems. HP-CAST meetings typically include corporate briefings and presentations by HPE executives and technical staff (under NDA), and discussions of customer issues related to high-performance technical computing." The post Agenda Posted for HP-CAST at ISC 2017 appeared first on insideHPC.

|

|

by Rich Brueckner on (#2NBDQ)

Martin Hilgeman from Dell gave this talk at the Switzerland HPC Conference. "With all the advances in massively parallel and multi-core computing with CPUs and accelerators it is often overlooked whether the computational work is being done in an efficient manner. This efficiency is largely being determined at the application level and therefore puts the responsibility of sustaining a certain performance trajectory into the hands of the user. This presentation shows the well-known laws of parallel performance from the perspective of a system builder." The post Trends in Systems and How to Get Efficient Performance appeared first on insideHPC.
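The abstract does not name the "well-known laws"; the two most often cited are Amdahl's law (fixed problem size) and Gustafson's law (problem size grows with processor count). Below is a minimal Python sketch of both, assuming a single parallel fraction `p` that stays constant as the processor count `n` changes; it is an illustration, not material from the talk.

```python
"""Amdahl's and Gustafson's laws: two classic ways to reason about parallel speedup."""


def amdahl_speedup(p: float, n: int) -> float:
    """Fixed-size speedup when a fraction p of the serial runtime parallelizes perfectly over n processors."""
    if not 0.0 <= p <= 1.0 or n < 1:
        raise ValueError("p must be in [0, 1] and n must be >= 1")
    # The serial part (1 - p) never shrinks; only the parallel part is divided by n.
    return 1.0 / ((1.0 - p) + p / n)


def gustafson_speedup(p: float, n: int) -> float:
    """Scaled speedup when the problem grows with n and p is the parallel fraction of the parallel run."""
    if not 0.0 <= p <= 1.0 or n < 1:
        raise ValueError("p must be in [0, 1] and n must be >= 1")
    return (1.0 - p) + p * n


if __name__ == "__main__":
    # Hypothetical application that is 95% parallel.
    for n in (1, 16, 256, 4096):
        print(f"n={n:5d}  Amdahl={amdahl_speedup(0.95, n):7.2f}  Gustafson={gustafson_speedup(0.95, n):9.2f}")
```

Under Amdahl's law the 95%-parallel example can never exceed 1 / (1 - 0.95) = 20x no matter how many processors are added, which is one way to see why the talk places so much weight on efficiency at the application level.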

|

|

by staff on (#2NBAG)

Today Fastdata.io announced it has raised a total of $1.5 million from NVIDIA and other investors. The company is introducing "the world’s fastest and most efficient stream processing software engine" to meet the critical and growing need for efficient, real-time big data processing. Fastdata.io will use the financing to invest in developing its product, marketing and talent acquisition. The post Nvidia Funds AI Startup Fastdata.io appeared first on insideHPC.

|

|

by staff on (#2NB8R)

The San Diego Supercomputer Center has been granted a supplemental award from the National Science Foundation to double the number of GPUs on its petascale-level Comet supercomputer. “This expansion is reflective of a wider adoption of GPUs throughout the scientific community, which is being driven in large part by the availability of community-developed applications that have been ported to and optimized for GPUs,” said SDSC Director Michael Norman, who is also the principal investigator for the Comet program. The post Comet Supercomputer Doubles Down on Nvidia Tesla P100 GPUs appeared first on insideHPC.

|

|

by MichaelS on (#2NB2R)

Machine learning is a hot topic for many industries and is showing tremendous promise to change how we use systems. From design and manufacturing to searching for cures for diseases, machine learning can be a great disrupter when implemented to take advantage of the latest processors. The post Intel Processors for Machine Learning appeared first on insideHPC.

|

|

by staff on (#2N7Y7)

Today One Stop Systems (OSS) announced the launch of SkyScale, a new company that provides HPC as a Service (HPCaaS). For years OSS has been designing and manufacturing the latest in high performance computing and storage systems. Now customers can lease time on these same systems, saving time and money. OSS systems are the distinguishing factor for SkyScale's HPCaaS offering. OSS was the first company to successfully produce a system that can operate sixteen of the latest NVIDIA Tesla GPU accelerators connected to a single server. These systems are employed today in deep learning applications and in a variety of industries including defense and oil and gas. The post One Stop Systems Announces SkyScale HPC as a Service appeared first on insideHPC.

|

|

by Rich Brueckner on (#2N79T)

As our newest Rock Star of HPC, DK Panda sat down with us to discuss his passion for teaching High Performance Computing. "During the last several years, HPC systems have been going through rapid changes to incorporate accelerators. The main software challenges for such systems have been to provide efficient support for programming models with high performance and high productivity. For NVIDIA-GPU based systems, seven years back, my team introduced a novel ‘CUDA-aware MPI’ concept. This paradigm allows complete freedom to application developers for not using CUDA calls to perform data movement." The post Rock Stars of HPC: DK Panda appeared first on insideHPC.

|

|

by Rich Brueckner on (#2N7G3)

Daniel Crawford from Virginia Tech gave this talk at the HPC User Forum. "The Molecular Sciences Software Institute serves as a nexus for science, education, and cooperation serving the worldwide community of computational molecular scientists – a broad field including biomolecular simulation, quantum chemistry, and materials science. The Institute will spur significant advances in software infrastructure, education, standards, and best-practices that are needed to enable the molecular science community to open new windows on the next generation of scientific Grand Challenges." The post Video: The Molecular Sciences Software Institute appeared first on insideHPC.

|

|

by staff on (#2N7E1)

Today NASA announced a code-speedup contest called the High Performance Fast Computing Challenge (HPFCC). The competition will reward qualified contenders who can manipulate the agency’s FUN3D design software so it runs ten to 10,000 times faster on the Pleiades supercomputer without any decrease in accuracy. “This is the ultimate ‘geek’ dream assignment,” said Doug Rohn, director of NASA’s Transformative Aeronautics Concepts Program (TACP). “Helping NASA speed up its software to help advance our aviation research is a win-win for all.” The post NASA Spins Up High Performance Computing Challenge appeared first on insideHPC.

|

|

by MichaelS on (#2N6Y2)

Cloud technologies are influencing HPC just as they are influencing the rest of enterprise IT. This is the second entry in an insideHPC series that explores the HPC transition to the cloud, and what your business needs to know about this evolution. This series, also available as a complete guide, covers cloud computing for HPC, industry examples, IaaS components, OpenStack fundamentals and more. The post Cloud Computing Continues to Influence HPC appeared first on insideHPC.

|

|

by staff on (#2N3S6)

At MSST 2017, Andrew Klein from Backblaze will present on what they’ve learned about hard drives over the years including failure rates by model, and the ability to predict drive failure before it happens. "Currently we have over 80,000 drives ranging from 3 to 8TB. Let's take a look at what we learned over the years about hard drives, including failure rates, by model, and the ability to predict drive failure before it happens." The post Backblaze to Report Latest Hard Drive Failure Stats at MSST 2017 appeared first on insideHPC.
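Comparing failure rates by model is only fair if each model is normalized by how long its drives were actually in service. Backblaze's published drive reports do this with an annualized failure rate computed from drive-days. A minimal Python sketch of that calculation, assuming the drive-days formulation; the model labels and counts below are invented for illustration and are not Backblaze data.

```python
"""Annualized failure rate (AFR) from drive-days, using made-up per-model numbers."""
from dataclasses import dataclass


@dataclass
class ModelStats:
    model: str       # hypothetical model label, not a real drive
    drive_days: int  # sum over all drives of the days each one was in service
    failures: int    # drives of this model that failed during the period


def annualized_failure_rate(drive_days: int, failures: int) -> float:
    """AFR in percent: failures per drive-year of operation, times 100."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365
    return 100.0 * failures / drive_years


if __name__ == "__main__":
    fleet = [
        ModelStats("model-A", drive_days=36_500, failures=10),
        ModelStats("model-B", drive_days=730_000, failures=30),
    ]
    for s in fleet:
        print(f"{s.model}: {annualized_failure_rate(s.drive_days, s.failures):.2f}% AFR")
```

Here model-B has three times as many failures as model-A but a much lower AFR (1.50% versus 10.00%), because its drives accumulated twenty times as many drive-days.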

|

|

by Rich Brueckner on (#2N3DH)

A software toolkit developed at Berkeley Lab to better understand supercomputer performance is now being used to boost application performance for researchers running codes at NERSC and other supercomputing facilities. "Since its initial development, what is now known as the Empirical Roofline Toolkit (ERT) has benefitted from contributions by several Berkeley Lab staff. Along the way, HPC users who write scientific applications for manycore systems have been able to apply the toolkit to their applications and see how changing parameters of their code can improve performance." The post Boosting Manycore Code Optimization Efforts with Roofline Technology appeared first on insideHPC.
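The toolkit's job is to measure a machine's empirical ceilings, such as peak FLOP rate and memory bandwidth, so a code's performance can be placed against them on a roofline plot. Below is a minimal Python sketch of the roofline bound itself, assuming the classic single-level model with one compute ceiling and one DRAM bandwidth ceiling. The machine numbers are hypothetical, and this is not ERT's own code.

```python
"""Classic roofline bound: attainable FLOP rate given compute and memory ceilings."""


def roofline_gflops(peak_gflops: float, mem_bw_gbs: float, intensity: float) -> float:
    """Attainable GFLOP/s for a kernel whose arithmetic intensity is in FLOPs per byte of DRAM traffic."""
    # Memory-bound kernels sit on the sloped roof (bandwidth * intensity);
    # compute-bound kernels hit the flat roof (peak FLOP rate).
    return min(peak_gflops, mem_bw_gbs * intensity)


def ridge_point(peak_gflops: float, mem_bw_gbs: float) -> float:
    """Arithmetic intensity (FLOPs/byte) at which a kernel stops being memory-bound."""
    return peak_gflops / mem_bw_gbs


if __name__ == "__main__":
    # Hypothetical ceilings; a tool like ERT would measure real ones empirically.
    peak, bw = 3000.0, 400.0
    print(f"ridge point: {ridge_point(peak, bw):.2f} FLOPs/byte")
    for name, ai in [("low-intensity kernel", 0.5), ("high-intensity kernel", 10.0)]:
        print(f"{name}: AI={ai} -> {roofline_gflops(peak, bw, ai):.0f} GFLOP/s attainable")
```

A kernel to the left of the ridge point (7.5 FLOPs/byte in this sketch) gains more from reducing memory traffic than from extra compute. Changing code parameters to move a kernel rightward on the plot is the kind of tuning the toolkit is meant to guide.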

|

|

by staff on (#2N39X)

Today Booz Allen Hamilton and Kaggle announced the winners of the third annual Data Science Bowl, a competition that harnesses the power of data science and crowdsourcing to tackle some of the world’s toughest problems. This year’s challenge brought together nearly 10,000 participants from across the world. Collectively they spent more than an estimated 150,000 hours and submitted nearly 18,000 algorithms, all aiming to help medical professionals detect lung cancer earlier and with better accuracy. The post Turning AI Against Cancer at the Data Science Bowl appeared first on insideHPC.

|

by Rich Brueckner on (#2N39Z)

Today the European PRACE initiative announced that Prof. Dr. Frauke Gräter from the University of Heidelberg is the winner of the PRACE Ada Lovelace Award for HPC. This is the second time PRACE has honored a female scientist with this award for her outstanding impact on HPC research, computational science, or service provision at a global level. The award will be presented at PRACEdays17, to be held May 16-18 in Barcelona. The post Frauke Gräter to receive 2nd PRACE Ada Lovelace Award for HPC appeared first on insideHPC.

|

by staff on (#2N35J)

Today DataSite announced it will install Motivair Corporation’s ChilledDoor Rack Cooling system to accommodate a high performance computing environment for a strategic customer. This initial engagement will officially launch an ongoing partnership between the two companies to deliver HPC services across DataSite’s facilities. “We were eager to accommodate our customers’ desire to deploy HPC clusters in DataSite facilities, but did not want to sacrifice operational efficiencies,” comments Rob Wilson, Executive Vice President for DataSite. “After an extensive search of the HPC cooling market, Motivair was selected as the ideal product that provided industry best cooling capabilities while meeting DataSite’s Sustainability commitments.” The post DataSite HPC Solution to use Motivair ChilledDoor Rack Systems appeared first on insideHPC.

|

|

by staff on (#2N0F8)

Today Cray announced a contract to deliver a Cray CS400 cluster supercomputer to the Laboratory Computing Resource Center (LCRC) at Argonne National Laboratory. The new Cray system will serve as the Center’s flagship cluster, and in continuing with LCRC’s theme of jazz-music inspired computer names, the Cray CS400 system is named “Bebop.” The post Argonne to Install 1.5 Petaflop Cray CS400 Cluster appeared first on insideHPC.

|

|

by Rich Brueckner on (#2N09E)

The SC17 housing reservation system is now open for business. The conference takes place Nov. 12-17 in Denver. The post Housing Registration Opens for SC17 in Denver appeared first on insideHPC.

|

|

by Rich Brueckner on (#2MZHH)

Today Rescale announced that hourly, on-demand licenses of the popular finite element analysis software LS-DYNA are now available in Europe on ScaleX Enterprise, Rescale’s enterprise cloud platform for big compute. The announcement builds on the growth of on-demand licensing in the United States, which accounts for 99% of LS-DYNA jobs on ScaleX in that country. DYNAmore, an LS-DYNA European distributor, will take care of orders and billing, while Rescale will deliver the software on its cloud platform. The post Rescale to Offer On-Demand LS-DYNA Licenses in Europe appeared first on insideHPC.

|

|

by Rich Brueckner on (#2MZFH)

Sibendu Som from Argonne presented this talk at the HPC User Forum. "Sibendu Som is a mechanical engineer and principal investigator for developing predictive spray and combustion modeling capabilities for compression ignition engines. With the aid of high-performance computing, Sibendu focuses on developing robust models which can improve the performance and emission characteristics of a variety of bio-derived fuels. Predictive simulation capability can provide significant insights on how to improve the efficiency and emissions for different bio-derived fuels of interest." The post HPC Accelerating Combustion Engine Design appeared first on insideHPC.

|

|

by Rich Brueckner on (#2MZAD)

Over at the Singularity Blog, Greg Kurtzer writes that he has created a new organization, SingularityWare, LLC. In partnership with RStor, the new company will be dedicated to further developing Singularity, supporting the associated open source community and growing the project. "In addition to continuing my leadership of Singularity (and the new LLC), I will be maintaining my association with Lawrence Berkeley National Laboratory, as a scientific advisor as well as continuing other efforts I am associated with (e.g. Warewulf and OpenHPC)." The post Greg Kurtzer of LBNL Launches SingularityWare, LLC appeared first on insideHPC.

|

|

by Beth Harlen on (#2MZ6Q)

From software developer at a small start-up in New Zealand, to Senior Program Manager at one of the largest multinational technology companies in the US, Karan Batta has led a career touched by HPC – even if he didn’t always realize it at the time. As the driving force behind the GPU Infrastructure vision, roadmap and deployment in Microsoft Azure, Karan Batta is a Rock Star of HPC. The post Rock Stars of HPC: Karan Batta appeared first on insideHPC.

|

|

by staff on (#2MWSM)

Early bird registration for ISC 2017 is ending soon. This is your last chance to enjoy a substantial discount on registration for the conference, which takes place June 18-22 in Frankfurt. The post Register Now for ISC 2017 at Early Bird Rates appeared first on insideHPC.

|

|

by Rich Brueckner on (#2MWQ3)

In this video, Paul Messina from the Exascale Computing Project describes recent progress towards the development of machines with 50x the application performance possible today. “The Exascale Computing Project (ECP) was established with the goals of maximizing the benefits of high-performance computing (HPC) for the United States and accelerating the development of a capable exascale computing ecosystem.” The post Interview: Paul Messina Update on the Exascale Computing Project (ECP) appeared first on insideHPC.

|

|

by Rich Brueckner on (#2MSNE)

Altair has posted the agenda for the PBS Works User Group, May 22-25 in Las Vegas. This four-day event (two days of user presentations and round-table discussions, surrounded by hands-on workshops) is the global user event of the year for PBS Professional and other PBS Works products. “This year we are excited to announce that we will be hosting a tour of the Switch data center facility on Tuesday afternoon.” The post Agenda Posted for PBS Works User Group in Las Vegas appeared first on insideHPC.

|

|

by Rich Brueckner on (#2MSM9)

"To date, most data-intensive HPC jobs in the government, academic and industrial sectors have involved the modeling and simulation of complex physical and quasi-physical systems. The systems range from product designs for cars, planes, golf clubs and pharmaceuticals, to subatomic particles, global weather and climate patterns, and the cosmos itself. But from the start of the supercomputer era in the 1960s — and even earlier —an important subset of HPC jobs has involved analytics — attempts to uncover useful information and patterns in the data itself." The post High Performance Data Analysis (HPDA): HPC – Big Data Convergence appeared first on insideHPC.

|

|

by Rich Brueckner on (#2MNRZ)

John Turner from ORNL presented this talk at the HPC User Forum. "Fully exploiting future exascale architectures will require a rethinking of the algorithms used in the large scale applications that advance many science areas vital to DOE and NNSA, such as global climate modeling, turbulent combustion in internal combustion engines, nuclear reactor modeling, additive manufacturing, subsurface flow, and national security applications." The post Overview of the Exascale Additive Manufacturing Project appeared first on insideHPC.

|

|

by Rich Brueckner on (#2MNM4)

The National Security Agency in Maryland is seeking an HPC Software Engineer in our Job of the Week. "NSA's High Performance Computing team develops and integrates advanced architectures and unique technologies to sustain its world-class HPC inventory. Applicants have the opportunity to research, design, develop, program, integrate, and test HPCs and all related components. NSA stays abreast of, and utilizes, new and emerging HPC technologies to address NSA's unique and critical mission." The post Job of the Week: HPC Software Engineer at the NSA appeared first on insideHPC.

|