|

by staff on (#2QK88)

Today DDN appointed Eric Barton as the company’s chief technology officer for software-defined storage. In this role, Barton will lead the company’s strategic roadmap, technology architecture and product design for DDN’s newly created Infinite Memory Engine business unit. Barton brings with him more than 30 years of technology innovation, entrepreneurship and expertise in networking, distributed systems and storage software. The post Eric Barton Joins DDN as CTO for Software-Defined Storage appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-04 00:15 |

|

by staff on (#2QFSN)

Today the Transaction Processing Performance Council (TPC) announced the immediate availability of TPCx-HS Version 2, extending the original benchmark’s scope to include the Spark execution framework and cloud services. “Enterprise investment in Big Data analytics tools is growing exponentially, to keep pace with the rapid expansion of datasets,” said Tariq Magdon-Ismail, chairman of the TPCx-HS committee and staff engineer at VMware. “This is leading to an explosion in new hardware and software solutions for collecting and analyzing data. So there is enormous demand for robust, industry standard benchmarks to enable direct comparison of disparate Big Data systems across both hardware and software stacks, either on-premise or in the cloud. TPCx-HS Version 2 significantly enhances the original benchmark’s scope, and based on industry feedback, we expect immediate widespread interest.” The post Transaction Processing Performance Council Launches TPCx-HS Big Data Benchmark appeared first on insideHPC.

|

|

by staff on (#2QFH7)

The Standard Performance Evaluation Corp.'s High-Performance Group (SPEC/HPG) is offering rewards of up to $5,000 and a free benchmark license for application code and datasets accepted under its new SPEC MPI Accelerator Benchmark Search Program. "Our goal is to develop a benchmark that contains real-world scientific applications and scales from a single node of a supercomputer to thousands of nodes," says Robert Henschel, SPEC/HPG chair. "The broader the base of contributors, the better the chance that we can cover a wide range of scientific disciplines and parallel-programming paradigms." The post SPEC High-Performance Group Seeking Applications for New MPI Accelerator Benchmark appeared first on insideHPC.

|

|

by Rich Brueckner on (#2QFF7)

Kimberly Keeton from HPE gave this talk at the MSST Conference. "Data growth and data analytics requirements are outpacing the compute and storage technologies that have provided the foundation of processor-driven architectures for the last five decades. This divergence requires a deep rethinking of how we build systems, and points towards a memory-driven architecture, where memory is the key resource and everything else, including processing, revolves around it." The post Kimberly Keeton from HPE Presents: Memory-Driven Computing appeared first on insideHPC.

|

|

by staff on (#2QFQ3)

The Summer of HPC is a PRACE program that offers summer placements at HPC centres across Europe. “I was part of the Laboratory of Computer-Aided Design at the University of Ljubljana, working on data visualization for nuclear fusion simulations. I developed and improved the capabilities of the data visualization framework, making it easier and providing more flexibility for scientists to analyze the modeling of Tokamak fusion reactors.” The post Why Summer of HPC is an Experience to Remember appeared first on insideHPC.

|

|

by staff on (#2QEY8)

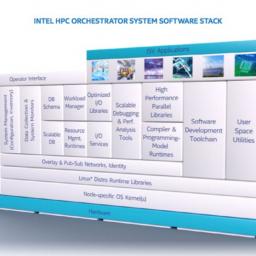

When it comes to getting the performance out of your HPC system, it’s the small things that count. David Lombard, Sr. Principal Engineer at Intel Corporation, explains. "Intel HPC Orchestrator encapsulates the important tradeoffs, and pays attention to the small details that can greatly impact how well the underlying features of the hardware are leveraged to deliver better performance and scalability." The post Reliability, Scalability and Performance – the Impact of Intel HPC Orchestrator appeared first on insideHPC.

|

|

by Rich Brueckner on (#2QBSJ)

Today Bright Computing announced support for the BeeGFS parallel file system in Bright Cluster Manager 8.0. BeeGFS, developed at the Fraunhofer Center for High Performance Computing in Germany and delivered by ThinkParQ, is a parallel cluster file system with a strong focus on performance and flexibility, and is designed for very easy installation and management. […] The post Bright Cluster Manager Adds BeeGFS Support appeared first on insideHPC.

|

|

by Rich Brueckner on (#2QBH7)

In this podcast, the Radio Free HPC team looks at the announcements coming from the Google I/O conference. Of particular interest was Google's second-generation Tensor Processing Unit (TPU2). We've also got news on the new OS/2 operating system, Quantum Computing, and the new Emerging Woman Leader in Technical Computing Award. The post Radio Free HPC Looks at the TPU2 TensorFlow Processing Unit appeared first on insideHPC.

|

|

by Rich Brueckner on (#2QBBR)

The upcoming PASC17 conference has posted further details on a pair of panel discussions taking place at the conference next month. The conference takes place June 26-28 in Lugano, Switzerland. The post PASC17 to Feature Panel Discussions on Moore’s Law and Computational Sciences appeared first on insideHPC.

|

|

by staff on (#2QB0N)

The Eco Blade is a unique server platform engineered specifically for high performance, high density computing environments – simultaneously increasing compute density while decreasing power use. Eco Blade offers two complete, independent servers within 1U of rack space. Each independent server supports up to 64 Intel Xeon processor cores and 1.0 TB of enterprise memory for a total of up to 128 cores and 2 TB of memory per 1U. The post PSSC Labs Launches Eco Blades for HPC appeared first on insideHPC.

|

|

by dangutierrez2 on (#2QAVJ)

Artificial intelligence and machine learning are rising in popularity as the needs of big data call for systems that exceed human capabilities. This article is part of a special insideHPC report that explores trends in machine learning and deep learning. The post Drilling Down into Machine Learning and Deep Learning appeared first on insideHPC.

|

|

by staff on (#2Q8A6)

Today Zadara Storage announced the opening of a new Storage-as-a-Service demo center near Munich, Germany, in collaboration with Intel Corporation. Customers and prospects are now able to experience the Zadara Storage-as-a-Service (STaaS) offering live and test the services using their own unique configurations. The post Storage-as-a-Service Demo Center Opens in Munich appeared first on insideHPC.

|

|

by Rich Brueckner on (#2Q885)

Peter Braam gave this talk at the MSST 2017 Mass Storage Conference. "Campaign Storage offers radical revisions of old workflows and adapts to new technologies. But it also leverages widely available technologies and interfaces to offer stability from the ground up and blend in with the past. We'll discuss how a simple combination of components can support scalability, data analytics and efficient integration with memory based storage." The post Bridging Big – Small, Fast – Slow with Campaign Storage appeared first on insideHPC.

|

|

by Rich Brueckner on (#2Q5AH)

Microsoft Azure CTO Mark Russinovich recently disclosed major advances in Microsoft’s hyperscale deployment of Intel field programmable gate arrays (FPGAs). These advances have resulted in the industry’s fastest public cloud network, and new technology for acceleration of Deep Neural Networks (DNNs) that replicate “thinking” in a manner that’s conceptually similar to that of the human brain. The post How Intel FPGAs Power Azure Deep Learning appeared first on insideHPC.

|

|

by Rich Brueckner on (#2Q58D)

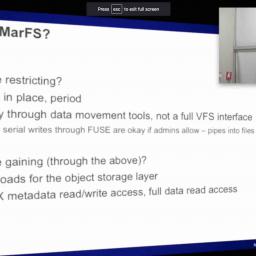

David Bonnie from LANL presented this talk at the MSST 2017 Mass Storage Conference. MarFS is a Near-POSIX File System using cloud storage for data and many POSIX file systems for metadata. "With MarFS in production at LANL since fall 2016, we have gained new insights, learned lessons, and expanded our future plans. We'll discuss the various hurdles required to deploy such an ambitious system with minimal manpower. Further, we'll delve into the challenges, triumphs, and defeats on the road to a new tier of inexpensive scalable storage." The post An Update on MarFS in Production appeared first on insideHPC.

|

|

by staff on (#2Q24V)

The 13th Annual OpenFabrics Alliance (OFA) Workshop wrapped at the end of March with a look toward the future. The annual gathering, held this year in Austin, Texas, was devoted to advancing cutting-edge networking technology through the ongoing collaborative efforts of OpenFabrics Software (OFS) producers and users. With a record 130+ attendees, the 2017 Workshop expanded on the OFA’s commitment to being an open organization by hosting an engaging Town Hall discussion and an At Large Board election, filling two newly added director seats for current members. The post A Recap of the 2017 OpenFabrics Workshop appeared first on insideHPC.

|

|

by Rich Brueckner on (#2Q239)

The Ohio Supercomputer Center (OSC) seeks a Senior Scientific Applications Engineer to support the overall mission of the group and in particular to contribute to the overall mission of the Center with a focus on application support for OSC’s industrial engagement and research programs. The post Job of the Week: Senior Scientific App Engineer at Ohio State appeared first on insideHPC.

|

|

by Rich Brueckner on (#2Q1YG)

Christian Kniep is hosting a half-day Linux Container Workshop on Optimizing IT Infrastructure and High-Performance Workloads on June 23 in Frankfurt. "Docker as the dominant flavor of Linux Container continues to gain momentum within datacenters all over the world. It is able to benefit legacy infrastructure by leveraging the lower overhead compared to traditional, hypervisor-based virtualization. But there is more to Linux Containers - and Docker in particular, which this workshop will explore." The post ISC 2017 Workshop Preview: Optimizing Linux Containers for HPC & Big Data Workloads appeared first on insideHPC.

|

|

by staff on (#2Q1VD)

The new ACM SIGHPC Emerging Woman Leader in Technical Computing award will be presented every two years, with the first presentation in November during SC17. "The obvious benefit is the recognition and exposure that can come from receiving an award like this one. But, just as important, over time the recipients of this award will serve as models of success that can encourage others as they start their own careers." The post Announcing the Emerging Woman Leader in Technical Computing Award appeared first on insideHPC.

|

|

by Rich Brueckner on (#2Q1QX)

"The Liqid Composable Infrastructure (CI) Platform is the first solution to support GPUs as a dynamic, assignable, bare-metal resource. With the addition of graphics processing, the Liqid CI Platform delivers the industry’s most fully realized approach to composable infrastructure architecture. With this technology, disaggregated pools of compute, networking, data storage and graphics processing elements can be deployed on demand as bare-metal resources and instantly repurposed when infrastructure needs change." The post Liqid Showcases Composable Infrastructure for GPUs at GTC 2017 appeared first on insideHPC.

|

|

by staff on (#2Q00C)

It’s fair to say that women continue to be underrepresented in STEM, but the question is whether there is a systemic bias making it difficult for women to join and succeed in tech industries, or has the tech industry failed to motivate and persuade women to join? Intel’s Figen Ulgen shares her view. The post A Seat at the Table – The Value of Women in High-Performance Computing appeared first on insideHPC.

|

|

by staff on (#2PYCT)

"In cooperation with vendors and TACC, BioTeam utilizes the lab to evaluate solutions for its clients by standing up, configuring and testing new infrastructure under conditions relevant to life sciences in order to deliver on its mission of providing objective, vendor agnostic solutions to researchers. The life sciences community is producing increasingly large amounts of data from sources ranging from laboratory analytical devices, to research, to patient data, which is putting IT organizations under pressure to support these growing workloads." The post Avere Systems Powers BioTeam Test Lab at TACC appeared first on insideHPC.

|

|

by Rich Brueckner on (#2PY1H)

In this video, Michael Wolfe from PGI continues his series of tutorials on parallel programming. "The second in a series of short videos to introduce you to parallel programming with OpenACC and the PGI compilers, using C++ or Fortran. You will learn by example how to build a simple example program, how to add OpenACC directives, and to rebuild the program for parallel execution on a multicore system. To get the most out of this video, you should download the example programs and follow along on your workstation." The post Introduction to Parallel Programming with OpenACC – Part 2 appeared first on insideHPC.
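
As a rough illustration of what "adding OpenACC directives" to a C++ loop can look like, here is a minimal sketch. It is not taken from the tutorial's example programs; the function name `saxpy` and the choice of a scaled vector addition are assumptions made for illustration. A compiler without OpenACC support simply ignores the pragma and runs the loop sequentially.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical example kernel (not from the tutorial): y[i] = a * x[i] + y[i].
void saxpy(float a, const std::vector<float>& x, std::vector<float>& y) {
    // Only process the elements both vectors have.
    const std::size_t n = x.size() < y.size() ? x.size() : y.size();
    const float* xp = x.data();
    float* yp = y.data();

    // The directive asks an OpenACC-aware compiler to parallelize the loop.
    // copyin/copy describe data movement for targets with separate memory.
    #pragma acc parallel loop copyin(xp[0:n]) copy(yp[0:n])
    for (std::size_t i = 0; i < n; ++i) {
        yp[i] = a * xp[i] + yp[i];
    }
}
```

The loop body is unchanged from the sequential version; the directive is the only addition, which is why the same source can be rebuilt for sequential or parallel execution.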

|

|

by staff on (#2PY1J)

OCF in the UK has deployed a new 600 teraflop supercomputer at the University of Bristol. Designed, integrated, and configured by OCF, the system is the largest of any UK university by core count. "Early benchmarking is showing that the new system is three times faster than our previous cluster." The post OCF Deploys 600 Teraflop Cluster at University of Bristol appeared first on insideHPC.

|

|

by MichaelS on (#2PXXP)

Video streams may be constructed using various standards, which contain information such as resolution, frame rate, color depth, etc. It is the job of the transcoder to take in one format and produce another format that would then be used downstream. While an application could be written that does the transformation, optimizing the application requires the expertise of the hardware manufacturer. The post Transcoding for Optimal Video Consumption appeared first on insideHPC.

|

|

by Rich Brueckner on (#2PXVT)

In this video from GTC 2017, Jaan Mannik from One Stop Systems describes the company's new HPC as a Service offering. As makers of high density GPU expansion chassis, One Stop Systems designs and manufactures high performance computing systems that revolutionize the data center by increasing speed to the Internet while reducing cost and impact to the infrastructure. The post One Stop Systems Showcases HPC as a Service at GTC 2017 appeared first on insideHPC.

|

|

by staff on (#2PVGM)

Hyperion Research, the new name for the former IDC HPC group, today announced it is adding two new categories to its global awards program for high performance computing innovation. Both new categories are for innovations benefiting HPC use in data centers, either dedicated HPC data centers or the growing number of enterprise data centers that are exploiting HPC server and storage systems for advanced analytics. The post Hyperion Research Announces New HPC Innovation Awards for Data Centers appeared first on insideHPC.

|

|

by staff on (#2PSX9)

Hewlett Packard Enterprise today introduced the world’s largest single-memory computer, the latest milestone in The Machine research project. "The prototype unveiled today contains 160 terabytes (TB) of memory, capable of simultaneously working with the data held in every book in the Library of Congress five times over, or approximately 160 million books. It has never been possible to hold and manipulate whole data sets of this size in a single-memory system, and this is just a glimpse of the immense potential of Memory-Driven Computing." The post HPE Introduces the World’s Largest Single-memory Computer appeared first on insideHPC.

|

|

by Rich Brueckner on (#2PSRN)

“The DEEP-ER project has created far-reaching impact. Its results have led to widespread innovation and substantially reinforced the position of European industry and academia in HPC. We are more than happy that we are granted the opportunity to continue our DEEP projects journey and generalize the Cluster-Booster approach to create a truly Modular Supercomputing system,” says Prof. Dr. Thomas Lippert, Head of Jülich Supercomputing Centre and Scientific Coordinator of the DEEP-ER project. The post DEEP-ER Project Paves the Way to Future Supercomputers appeared first on insideHPC.

|

|

by staff on (#2PSM3)

Today D-Wave Systems announced that it has received up to $50 million in funding from PSP Investments. This facility brings D-Wave’s total funding to approximately US$200 million. The new capital is expected to enable D-Wave to deploy its next-generation quantum computing system with more densely-connected qubits, as well as platforms and products for machine learning applications. “This commitment from PSP Investments is a strong validation of D-Wave’s leadership in quantum computing,” said Vern Brownell, CEO of D-Wave. “While other organizations are researching quantum computing and building small prototypes in the lab, the support of our customers and investors enables us to deliver quantum computing technology for real-world applications today. In fact, we’ve already demonstrated practical uses of quantum computing with innovative companies like Volkswagen. This new investment provides a solid base as we build the next generation of our technology.” The post D-Wave Lands $50M Funding for Next Generation Quantum Computers appeared first on insideHPC.

|

|

by Rich Brueckner on (#2PNVH)

We are very excited to bring you this livestream of the 2017 MSST Conference in Santa Clara. We'll be broadcasting all the talks Wednesday, May 17 starting at 8:30am PDT. The post Livestream: 2017 MSST Mass Storage Conference appeared first on insideHPC.

|

|

by MichaelS on (#2PSBH)

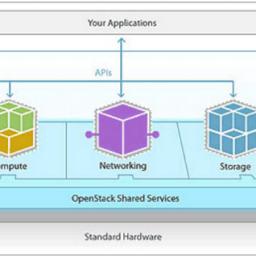

This is the fourth entry in an insideHPC series that explores the HPC transition to the cloud, and what your business needs to know about this evolution. This series, also available as a complete guide, covers cloud computing for HPC, why the OS is important when running HPC applications, OpenStack fundamentals and more. The post Why the OS is So Important when Running HPC Applications appeared first on insideHPC.

|

|

by Sarah Rubenoff on (#2PP5Z)

Many more companies are turning to in-memory computing (IMC) as they struggle to analyze and process increasingly large amounts of data. That said, it’s often hard to make sense of the growing world of IMC products and solutions. A recent white paper from GridGain aims to help businesses decide which solution best matches their specific needs. The post Why IMC is Right for Today’s Fast-Data and Big-Data Applications appeared first on insideHPC.

|

|

by staff on (#2PP61)

Today Cray and the Markley Group announced a partnership to provide supercomputing as a service solutions. “The need for supercomputers has never been greater,” said Patrick W. Gilmore, chief technology officer at Markley. “For the life sciences industry especially, speed to market is critical. By making supercomputing and big data analytics available in a hosted model, Markley and Cray are providing organizations with the opportunity to reap significant benefits, both economically and operationally.” The post Cray and Markley Group to Offer Supercomputing as a Service appeared first on insideHPC.

|

|

by staff on (#2PNXX)

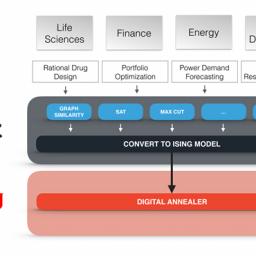

Today Fujitsu and 1QB Information Technologies Inc. announced that they are collaborating on quantum-inspired technology in the field of artificial intelligence, focusing on the areas of combinatorial optimization and machine learning. The companies will work together in both the Japanese and global markets to develop applications which address industry problems using AI developed for use with quantum computers. The post Fujitsu and 1QBit Collaborate on Quantum-Inspired AI Cloud Service appeared first on insideHPC.

|

|

by staff on (#2PN8J)

Next-generation sequencing methods are empowering doctors and researchers to improve their ability to treat diseases, predict and prevent diseases before they occur, and personalize treatments to specific patient profiles. "With this increase in knowledge comes a tidal wave of data. Genomic data is growing so quickly that scientists are predicting that this data will soon take the lead as the largest data category in the world, eventually creating more digital information than astronomy, particle physics and even popular Internet sites like YouTube." The post Genome Analytics Driving a Healthcare Revolution appeared first on insideHPC.

|

|

by staff on (#2PHMW)

Today Penguin Computing announced support for Singularity containers on its Penguin Computing On-Demand (POD) HPC Cloud and Scyld ClusterWare HPC management software. “Our researchers are excited about using Singularity on POD,” said Jon McNally, Chief HPC Architect at ASU Research Computing. “Portability and the ability to reproduce an environment is key to peer reviewed research. Unlike other container technologies, Singularity allows them to run at speed and scale.” The post Penguin Computing Adds Support for Singularity Containers on POD HPC Cloud appeared first on insideHPC.

|

|

by staff on (#2PHFJ)

Today Mellanox announced that the University of Waterloo selected Mellanox EDR 100G InfiniBand solutions to accelerate their new supercomputer. The new supercomputer will support a broad and diverse range of academic and scientific research in mathematics, astronomy, science, the environment and more. “The growing demands for research and supporting more complex simulations led us to look for the most advanced, efficient, and scalable HPC platforms,” said John Morton, technical manager for SHARCNET. “We have selected the Mellanox InfiniBand solutions because their smart acceleration engines enable high performance, efficiency and robustness for our applications.” The post Mellanox InfiniBand to Power Science at University of Waterloo appeared first on insideHPC.

|

|

by staff on (#2PHDJ)

Today the Gauss Centre for Supercomputing (GCS) in Germany approved 30 large-scale projects as part of their 17th call for large-scale proposals. Combined, these projects received 2.1 billion core hours, marking the highest total ever delivered by the three GCS centres. "GCS awards large-scale allocations to researchers studying earth and climate sciences, chemistry, particle physics, materials science, astrophysics, and scientific engineering, among other research areas of great importance to society." The post Gauss Centre in Germany Awards 2.1 Billion Core Hours for Science appeared first on insideHPC.

|

|

by Rich Brueckner on (#2PH9A)

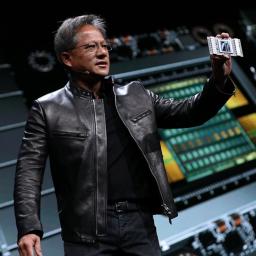

In this podcast, the Radio Free HPC team looks at Volta, Nvidia's new GPU architecture that delivers up to 5x the performance of its predecessor. "At the GPU Technology Conference, Nvidia CEO Jen-Hsun Huang introduced a lineup of new Volta-based AI supercomputers including a powerful new version of our DGX-1 deep learning appliance; announced the Isaac robot-training simulator; unveiled the NVIDIA GPU Cloud platform, giving developers access to the latest, optimized deep learning frameworks; and unveiled a partnership with Toyota to help build a new generation of autonomous vehicles." The post Radio Free HPC Looks at the New Volta GPUs for HPC & AI appeared first on insideHPC.

|

|

by staff on (#2PH44)

The Intel Omni-Path Architecture is the next-generation fabric for high-performance computing. In this feature, we will focus on what it takes for a supercomputer application to scale well by taking full advantage of processor features. "To keep these apps working at their peak means not letting them starve for the next bit or byte or calculated result, for whatever reason." The post Intel Omni-Path Architecture: What’s a Supercomputer App Want? appeared first on insideHPC.

|

|

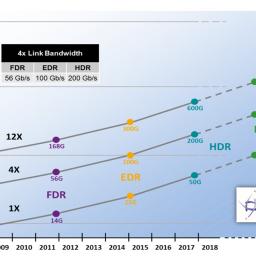

by Rich Brueckner on (#2PED6)

The InfiniBand Trade Association (IBTA) has updated their InfiniBand Roadmap. With HDR 200 Gb/sec technologies shipping this year, the roadmap looks out to an XDR world where server connectivity reaches 1000 Gb/sec. "The IBTA’s InfiniBand roadmap is continuously developed as a collaborative effort from the various IBTA working groups. Members of the IBTA working groups include leading enterprise IT vendors who are actively contributing to the advancement of InfiniBand. The roadmap details 1x, 4x, and 12x port widths with bandwidths reaching 600Gb/s data rate HDR in 2017. The roadmap is intended to keep the rate of InfiniBand performance increase in line with systems-level performance gains." The post InfiniBand Roadmap Foretells a World Where Server Connectivity is at 1000 Gb/sec appeared first on insideHPC.
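
The port-width figures quoted above fit simple lane arithmetic: port bandwidth is the number of lanes multiplied by the per-lane data rate. The sketch below assumes the 200 Gb/sec HDR figure refers to a 4x port, which gives 50 Gb/sec per lane; that per-lane value is inferred from the numbers in the blurb rather than quoted from the roadmap, and the names `kHdrLaneGbps` and `port_bandwidth_gbps` are hypothetical.

```cpp
// Assumed per-lane HDR data rate in Gb/s, inferred from the figures above
// (200 Gb/s for a 4x port, 600 Gb/s for a 12x port).
constexpr int kHdrLaneGbps = 50;

// Port bandwidth is the lane count times the per-lane data rate.
constexpr int port_bandwidth_gbps(int lanes, int lane_gbps) {
    return lanes * lane_gbps;
}

// The three port widths named in the roadmap quote.
constexpr int kHdr1x  = port_bandwidth_gbps(1,  kHdrLaneGbps);
constexpr int kHdr4x  = port_bandwidth_gbps(4,  kHdrLaneGbps);
constexpr int kHdr12x = port_bandwidth_gbps(12, kHdrLaneGbps);
```

Under that assumption the 4x and 12x results match the 200 Gb/sec and 600 Gb/sec figures in the text.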

|

|

by Rich Brueckner on (#2PEBJ)

"In this new world, every citizen needs data science literacy. UC Berkeley is leading the way on broad curricular immersion with data science, and other universities will soon follow suit. The definitive data science curriculum has not been written, but the guiding principles are computational thinking, statistical inference, and making decisions based on data. “Bootcamp” courses don't take this approach, focusing mostly on technical skills (programming, visualization, using packages). At many computer science departments, on the other hand, machine-learning courses with multiple pre-requisites are only accessible to majors. The key of Berkeley’s model is that it truly aims to be “Data Science for All.”" The post Lorena Barba Presents: Data Science for All appeared first on insideHPC.

|

|

by Rich Brueckner on (#2PBKV)

Darren Cepulis from ARM gave this talk at the HPC User Forum. "ARM delivers enabling technology behind HPC. The 64-bit design of the ARMv8-A architecture combined with Advanced SIMD vectorization are ideal to enable large scientific computing calculations to be executed efficiently on ARM HPC machines. In addition ARM and its partners are working to ensure that all the software tools and libraries, needed by both users and systems administrators, are provided in readily available, optimized packages." The post Video: ARM HPC Ecosystem appeared first on insideHPC.

|

|

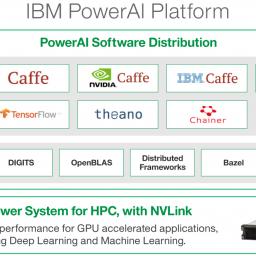

by staff on (#2PBGB)

“IBM PowerAI on Power servers with GPU accelerators provide at least twice the performance of our x86 platform; everything is faster and easier: adding memory, setting up new servers and so on,” said current PowerAI customer Ari Juntunen, CTO at Elinar Oy Ltd. “As a result, we can get new solutions to market very quickly, protecting our edge over the competition. We think that the combination of IBM Power and PowerAI is the best platform for AI developers in the market today. For AI, speed is everything; nothing else comes close in our opinion.” The post IBM’s New PowerAI Software Speeds Deep Learning appeared first on insideHPC.

|

|

by Rich Brueckner on (#2P84E)

In this podcast, Marc Hamilton from Nvidia describes how the new Volta GPUs will power the next generation of systems for HPC and AI. According to Nvidia, the Tesla V100 accelerator is the world’s highest performing parallel processor, designed to power the most computationally intensive HPC, AI, and graphics workloads. The post Podcast: Marc Hamilton on how Volta GPUs will Power Next-Generation HPC and AI appeared first on insideHPC.

|

|

by Rich Brueckner on (#2P7WX)

Liqid Inc. has fully integrated GPU support into the Liqid Composable Infrastructure (CI) Platform. "Liqid’s CI Platform is the first solution to support GPUs as a dynamic, assignable, bare-metal resource. With the addition of graphics processing, the Liqid CI Platform delivers the industry’s most fully realized approach to composable infrastructure architecture." The post Liqid Delivers Composable Infrastructure Solution for Dynamic GPU Resource Allocation appeared first on insideHPC.

|

|

by Rich Brueckner on (#2P7RE)

The MSST Mass Storage Conference in Silicon Valley is just a few days away, and the agenda is packed with High Performance Computing topics. In one of the invited talks, Kimberly Keeton from Hewlett Packard Enterprise will speak on Memory Driven Computing. We caught up with Kimberly to learn more. The post Memory Driven Computing in the Spotlight at MSST Conference Next Week appeared first on insideHPC.

|

|

by Rich Brueckner on (#2P4ZY)

This week at the GPU Technology Conference, Nvidia CEO Jensen Huang on Wednesday launched Volta, a new GPU architecture that delivers 5x the performance of its predecessor. "Over the course of two hours, Huang introduced a lineup of new Volta-based AI supercomputers including a powerful new version of our DGX-1 deep learning appliance; announced the Isaac robot-training simulator; unveiled the NVIDIA GPU Cloud platform, giving developers access to the latest, optimized deep learning frameworks; and unveiled a partnership with Toyota to help build a new generation of autonomous vehicles." The post Nvidia Unveils GPUs with Volta Architecture appeared first on insideHPC.

|

|

by Richard Friedman on (#2P3TW)

Parallel STL now makes it possible to transform existing sequential C++ code to take advantage of the threading and vectorization capabilities of modern hardware architectures. It does this by extending the C++ Standard Template Library with an execution policy argument that specifies the degree of threading and vectorization for each algorithm used. The post C++ Parallel STL Introduced in Intel Parallel Studio XE 2018 Beta appeared first on insideHPC.
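
To make the "execution policy argument" concrete, here is a minimal sketch using the C++17 standard form of the interface (`std::execution` in `<execution>`). This is an assumption for illustration: the Intel beta described above may expose the policies under different headers or namespaces. The macro `USE_PARALLEL_POLICIES` and the function names are hypothetical; the macro exists only because some standard library implementations need an extra threading library at link time for the parallel policies.

```cpp
#include <algorithm>
#include <functional>
#include <numeric>
#include <vector>

#ifdef USE_PARALLEL_POLICIES  // hypothetical opt-in macro, not part of any library
#include <execution>
#endif

// Sorts in place. With policies enabled, the extra first argument is the
// only change from the sequential call.
void sort_values(std::vector<double>& values) {
#ifdef USE_PARALLEL_POLICIES
    // par_unseq permits both threading and vectorization.
    std::sort(std::execution::par_unseq, values.begin(), values.end());
#else
    std::sort(values.begin(), values.end());
#endif
}

// Returns the sum of x*x over all elements.
double sum_of_squares(const std::vector<double>& values) {
#ifdef USE_PARALLEL_POLICIES
    // par permits threading; the reduction order is unspecified.
    return std::transform_reduce(std::execution::par,
                                 values.begin(), values.end(), 0.0,
                                 std::plus<>(),
                                 [](double x) { return x * x; });
#else
    return std::accumulate(values.begin(), values.end(), 0.0,
                           [](double acc, double x) { return acc + x * x; });
#endif
}
```

The policy is a hint about what the library is allowed to do, not a guarantee of speedup, and the caller remains responsible for making sure the element operations are safe to run concurrently.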

|