
by staff on (#1SVK0)

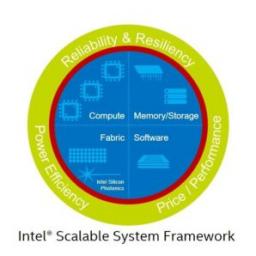

With the Intel Scalable System Framework Architecture Specification and Reference Designs, Intel is making it easier to accelerate time to discovery through high-performance computing. The Reference Architectures (RAs) and Reference Designs take Intel Scalable System Framework to the next step: deploying it in ways that let users run their workloads with confidence and let system builders innovate and differentiate their designs. The post Facilitate HPC Deployments with Reference Designs for Intel Scalable System Framework appeared first on insideHPC.


Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Field | Value |
| --- | --- |
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-04 10:30 |


by Rich Brueckner on (#1SV7C)

"This release will allow aerospace stress analysts to do their tasks in a much more efficient manner. We really focused on understanding the desired workflows and creating an environment to easily move between CAD models, CAE models and results, and external tools such as Microsoft Excel," said Dr. Robert Yancey, Altair Vice President of Aerospace. "We look forward to working with our Aerospace customers to help them implement their workflows in the streamlined HyperWorks environment." The post Altair Updates HyperWorks for Aerospace appeared first on insideHPC.


by Rich Brueckner on (#1SRZP)

Charles W. Nakhleh from LANL presented this talk at the 2016 DOE NNSA SSGF Annual Program Review. "This talk will explore some of the future opportunities and exciting scientific and technological challenges in the National Nuclear Security Administration Stockpile Stewardship Program. The program’s objective is to ensure that the nation's nuclear deterrent remains safe, secure and effective. Meeting that objective requires sustained excellence in a variety of scientific and engineering disciplines and has led to remarkable advances in theory, experiment and simulation." The post The Challenges and Rewards of Stockpile Stewardship appeared first on insideHPC.


by Rich Brueckner on (#1SRXB)

Applications are now open for the annual SuperComputing Camp in Colombia. The five-day camp takes place Oct. 16-21 at CIBioFI at Universidad del Valle in Santiago de Cali. The post SuperComputing Camp Returns to Colombia appeared first on insideHPC.


by staff on (#1SRTS)

The Piz Daint supercomputer at the Swiss National Supercomputing Centre (CSCS) is again assisting researchers in the competition for the prestigious Gordon Bell prize. "Researchers led by Peter Vincent from Imperial College London have made this year’s list of finalists for the Gordon Bell prize, with the backing of Piz Daint at the Swiss National Supercomputing Centre. The prize is awarded annually in November at SC, the world’s largest conference on supercomputing. It honors the success of scientists who are able to achieve very high efficiencies for their research codes running on the fastest supercomputer architectures currently available." The post Powering Aircraft CFD with the Piz Daint Supercomputer appeared first on insideHPC.


by Rich Brueckner on (#1SNXR)

Rick Wagner from SDSC presented this talk at the 4th Annual MVAPICH User Group. "At SDSC, we have created a novel framework and infrastructure by providing virtual HPC clusters to projects using the NSF sponsored Comet supercomputer. Managing virtual clusters on Comet is similar to managing a bare-metal cluster in terms of processes and tools that are employed. This is beneficial because such processes and tools are familiar to cluster administrators." The post Video: User Managed Virtual Clusters in Comet appeared first on insideHPC.


by staff on (#1SNWJ)

Over at NASA, Michelle Moyers writes that the 2016 NASA Software of the Year Award has gone to Pegasus 5, a revolutionary CFD tool. "Developed in-house by a team led by aerospace engineer Stuart Rogers from NASA Ames, Pegasus 5 has been used for aerodynamic modeling and simulation by nearly every NASA program over the past 15 years, including the space shuttle, the next-generation Orion spacecraft and Space Launch System, and commercial crew programs." The post Pegasus 5 CFD Program Wins NASA’s Software of the Year appeared first on insideHPC.


by Rich Brueckner on (#1SK79)

In this podcast, the Radio Free HPC team previews the HPC User Forum & StartupHPC Events coming up in the fall of 2016. The post Radio Free HPC Previews the HPC User Forum & StartupHPC Events appeared first on insideHPC.


by Rich Brueckner on (#1SH44)

Today ALA Services announced it has acquired Adaptive Computing of Provo, Utah. "Adaptive Computing is adding proven growth expertise and infrastructure through this acquisition by ALA Services," said Marty Smuin, CEO of Adaptive Computing. "Arthur L. Allen brings deep insights and a proven process he has used to drive success. We look forward to accelerating our business and improving value to our customers by combining ALA's expertise with Adaptive Computing's leading technology and great position within the market." The post ALA Services Acquires Adaptive Computing appeared first on insideHPC.


by Rich Brueckner on (#1SG41)

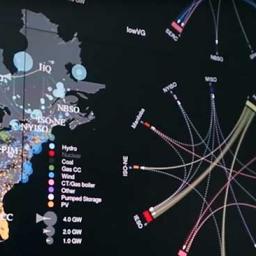

"By modeling the power system in depth and detail, NREL has helped reset the conversation about how far we can go operationally with wind and solar in one of the largest power systems in the world," said the Energy Department's Charlton Clark, a DOE program manager for the study. "Releasing the production cost model, underlying data, and visualization tools alongside the final report reflects our commitment to giving power system planners, operators, regulators, and others the tools to anticipate and plan for operational and other important changes that may be needed in some cleaner energy futures." The post Supercomputing Alternative Energy in the Eastern Power Grid appeared first on insideHPC.


by Rich Brueckner on (#1SFYR)

Miles Lubin presented this talk at the CSGF Annual Program Review. "JuMP is an open-source software package in Julia for modeling optimization problems. In less than three years since its release, JuMP has received more than 50 citations and has been used in at least 10 universities for teaching. We tell the story of how JuMP was developed, explain the role of the DOE CSGF and high-performance computing, and discuss ongoing extensions to JuMP developed in collaboration with DOE labs." The post Video: JuMP – A Modeling Language for Mathematical Optimization appeared first on insideHPC.


by staff on (#1SFTH)

Scientists at the Energy Department's National Renewable Energy Laboratory (NREL) discovered a use for perovskites that could propel the development of quantum computing. "Considerable research at NREL and elsewhere has been conducted into the use of organic-inorganic hybrid perovskites as a solar cell. Perovskite systems have been shown to be highly efficient at converting sunlight to electricity. Experimenting on a lead-halide perovskite, NREL researchers found evidence the material could have great potential for optoelectronic applications beyond photovoltaics, including in the field of quantum computers." The post NREL Discovery Could Propel Quantum Computing appeared first on insideHPC.


by staff on (#1SFRQ)

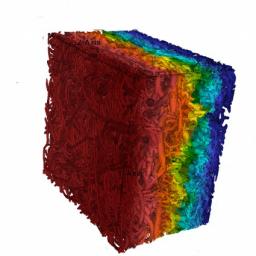

Today, the National Geospatial-Intelligence Agency and NSF released 3-D topographic maps that show Alaska’s terrain in greater detail than ever before. Powered by the Blue Waters supercomputer, the maps are the result of a White House Arctic initiative to inform better decision-making in the Arctic. "We can’t live without Blue Waters now," said Paul Morin, head of the University of Minnesota’s Polar Geospatial Center. "The supercomputer itself, the tools the Blue Waters team at NCSA developed, the techniques they’ve come up with in using this hardware. Blue Waters is changing the way digital terrain is made and that is changing how science is done in the Arctic." The post Supercomputing 3D Elevation Maps of Alaska on Blue Waters appeared first on insideHPC.


by staff on (#1SC4W)

Engineers at Sandia are developing new datacenter cooling technologies that could save millions of gallons of water nationwide. The post Saving Water with Sandia’s New Datacenter Cooling Technology appeared first on insideHPC.


by Rich Brueckner on (#1SC0W)

James Reinders presented this talk at the 2016 Argonne Training Program on Extreme-Scale Computing. Reinders is the author of multiple books on parallel programming. His most recent book, Intel Xeon Phi Processor High Performance Programming: Knights Landing Edition (2nd edition), was co-authored with James Jeffers and Avinash Sodani. The post Video: SIMD, Vectorization, and Performance Tuning appeared first on insideHPC.


by Rich Brueckner on (#1SBVH)

EPSRC and Cray have signed an agreement to add a Cray XC40 Development System with Intel Xeon Phi processors to ARCHER, the UK National Supercomputing Service. "The new Development system will have a very similar environment to the main ARCHER system, including Cray's Aries interconnect, operating system and Cray tools, meaning that interested users will enjoy a straightforward transition." The post Cray to Add Intel Xeon Phi to Archer Supercomputing Service in the UK appeared first on insideHPC.


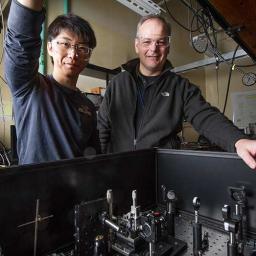

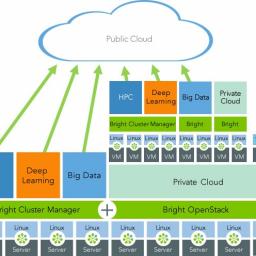

by Rich Brueckner on (#1SBSA)

"We have enhanced Bright Cluster Manager 7.3 so our customers can quickly and easily deploy new deep learning techniques to create predictive applications for fraud detection, demand forecasting, click prediction, and other data-intensive analyses," said Martijn de Vries, Chief Technology Officer of Bright Computing. "Going forward, customers using Bright to deploy and manage clusters for deep learning will not have to worry about finding, configuring, and deploying all of the dependent software components needed to run deep learning libraries and frameworks." The post New Bright for Deep Learning Solution Designed for Business appeared first on insideHPC.


by MichaelS on (#1SBH2)

"In the HPC domain, Python can be used to develop a wide range of applications. While tight loops may still need to be coded in C or FORTRAN, Python can still be used. As more systems become available with coprocessors or accelerators, Python can be used to offload the main CPU and take advantage of the coprocessor. pyMIC is a Python Offload Module for the Intel Xeon Phi Coprocessor and is available at popular open source code repositories." The post Python and HPC appeared first on insideHPC.


by Rich Brueckner on (#1S8MS)

Today Dell Inc. and EMC Corp. announced that they intend to close the transaction to combine Dell and EMC on Wednesday, September 7, 2016. The name of the newly combined company will be Dell Technologies. The post Dell and EMC Transaction to Close on September 7, 2016 appeared first on insideHPC.


by Rich Brueckner on (#1S825)

"Bridges has enabled early scientific successes, for example in metagenomics, organic semiconductor electrochemistry, genome assembly in endangered species, and public health decision-making. Over 2,300 users currently have access to Bridges for an extremely wide range of research spanning neuroscience, machine learning, biology, the social sciences, computer science, engineering, and many other fields." The post Bridges Supercomputer Enters Production at PSC appeared first on insideHPC.


by Douglas Eadline on (#1S7WR)

The move to network offloading is the first step in co-designed systems. A large amount of overhead is required to service the huge number of packets required for modern data rates. This amount of overhead can significantly reduce network performance. Offloading network processing to the network interface card helped solve this bottleneck as well as some others. The post Co-Design Offloading appeared first on insideHPC.
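
A back-of-the-envelope sketch of why per-packet overhead becomes a bottleneck: the Python below computes how many packets per second a host must handle to keep a link busy, and how little time that leaves for each packet. It assumes a fixed 1500-byte packet (a common Ethernet MTU) and ignores framing overhead such as the preamble and inter-frame gap; the line rates are illustrative, and the function names are hypothetical rather than part of any networking API.

```python
# Rough per-packet time budget at a given line rate.
# Assumptions (illustrative only): every packet is the same size, and
# Ethernet framing overhead (preamble, inter-frame gap) is ignored.


def packets_per_second(line_rate_gbps: float, packet_bytes: int) -> float:
    """Packets per second needed to keep the link fully busy."""
    bits_per_packet = packet_bytes * 8
    return line_rate_gbps * 1e9 / bits_per_packet


def ns_per_packet(line_rate_gbps: float, packet_bytes: int) -> float:
    """Time available to process each packet, in nanoseconds."""
    return 1e9 / packets_per_second(line_rate_gbps, packet_bytes)


if __name__ == "__main__":
    for rate in (10, 25, 100):
        pps = packets_per_second(rate, 1500)
        budget = ns_per_packet(rate, 1500)
        print(f"{rate:>3} Gb/s: {pps / 1e6:6.2f} Mpps, {budget:7.1f} ns per packet")
```

Under these assumptions a 100 Gb/s link delivers roughly 8.3 million packets per second, leaving about 120 ns per packet for all protocol processing. A budget that tight is what makes moving that work from the host CPU onto the NIC attractive.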


by Rich Brueckner on (#1S7VE)

Paul Messina presented this talk at the 2016 Argonne Training Program on Extreme-Scale Computing. "The President's NSCI initiative calls for the development of Exascale computing capabilities. The U.S. Department of Energy has been charged with carrying out that role in an initiative called the Exascale Computing Project (ECP). Messina has been tapped to lead the project, heading a team with representation from the six major participating DOE national laboratories: Argonne, Los Alamos, Lawrence Berkeley, Lawrence Livermore, Oak Ridge and Sandia. The project program office is located at Oak Ridge." The post Paul Messina Presents: A Path to Capable Exascale Computing appeared first on insideHPC.


by staff on (#1S7QS)

Today Mellanox announced that SysEleven in Germany used the company's 25/50/100GbE Open Ethernet solutions to build a new SSD-based, fully-automated cloud datacenter. "We chose the Mellanox suite of products because it allows us to fully automate our state-of-the-art Cloud data center," said Harald Wagener, CTO, SysEleven. "Mellanox solutions are highly scalable and cost effective, allowing us to leverage the Company’s best-in-class Ethernet technology that features the industry’s best bandwidth with the flexibility of the OpenStack open architecture." The post Mellanox Ethernet Solutions Power Germany’s Most Advanced Cloud Datacenter appeared first on insideHPC.


by staff on (#1S4S2)

The Department of Energy has funded $3.8 million for 13 new industry projects as part of its HPC4Mfg program. "We're excited about this second round of projects because companies are bringing forward challenges that we can help address, which result in advancing innovation in U.S. manufacturing and increasing our economic competitiveness," said LLNL mathematician Peg Folta, the director of the HPC4Mfg Program. The post DOE Funds 13 HPC4Mfg Clean Energy Projects appeared first on insideHPC.


by staff on (#1S4JT)

Indiana University plans to unveil three new HPC resources at a launch event on Sept. 1: Jetstream, Big Red II+, and Diet. "With these new systems, IU continues to provide our researchers the leading-edge computational tools needed for the scale of today’s research problems," said Brad Wheeler, IU vice president for IT and CIO. "Each of these systems is quite distinct in its purpose to meet the needs of our researchers and students." The post Indiana University to Launch Three New HPC Systems appeared first on insideHPC.


by Rich Brueckner on (#1S4E3)

Thomas Schulthess presented this talk at the MVAPICH User Group. "Implementation of exascale computing will be different in that application performance is supposed to play a central role in determining the system performance, rather than just considering floating point performance of the high-performance Linpack benchmark. This immediately raises the question as to what the yardstick will be, by which we measure progress towards exascale computing. I will discuss what type of performance improvements will be needed to reach kilometer-scale global climate and weather simulations. This challenge will probably require more than exascale performance." The post Exascale Computing – What are the Goals and the Baseline? appeared first on insideHPC.


by staff on (#1S3ZW)

Seven women who work in IT departments at research institutions around the country have been selected to help build and operate the high performance SCinet conference network at SC16. The announcement came from the Women in IT Networking at SC program, also known as WINS. The post WINS Program Selects Seven Women to Help Build SCinet at SC16 appeared first on insideHPC.


by Rich Brueckner on (#1S3WE)

We'd like to invite our readers to participate in our new HPC Customer Experience Survey. It's an effort to better understand our readers and what is really happening out there in the world of High Performance Computing. "This survey should take less than 10 minutes to complete. All information you provide will be treated as private and kept confidential." The post Requesting Your Input on the HPC Customer Experience Survey appeared first on insideHPC.


by Rich Brueckner on (#1S3TJ)

Today SGI announced a significant investment in extreme scale software research at the Irish Centre for High-End Computing (ICHEC), a top European center. The investment highlights the commitment of SGI to the European software research community. These resources, including SGI application software and supercomputing hardware expertise, will assist scientists as they explore issues related to climate change, weather forecasting, and environmental research among many other topics. The post SGI Opens European Research Centre at ICHEC in Ireland appeared first on insideHPC.


by Rich Brueckner on (#1S0MT)

"Galaxies are complex: many physical processes operate simultaneously, and over a huge range of scales in space and time. As a result, accurately modeling the formation and evolution of galaxies over the lifetime of the universe presents tremendous technical challenges. In this talk I will describe some of the important unanswered questions regarding galaxy formation, discuss in general terms how we simulate the formation of galaxies on a computer, and present simulations (and accompanying published results) that the Enzo collaboration has recently done on the Blue Waters supercomputer. In particular, I will focus on the transition from metal-free to metal-enriched star formation in the universe, as well as the luminosity function of the earliest generations of galaxies and how we might observe it with the upcoming James Webb Space Telescope." The post Simulating the Earliest Generations of Galaxies with Enzo and Blue Waters appeared first on insideHPC.


by Rich Brueckner on (#1S0BZ)

Karl Schulz from Intel presented this talk at the 4th Annual MVAPICH User Group meeting. "Today, many supercomputing sites spend considerable effort aggregating a large suite of open-source projects on top of their chosen base Linux distribution in order to provide a capable HPC environment for their users. This presentation will introduce a new, open-source HPC community (OpenHPC) that is focused on providing HPC-centric package builds for a variety of common building-blocks in an effort to minimize duplication, implement integration testing to gain validation confidence, incorporate ongoing novel R&D efforts, and provide a platform to share configuration recipes from a variety of sites." The post OpenHPC – Community Building Blocks for HPC Systems appeared first on insideHPC.


by MichaelS on (#1S08N)

Coming in the second half of 2016: The HPE Apollo 6500 System provides the tools and the confidence to deliver high performance computing (HPC) innovation. The system consists of three key elements: The HPE ProLiant XL270 Gen9 Server tray, the HPE Apollo 6500 Chassis, and the HPE Apollo 6000 Power Shelf. Although final configurations and performance are not yet available, the system appears capable of delivering over 40 teraflop/s double precision, and significantly more in single or half precision modes. The post HPE Solutions for Deep Learning appeared first on insideHPC.


by Rich Brueckner on (#1S05F)

In this podcast, the Radio Free HPC team looks at why it’s so difficult for new processor architectures to gain traction in HPC and the datacenter. Plus, we introduce a new regular feature for our show: The Catch of the Week. The post Radio Free HPC Looks at Alternative Processors for High Performance Computing appeared first on insideHPC.


by Rich Brueckner on (#1RXE3)

In this video, students describe their learning experience at the 2016 PRACE Summer of HPC program in Barcelona. "The PRACE Summer of HPC is a PRACE outreach and training program that offers summer placements at top HPC centers across Europe to late-stage undergraduates and early-stage postgraduate students. Up to twenty top applicants from across Europe will be selected to participate. Participants spend two months working on projects related to PRACE technical or industrial work and produce a report and a visualization or video of their results." The post Students Learn Supercomputing at the Summer of HPC in Barcelona appeared first on insideHPC.


by Rich Brueckner on (#1RXD8)

SC16 has extended the application deadline for its Impact Showcase, a forum designed to show attendees why HPC Matters in the real world. Submissions are now due Sept. 15. The post There’s Still Time to Show Why HPC Matters in the SC16 Impact Showcase appeared first on insideHPC.


by staff on (#1RTXR)

Over at the SC16 Blog, JP Vetters writes that planning for the SCinet high-bandwidth conference network is a multiyear process. "The success of any large conference depends on the, often unseen, hard work of many. During the last quarter century, the SCinet team has strived to perfect its routine so that conference-goers can experience a smoothly run Show." The post SCinet Preps World’s Fastest Network Infrastructure at SC16 appeared first on insideHPC.


by Rich Brueckner on (#1RTVP)

George Slota presented this talk at the Blue Waters Symposium. "In recent years, many graph processing frameworks have been introduced with the goal to simplify analysis of real-world graphs on commodity hardware. However, these popular frameworks lack scalability to modern massive-scale datasets. This work introduces a methodology for graph processing on distributed HPC systems that is simple to implement, generalizable to broad classes of graph algorithms, and scales to systems with hundreds of thousands of cores and graphs of billions of vertices and trillions of edges." The post Extreme-scale Graph Analysis on Blue Waters appeared first on insideHPC.


by Rich Brueckner on (#1RR3F)

"The Simons Foundation is beginning a new computational science organization called the Flatiron Institute. Flatiron will seek to explore challenging science problems in astrophysics, biology and chemistry. Computational science techniques involve processing and simulation activities and large-scale data analysis. This position is intended to help manage and fully exploit the data and storage resources at Flatiron to further the scientific mission." The post Job of the Week: Data Operations Specialist at the Simons Foundation appeared first on insideHPC.


by Rich Brueckner on (#1RR07)

"This talk will discuss various system performance issues, and the methodologies, tools, and processes used to solve them. The focus is on single systems (any operating system), including single cloud instances, and quickly locating performance issues or exonerating the system. Many methodologies will be discussed, along with recommendations for their implementation, which may be as documented checklists of tools, or custom dashboards of supporting metrics. In general, you will learn to think differently about your systems, and how to ask better questions." The post Video: System Methodology – Holistic Performance Analysis on Modern Systems appeared first on insideHPC.


by Rich Brueckner on (#1RQYG)

Today ACM announced the recipients of the 2016 ACM/IEEE George Michael Memorial HPC Fellowships. The fellowship honors exceptional PhD students throughout the world whose research focus is on high performance computing applications, networking, storage or large-scale data analytics using the most powerful computers that are currently available. The post ACM Announces George Michael Memorial HPC Fellowships appeared first on insideHPC.


by Rich Brueckner on (#1RQTK)

In this video from the 4th Annual MVAPICH User Group, DK Panda from Ohio State University presents: Overview of the MVAPICH Project and Future Roadmap. "This talk will provide an overview of the MVAPICH project (past, present and future). Future roadmap and features for upcoming releases of the MVAPICH2 software family (including MVAPICH2-X, MVAPICH2-GDR, MVAPICH2-Virt, MVAPICH2-EA and MVAPICH2-MIC) will be presented. Current status and future plans for OSU INAM, OEMT and OMB will also be presented." The post Overview of the MVAPICH Project and Future Roadmap appeared first on insideHPC.


by Rich Brueckner on (#1RMHD)

IDC has announced the featured speakers for the next international HPC User Forum. The event will take place Sept. 22 in Beijing, China. The post Speakers Announced for HPC User Forum in Beijing appeared first on insideHPC.


by staff on (#1RMAZ)

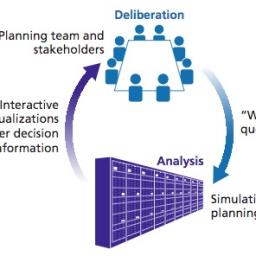

Researchers from the RAND Corporation and LLNL have joined forces to combine HPC with innovative public policy analysis to improve planning for particularly complex issues such as water resource management. By using supercomputer simulations, the participants were able to customize and speed up the analysis guiding the deliberations of decision makers. "In the latest workshop we performed and evaluated about 60,000 simulations over lunch. What would have taken about 14 days of continuous computations in 2012 was completed in 45 mins, about 500 times faster," said Ed Balkovich, senior information scientist at the RAND Corporation, a nonprofit research organization. The post Report: Using HPC for Public Policy Analysis & Water Resource Management appeared first on insideHPC.
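
The quoted speedup can be checked directly from the two durations, assuming "14 days" means 14 full days of continuous wall-clock computation, as the quote states:

```latex
\[
S = \frac{14\ \text{days} \times 24\ \tfrac{\text{h}}{\text{day}} \times 60\ \tfrac{\text{min}}{\text{h}}}{45\ \text{min}}
  = \frac{20\,160\ \text{min}}{45\ \text{min}}
  = 448
\]
```

So "about 500 times faster" is a rounded figure; the ratio of the two times is closer to 450.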


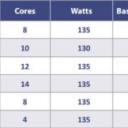

by staff on (#1RM7X)

This week at the Hot Chips conference, Phytium Technology from China unveiled a 64-core CPU and a related prototype computer server. "Phytium says the new CPU chip, with 64-bit arithmetic compatible with ARMv8 instructions, is able to perform 512 GFLOPS at base frequency of 2.0 GHz and on 100 watts of power dissipation." The post Phytium from China Unveils 64-core ARM HPC Processor appeared first on insideHPC.
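
The quoted figures imply a per-core throughput that can be backed out with simple arithmetic. The sketch below divides peak GFLOPS by cores times clock; it assumes the 512 GFLOPS figure is a theoretical peak with every core running at the 2.0 GHz base clock. The announcement does not say which precision the figure refers to, so the breakdown in the final comment is one plausible reading, not a confirmed design detail. The function name is hypothetical.

```python
def flops_per_cycle_per_core(peak_gflops: float, cores: int, clock_ghz: float) -> float:
    """Floating-point operations each core must retire per clock cycle
    to reach the stated peak: GFLOPS / (cores * GHz) = FLOP per cycle."""
    return peak_gflops / (cores * clock_ghz)


if __name__ == "__main__":
    # Figures quoted in the Phytium announcement.
    per_core = flops_per_cycle_per_core(peak_gflops=512, cores=64, clock_ghz=2.0)
    print(f"{per_core:.1f} FLOP per cycle per core")
    # One plausible (unconfirmed) breakdown: one 128-bit ARMv8 fused
    # multiply-add per cycle on two 64-bit doubles = 2 lanes x 2 ops = 4.
```

Running it prints 4.0 FLOP per cycle per core, a modest per-core rate that the chip offsets with its high core count.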


by staff on (#1RM6D)

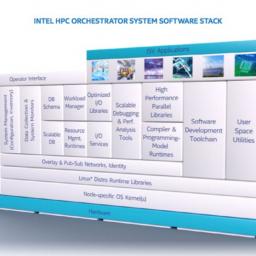

Today Nor-Tech announced the imminent rollout of clusters integrated with the Intel HPC Orchestrator. HPC Orchestrator is a licensed, value-add version of OpenHPC that will be supported by Intel and Nor-Tech. The post Nor-Tech to Offer Clusters with Intel HPC Orchestrator appeared first on insideHPC.


by MichaelS on (#1RM1H)

"With up to 72 processing cores, the Intel Xeon Phi processor x200 can accelerate applications tremendously. Each core contains two AVX-512 vector processing units, which speed up floating point performance. This is important for machine learning applications, which in many cases use the Fused Multiply-Add (FMA) instruction." The post Machine Learning and the Intel Xeon Phi Processor appeared first on insideHPC.
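
To make the FMA point concrete, the sketch below computes a theoretical double-precision peak from the 72-core count quoted above. It assumes two AVX-512 vector units per core, 8 double-precision lanes per 512-bit vector, both units issuing one FMA (counted as 2 FLOPs, a multiply and an add) every cycle, and a hypothetical 1.5 GHz clock chosen only for illustration; real sustained clocks under heavy vector load may be lower. The function names are hypothetical.

```python
def dp_flops_per_cycle(vpus_per_core: int = 2,
                       vector_bits: int = 512,
                       element_bits: int = 64,
                       flops_per_fma: int = 2) -> int:
    """Double-precision FLOPs one core can retire per cycle if every
    vector unit issues a fused multiply-add each cycle."""
    lanes = vector_bits // element_bits  # 512 / 64 = 8 doubles per vector
    return vpus_per_core * lanes * flops_per_fma


def peak_tflops(cores: int, clock_ghz: float, flops_per_cycle: int) -> float:
    """Theoretical peak in TFLOPS: cores * GHz * FLOP/cycle gives GFLOPS."""
    return cores * clock_ghz * flops_per_cycle / 1000.0


if __name__ == "__main__":
    per_core = dp_flops_per_cycle()  # 2 units * 8 lanes * 2 ops = 32
    # 1.5 GHz is a hypothetical clock used only for illustration.
    print(f"{per_core} DP FLOP/cycle/core, "
          f"{peak_tflops(72, 1.5, per_core):.3f} TFLOPS peak for 72 cores")
```

Setting `flops_per_fma=1` models separate multiply and add instructions and halves the result to 16 FLOP per cycle per core. This is why FMA-heavy kernels, such as the dense linear algebra common in machine learning, get the most out of these vector units.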


by staff on (#1RHC8)

"Spack is like an app store for HPC," says Todd Gamblin, its creator and lead developer. "It’s a bit more complicated than that, but it simplifies life for users in a similar way. Spack allows users to easily find the packages they want, it automates the installation process, and it allows contributors to easily share their own build recipes with others." Gamblin is a computer scientist in LLNL’s Center for Applied Scientific Computing and works with the Development Environment Group at Livermore Computing. The post Spack Tool Eases Transition to Next-Gen Scientific Simulations appeared first on insideHPC.


by Rich Brueckner on (#1RGS6)

"Clear trends in the past and current petascale systems (i.e., Jaguar and Titan) and the new generation of systems that will transition us toward exascale (i.e., Aurora and Summit) outline how concurrency and peak performance are growing dramatically; however, I/O bandwidth remains stagnant. In this talk, we explore challenges when dealing with I/O-ignorant high performance computing systems and opportunities for integrating I/O awareness in these systems." The post Video: Exploring I/O Challenges at Exascale appeared first on insideHPC.


by staff on (#1RGKM)

"High performance computing continues to underwrite the progress of research using computational methods for the analysis and modeling of complex phenomena," said Vint Cerf and John White, ACM Award Committee co-chairs, in a statement. "This year’s finalists illustrate the key role that high performance computing plays in 21st Century research. The Gordon Bell Award committee has worked diligently to select from many choices, those most deserving of recognition for this year. Like everyone else, we will be eager to learn which of the nominees takes the top prize for 2016." The post Finalists Announced for Gordon Bell Prize in HPC appeared first on insideHPC.


by staff on (#1RGEP)

Today Mellanox announced the opening of its new APAC headquarters and solutions centre in Singapore. The Company’s new APAC headquarters will feature a technology solution centre for showcasing the latest technologies from Mellanox, in addition to an executive briefing facility. The solution centre will feature the innovative solutions enabled by latest Mellanox technologies including HPC, Cloud, Big Data, and storage. The post Mellanox Opens New Singapore Headquarters to Strengthen HPC in Asia appeared first on insideHPC.
