|

by staff on (#1P22V)

Today NVM Express, Inc. released the results of its fifth NVM Express (NVMe) Plugfest. The event was a distinct success, with the highest attendance to date. "NVM Express is quickly being adopted as a high performance interface standard for PCIe SSDs, and compatibility among different products is essential for greater market adoption," said Frank Shu, VP of R&D, Verification Engineering & Compatibility Test at Silicon Motion Inc. "We are proud of having our products successfully pass through the NVMe Plugfest in ensuring compliance to the specification as well as interoperability with other NVMe products." The post Record Participation Sets Stage for next NVM Express Plugfest appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-04 10:30 |

|

by Rich Brueckner on (#1P21S)

In this video from PASC16, Annick V. Renevey from ETH Zurich describes her award-winning poster on peptide simulations at CSCS. The post Video: PASC16 Poster on Peptide Simulation appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NZQB)

Today, the National Science Foundation (NSF) announced two major awards to establish Scientific Software Innovation Institutes (S2I2). The awards, totaling $35 million over 5 years, will support the Molecular Sciences Software Institute and the Science Gateways Community Institute, both of which will serve as long-term hubs for scientific software development, maintenance and education. "The institutes will ultimately impact thousands of researchers, making it possible to perform investigations that would otherwise be impossible, and expanding the community of scientists able to perform research on the nation's cyberinfrastructure," said Rajiv Ramnath, program director in the Division of Advanced Cyberinfrastructure at NSF. The post NSF to Invest $35 million in Scientific Software appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NZP4)

Today the University of Maryland (UMD) and the U.S. Army Research Laboratory (ARL) announced a strategic partnership to provide HPC resources for use in higher education and research communities. As a result of this partnership, students, professors, engineers and researchers will have unprecedented access to technologies that enable scientific discovery and innovation. The post University of Maryland and U.S. Army Research Lab to Collaborate on HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NWV5)

The Department of Energy’s Oak Ridge National Laboratory will add its computational know-how to the battle against cancer through several new projects recently announced at the White House Cancer Moonshot Summit. "ORNL brings to the table our world-class resources in high-performance computing, including the Titan supercomputer, as well as leading experts in the data sciences and neutron analysis, to the fight against cancer," said ORNL’s Joe Lake, deputy for operations at the HDSI. The post ORNL Supercomputers to Boost National Cancer Moonshot appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NWSC)

Tony Hey from the Science and Technology Facilities Council presented this talk at The Digital Future conference in Berlin. "Increasingly, scientific breakthroughs will be powered by advanced computing capabilities that help researchers manipulate and explore massive datasets. The speed at which any given scientific discipline advances will depend on how well its researchers collaborate with one another, and with technologists, in areas of eScience such as databases, workflow management, visualization, and cloud computing technologies." The post Tony Hey Presents: The Fourth Paradigm – Data-Intensive Scientific Discovery appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NWK4)

This week the White House Office of Science and Technology Policy released the Strategic Plan for the NSCI Initiative. "The NSCI strives to establish and support a collaborative ecosystem in strategic computing that will support scientific discovery and economic drivers for the 21st century, and that will not naturally evolve from current commercial activity," write Altaf Carim, William Polk, and Erin Szulman from the OSTP in a blog post. The post White House Releases Strategic Plan for NSCI Initiative appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NWHD)

In this video from PASC16, Andrew Lumsdaine from Indiana University gives his perspectives on the conference. "The PASC16 Conference, co-sponsored by the Association for Computing Machinery (ACM) and the Swiss National Supercomputing Centre (CSCS), brings together research across the areas of computational science, high-performance computing, and various domain sciences." The post Video: Andrew Lumsdaine on Computing Trends at PASC16 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NT0R)

Today SIGHPC announced the first-ever recipients of the ACM SIGHPC/Intel Computational and Data Science Fellowship. The fellowship is funded by Intel and was announced at the high performance computing community’s SC conference in November of last year. Established to increase the diversity of students pursuing graduate degrees in data science and computational science, the fellowship […] The post 14 Students Awarded SIGHPC/Intel Computational and Data Science Fellowships appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NS7X)

The MVAPICH User Group (MUG) meeting has issued its Call for Participation and Student Travel Support. The event takes place August 15-17 in Columbus, Ohio. "Student travel grant support is available for all students (Ph.D./M.S./Undergrad) from U.S. academic institutions to attend MUG '16 through a funding from the U.S. National Science Foundation (NSF)." The post Call for Participation & Student Travel Support: MVAPICH User Group appeared first on insideHPC.

|

|

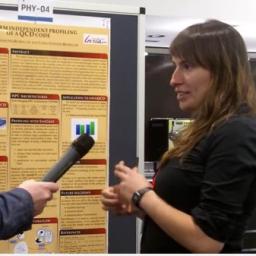

by Rich Brueckner on (#1NS25)

"We present a procedure of implementing the intermediate profiling for openQCD code that will enable the global reduction of the cost of profiling and optimizing this code commonly used in the lattice QCD community. Our approach is based on well-known SimGrid simulator, which allows for fast and accurate performance predictions of the codes on HPC architectures. Additionally, accurate estimations of the program behavior on some future machines, not yet accessible to us, are anticipated."The post Video: Platform Independent Profiling of a QCD Code appeared first on insideHPC.

|

|

by staff on (#1NRZ4)

"OpenPOWER is all about creating a broad ecosystem with opportunities to accelerate your workloads. For the Cognitive Cup, we provide two types of accelerators: GPUs and FPGAs. GPUs are used by the Deep Learning framework to train your neural network. When you want to use the neural network during the “classification†phase, you have a choice of Power CPUs, GPUs and FPGAs."The post Enter Your Machine Learning Code in the Cognitive Cup appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NRV2)

The University of Cincinnati is seeking a Computer & Information Analyst in our Job of the Week. The post Job of the Week: Computer & Information Analyst at University of Cincinnati appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NRSK)

In this video from ISC 2016, Dave Sundstrom from Hewlett Packard Enterprise describes the newly enhanced HPE Software Stack for High Performance Computing. "The HPE Core HPC Software Stack is a complete software set for the creation, optimization, and running of HPC applications. It includes development tools, runtime libraries, a workload scheduler, and cluster management, integrated and validated by Hewlett Packard Enterprise into a single software set. Core HPC Stack uses the included HPC Cluster Setup Tool to simplify and speed the installation of an HPC cluster built with HPE servers." The post Video: HPE Enhances Software Stack for High Performance Computing appeared first on insideHPC.

|

|

by MichaelS on (#1NRM8)

"Native execution is good for application that are performing operations that map to parallelism either in threads or vectors. However, running natively on the coprocessor is not ideal when the application must do a lot of I/O or runs large parts of the application in a serial mode. Offloading has its own issues. Asynchronous allocation, copies, and the deallocation of data can be performed but it complex. Another challenge with offloading is that it requires memory blocking. Overall, it is important to understand the application, the workflow within the application and how to use the Intel Xeon Phi coprocessor most effectively."The post Offloading vs Native Execution on Intel Xeon Phi Coprocessors appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NNQN)

"It's great to have these incredible servers and incredible processors, but if you don't have the people to run them - if you don't have the people that are passionate about supercomputing, we would never get there from here."Behind all of this magnificent technology are the fantastic faculty, researchers, interns, our corporate partners that are part of this, the National Science Foundation, there are people behind all of the success of the TACC. I think that's the point we can never forget."The post Podcast: UT Chancellor William McCraven on What Makes TACC Successful appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NNET)

"With our latest innovations incorporating Intel Xeon Phi processors in a performance and density optimized Twin architecture and 100Gbps OPA switch for high bandwidth connectivity, our customers can accelerate their applications and innovations to address the most complex real world problems."The post Supermicro Now Shipping Intel Xeon Phi Systems with Omni-Path appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NN7N)

Olaf Weber from SGI presented this talk at LUG 2016. "In collaboration with Intel, SGI set about creating support for multiple network connections to the Lustre filesystem, with multi-rail support. With Intel Omni-Path and EDR InfiniBand driving to 200Gb/s or 25GB/s per connection, this capability will make it possible to start moving data between a single SGI UV node and the Lustre file system at over 100GB/s." The post Video: Matching the Speed of SGI UV with Multi-rail LNet for Lustre appeared first on insideHPC.
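The bandwidth figures quoted above can be checked with a little arithmetic. The sketch below is only an illustration: the function names are made up for this example, and it assumes ideal linear scaling across rails with no protocol overhead, which real multi-rail LNet deployments will not achieve exactly.

```python
import math


def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert a link rate in gigabits per second to gigabytes per second."""
    return gbit_per_s / 8


def rails_needed(target_gbyte_per_s: float, per_rail_gbyte_per_s: float) -> int:
    """Smallest number of rails whose combined ideal rate reaches the target.

    Assumes perfect scaling across rails (an idealization for illustration).
    """
    return math.ceil(target_gbyte_per_s / per_rail_gbyte_per_s)


# 200 Gb/s per connection, as quoted, is 25 GB/s.
per_rail = gbit_to_gbyte(200)

# Rails required to reach 100 GB/s under the ideal-scaling assumption.
rails = rails_needed(100, per_rail)

print(per_rail, rails)
```

Under these assumptions four connections reach exactly 100 GB/s, so sustaining more than 100 GB/s would take additional rails or headroom.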

|

|

by MichaelS on (#1NN66)

Deep learning is a method of creating artificial intelligence systems that combine computer-based multi-layer neural networks with intensive training techniques and large data sets to enable analysis and predictive decision making. A fundamental aspect of deep learning environments is that they transcend finite programmable constraints to the realm of extensible and trainable systems. Recent developments in technology and algorithms have enabled deep learning systems to not only equal but to exceed human capabilities in the pace of processing vast amounts of information. The post The Industrialization of Deep Learning – Intro appeared first on insideHPC.

|

|

by staff on (#1NN4Y)

Today the FlyElephant team announced the release of the FlyElephant 2.0 platform for High Performance Computing. Version 2.0 enhancements include: internal expert community, collaboration on projects, public tasks, Docker and Jupyter support, a new file storage system and work with HPC clusters. The post FlyElephant 2.0 Improves HPC Collaboration Features appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NJ7C)

This week, the Helix Nebula Science Cloud (HNSciCloud) launched a €5.3 million tender for the establishment of a European hybrid cloud platform. The purpose of the platform is to support the deployment of high-performance computing and big-data capabilities for scientific research. "The European Cloud Initiative will unlock the value of big data by providing world-class supercomputing capability, high-speed connectivity and leading-edge data and software services for science, industry and the public sector," said Günther H. Oettinger, Commissioner for the Digital Economy and Society. The post Seeking Bids for Helix Nebula Science Cloud appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NHMB)

In this CCTV video, Alberto Alonso describes his new supercomputer, Breogan, that has the ability to modernize Mexico's antiquated stock market. Since it was launched in February, the Breogan computer has generated $475,000 for his company GACS. The algorithm it uses is much faster than the computers in Mexico’s stock exchange. "What makes the Breogan computer so unique, is that it finds attractive opportunities in the market and it buys and sells automatically when it sees a trading opportunity." The post Video: Breogan Supercomputer Brings High Speed Trading to Mexico appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NHJV)

Today NERSC announced plans to host a new, data-centric event called Data Day. The main event will take place on August 22, followed by a half-day hackathon on August 23. The goal: to bring together researchers who use, or are interested in using, NERSC systems for data-intensive work. The post NERSC to Host Data Day on August 22 appeared first on insideHPC.

|

|

by staff on (#1NH9R)

Today Seagate unveiled a first-of-its-kind, high-capacity drive that can help data centers more easily accommodate exponential data growth, while still maintaining high levels of computing power and performance. The two terabyte (TB) version of its Nytro XM1440 M.2 non-volatile memory express (NVMe) Solid State Drive (SSD) is the highest-capacity, enterprise-class M.2 NVMe SSD available today, making it well suited for demanding enterprise applications that require fast data access, capacity and processing. “This latest version of the Nytro XM1440 M.2 NVMe SSD is the first of its kind and pushes the boundaries for enterprises, so they don’t have to sacrifice speed for access and availability. They can continue to scale operations in an efficient manner and still get the most value from their data, but without the extra overhead.” The post Seagate Announces Industry-First 2TB M.2 Enterprise SSD appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NGNK)

At ISC 2016, HPE introduced new high-performance computing solutions that aim to accelerate HPC adoption by enabling faster time-to-value and increased competitive differentiation through better parallel processing performance, reduced complexity, and faster deployment time. "By combining these latest advancements in Intel Scalable System Framework with the scalability, flexibility and manageability of the HPE Apollo portfolio, customers will gain new levels of performance, efficiency and reliability. In addition, customers will be able to run HPC applications in a massively parallel manner with minimal code modification." The post Video: Hewlett Packard Enterprise Moves Forward with HPC Strategy at ISC 2016 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NE6E)

Jeff Layton from Amazon presented this talk at the AWS Public Sector Summit. "Learn how to build HPC clusters on the fly, leverage Amazon’s Spot market pricing to minimize the cost of HPC jobs, and scale HPC jobs on a small budget, using all the same tools you use today, and a few new ones too." The post Video: Building HPC Clusters as Code in the (Almost) Infinite Cloud appeared first on insideHPC.

|

|

by staff on (#1NE2A)

Biologists and mathematicians from the Moscow Institute of Physics and Technology (MIPT) have accelerated the rate at which a computer can predict the structure of protein complexes in a cell. "The new method enables us to model the interaction of proteins at the genome level. This will give us a better understanding of how our cells function and may enable drug development for diseases caused by 'incorrect' protein interactions," commented Dima Kozakov, a professor at Stony Brook and adjunct professor at MIPT. The post MIPT in Moscow Develops New Method of Calculating Protein Interaction appeared first on insideHPC.

|

|

by staff on (#1NDZN)

Today Mellanox announced it has received the Award for Technology Innovation from Baidu, Inc. The award recognizes Mellanox's achievements in designing and delivering a high-performance, low latency interconnect technology solution that positively impacts Baidu's business. Mellanox Technologies received the award at the 2016 Baidu Datacenter Partner Conference, Baidu's annual gathering of key datacenter partners, and was the only interconnect provider in this category. The post Mellanox Receives Baidu Award for Innovation in Machine Learning appeared first on insideHPC.

|

|

by Rich Brueckner on (#1NDXJ)

"Inria teams have been developing runtime systems and compiler techniques for parallel programming over several decades.†says Olivier Aumage, researcher at Inria's team STORM, “By joining the OpenMP ARB today, Inria looks forward to contribute this expertise in making OpenMP meet the challenges of the Exascale eraâ€.The post Inria Joins OpenMP ARB appeared first on insideHPC.

|

|

by staff on (#1NDXM)

In this special guest feature, Rob Farber writes that a study done by Kyoto University Graduate School of Medicine shows that code modernization can help Intel Xeon processors outperform GPUs on machine learning code. "The Kyoto results demonstrate that modern multicore processing technology now matches or exceeds GPU machine-learning performance, but equivalently optimized software is required to perform a fair benchmark comparison. For historical reasons, many software packages like Theano lacked optimized multicore code as all the open source effort had been put into optimizing the GPU code paths." The post Superior Performance Commits Kyoto University to CPUs Over GPUs appeared first on insideHPC.

|

|

by Robert Jolly on (#1NAXT)

MSC Software has announced a partnership with the Campania Region Technological Aerospace District to launch the SIMULAB Project (Simulation Laboratory for the Development of Aeronautical Programs). MSC will join efforts with DAC (Campania Aerospace Cluster), Formit, Sixtema, and CIRA (an Italian aerospace research center) to accelerate the development of companies involved in the Italian Aerospace industry. The post SIMULAB Project to Advance Aerospace Simulation appeared first on insideHPC.

|

|

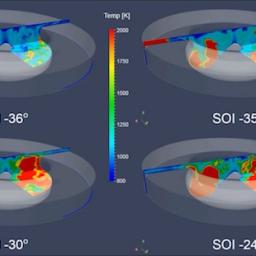

by staff on (#1NAWE)

Researchers are using Argonne supercomputers to jump-start internal-combustion engine designs in the name of conservation. “Improving engine efficiencies by even a few percentage points can take a big chunk out of our carbon footprint. We are working on a proof-of-concept to demonstrate how simulating several thousand engine configurations simultaneously can really help engineers zero in on the optimum engine designs and operating strategies to maximize efficiency while minimizing harmful emissions.” The post Supercomputing Better Engines at Argonne appeared first on insideHPC.

|

|

by Rich Brueckner on (#1N8GN)

In this Intel Chip Chat podcast, Allyson Klein and Charlie Wuischpard describe Intel’s investment to break down walls to HPC adoption and move innovation forward by thinking at a system level. "Charlie discusses the announcement of the Intel Xeon Phi processor, which is a foundational element of Intel Scalable System Framework (Intel SSF), as well as Intel Omni-Path Fabric. Charlie also explains that these enhancements will make supercomputing faster, more reliable, and increase efficient power consumption; Intel has achieved this by combining the capabilities of various technologies and optimizing ways for them to work together." The post Podcast: Intel Scalable System Framework Moves HPC Forward at the System Level appeared first on insideHPC.

|

|

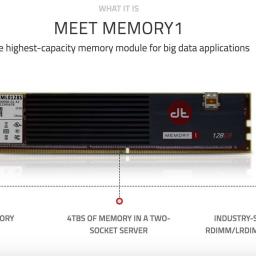

by Rich Brueckner on (#1N86Z)

"Memory1 provides the highest-capacity system memory solution on the market, enabling massive increases in server and application capability. Diablo’s JEDEC-compliant, flash-based DIMMs, interface seamlessly with existing server architectures and require no changes to hardware, operating systems, or applications. Memory1 is ideal for environments that require large memory footprints for workloads such as big data analytics, caching, and complex web applications."The post Diablo Technologies Memory1 Goes into Production appeared first on insideHPC.

|

|

by Rich Brueckner on (#1N5SM)

Today Deloitte Advisory and Cray introduced the first commercially available high-speed, supercomputing threat analytics service. Called Cyber Reconnaissance and Analytics, the subscription-based offering is designed to help organizations effectively discover, understand and take action to defend against cyber adversaries. The post Cray Teams With Deloitte to Combat Cybercrime appeared first on insideHPC.

|

|

by staff on (#1N5PK)

Dassault Systèmes, a provider of product lifecycle management (PLM) solutions, has announced that it will acquire Computer Simulation Technology (CST), a specialist in electromagnetic (EM) and electronics simulation, for approximately 220 million euros. With the acquisition of CST, based near Frankfurt, Germany, Dassault Systèmes will complement its industry solution experiences for realistic multiphysics simulation on its 3DEXPERIENCE platform with full spectrum EM simulation. The post Dassault to Acquire Computer Simulation Technology (CST) in Germany appeared first on insideHPC.

|

|

by staff on (#1N5N3)

Researchers at DKRZ are using supercomputers to better understand the movement of sea ice. "Sea ice is an important component of the Earth System, which is often being discussed in terms of integrated quantities such as Arctic sea ice extent and volume." The post Simulating Sea Ice Leads at DKRZ appeared first on insideHPC.

|

|

by Rich Brueckner on (#1N5J6)

"We are pioneering the area of virtualized clusters, specifically with SR-IOV,†said Philip Papadopoulos, SDSC’s chief technical officer. “This will allow virtual sub-clusters to run applications over InfiniBand at near-native speeds – and that marks a huge step forward in HPC virtualization. In fact, a key part of this is virtualization for customized software stacks, which will lower the entry barrier for a wide range of researchers by letting them project an environment they already know onto Comet.â€The post Video: Mellanox Powers Open Science Grid on Comet Supercomputer appeared first on insideHPC.

|

|

by Rich Brueckner on (#1N5F7)

The first Joint International Workshop on Parallel Data Storage and Data Intensive Scalable Computing Systems (PDSW-DISCS’16) has issued its Call for Papers. As a one-day event held in conjunction with SC16, the workshop will combine two overlapping communities to address some of the most critical challenges for scientific data storage, management, devices, and processing infrastructure. To learn more, we caught up with workshop co-chairs Dean Hildebrand (IBM) and Shane Canon (LBNL). The post Interview: PDSW-DISCS’16 Workshop to Focus on Data-Intensive Computing at SC16 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1N2JF)

IDC has published the preliminary agenda for their next HPC User Forum. The event will take place Sept. 6-8 in Austin, Texas. The post Preliminary Agenda Posted for HPC User Forum in Austin, Sept. 6-8 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1N241)

In this Intel Chip Chat podcast with Allyson Klein, Cray CTO Steve Scott describes the collaboration between Cray and Intel on the Intel Xeon Phi Processor for supercomputer integration. Steve highlights that Cray chose to implement the new Intel Xeon Phi Processor for its supercomputers because of the potential to support a diverse array of customer needs and deliver the best performance per application. He emphasizes that Cray software tools are key to optimizing Intel Xeon Phi processor performance at the system level. The post Podcast: Steve Scott on How Intel Xeon Phi is Fueling HPC Innovation at Cray appeared first on insideHPC.

|

|

by staff on (#1N20D)

ARM processors will provide the computational muscle behind one of the most powerful supercomputers in the world, replacing the current K computer at the RIKEN Advanced Institute for Computational Science (AICS) in Japan. At the ISC conference, Fujitsu released details of the new system during a presentation with Fujitsu vice president Toshiyuki Shimizu. Shimizu stated that the "post K" system, which is set to go live in 2020, will have 100 times more application performance than the K supercomputer. The post ARM to Power New RIKEN Supercomputer appeared first on insideHPC.

|

|

by staff on (#1N1RP)

Today Mellanox announced that the University of Tokyo has selected the company's Switch-IB 2 EDR 100Gb/s InfiniBand Switches and ConnectX-4 adapters to accelerate its new supercomputer for computational science. The post University of Tokyo Selects Mellanox EDR InfiniBand appeared first on insideHPC.

|

|

by MichaelS on (#1N1ND)

Offloading to a coprocessor does need to be considered carefully, due to the memory transfer requirements. When the data that is to be worked on resides in the memory of the main system, that data must be transferred to the coprocessor’s memory. The challenge arises because memory is not physically shared between the main system and the coprocessor.
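The trade-off described above can be sketched as a simple cost model: offloading pays off only when transfer time plus coprocessor compute time is less than the time to compute on the host. Everything in this sketch is a hypothetical illustration; the function names, the link bandwidth, and the timings are assumptions chosen for the example, not measurements or part of any Intel API.

```python
def offload_time(bytes_in: float, bytes_out: float,
                 link_bytes_per_s: float, device_compute_s: float) -> float:
    """Estimated wall time for an offloaded region.

    Data must be copied to the coprocessor and results copied back,
    because memory is not shared between host and coprocessor.
    """
    transfer_s = (bytes_in + bytes_out) / link_bytes_per_s
    return transfer_s + device_compute_s


def worth_offloading(host_compute_s: float, bytes_in: float, bytes_out: float,
                     link_bytes_per_s: float, device_compute_s: float) -> bool:
    """True when the offloaded estimate beats computing on the host."""
    total = offload_time(bytes_in, bytes_out, link_bytes_per_s, device_compute_s)
    return total < host_compute_s


# Hypothetical numbers: 6 GB in, 2 GB out, an assumed 8 GB/s link,
# and 1 second of compute on the coprocessor.
estimate = offload_time(6e9, 2e9, 8e9, 1.0)
print(estimate)                                      # transfer + compute, in seconds
print(worth_offloading(3.0, 6e9, 2e9, 8e9, 1.0))     # host takes 3.0 s
print(worth_offloading(1.5, 6e9, 2e9, 8e9, 1.0))     # host takes 1.5 s
```

With these assumed numbers the transfer alone costs as much as the coprocessor's compute time, so a kernel that already runs in 1.5 seconds on the host would not benefit from offloading. The model ignores overlap of transfers with computation, which asynchronous offload can exploit.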

|

|

by Rich Brueckner on (#1N1J0)

Today DDN announced that The Scripps Research Institute (TSRI), one of the world’s largest independent organizations focusing on biomedical research, has deployed DDN’s end-to-end data management solutions, including high performance SFA7700X file storage automatically tiered to WOS object storage archive, to support fast analysis and cost-effective retention of research data produced by cryo-electron microscopy (Cryo-EM). The post Scripps Leverages DDN Storage to Research New Medical Treatments appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MYK9)

While the National Labs are known for their supercomputers, some are also tasked with helping US industry advance digital manufacturing. The 3D printed car and Jeep projects were done to demonstrate Oak Ridge’s Big Area Additive Manufacturing technology, which the lab says could bring a whole new meaning to the phrase “rapid prototyping.” A new report by a 3D printing service called Sculpteo offers some insight into who is using 3D printing. They surveyed 1,000 respondents from 19 different industries online from late January to late March 2016. The post 3D Printing Survey Provides Insight on First Adopters appeared first on insideHPC.

|

|

by staff on (#1MYB3)

"We saw a disconnect in the industry between rapidly growing organizations that could really benefit from HPC clusters and the solutions that were on the market," said Nor-Tech President and CEO David Bollig. "Our goal was to develop a powerful, scalable cluster that was in itself affordable, but also didn’t require hefty, recurring software licensing fees.â€The post Nor-Tech & ESI Partner on Affordable Clusters for OpenFOAM appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MY99)

In this video from ISC 2016, Greg Schmidt from Hewlett Packard Enterprise describes the new Apollo 6500 server. With up to eight high performance NVIDIA GPUs designed for maximum transfer bandwidth, the HPE Apollo 6500 is purpose-built for HPC and deep learning applications. Its high ratio of GPUs to CPUs, dense 4U form factor and efficient design enable organizations to run deep learning recommendation algorithms faster and more efficiently, significantly reducing model training time and accelerating the delivery of real-time results, all while controlling costs. The post Video: HPE Apollo 6500 Takes GPU Density to the Next Level appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MY62)

CoolIT Systems in New York is seeking an Enterprise Account Manager with experience in Data Center infrastructure in our Job of the Week. The post Job of the Week: Enterprise Account Manager at CoolIT Systems in New York appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MY2A)

In this slidecast, Alexander Lidow from EPC describes how the company is leading a technological revolution with Gallium Nitride (GaN). More efficient than silicon as a basis for electronics, GaN could save huge amounts of energy in the datacenter and has the potential to fuel the computer industry beyond Moore's Law. "Due to its superior switching speeds and smaller footprint, Texas Instruments is working with EPC to build a simpler topology that achieves better efficiency with smaller footprints and significantly lower cost." The post Slidecast: Rethinking Server Power Architecture in the Post-Silicon World appeared first on insideHPC.

|