Ed Catmull is legendary in the fields of computer graphics and animation; he was an important researcher in 3D computer graphics in the '70s, became head of Lucasfilm's Pixar computer animation division, and has essentially remained in that role ever since, through the sale/spinoff of Pixar to Steve Jobs in 1986, its years as an independent producer of feature-length animated films, and its acquisition by Disney in 2006. He's just published a book on creative leadership.

While Catmull has lots of fans in Silicon Valley and beyond, he's emerging as a key figure in an antitrust lawsuit by employees over the 'gentlemen's agreement' among a handful of companies, including Apple, Google, Intel, and Pixar, to avoid recruiting each other's employees, thus avoiding a bidding war on talent. Emails recovered during the discovery phase of an ongoing class action lawsuit reveal that Catmull was a zealous enforcer of the pact among digital animation studios, including Pixar, Lucasfilm/ILM, and DreamWorks; at one point, after Pixar was acquired by Disney, he even wrote an email persuading Disney Studios Chairman Dick Cook to put the arm on a sister Disney studio that was poaching DreamWorks employees:

I know that Zemeckis' company will not target Pixar, however, by offering higher salaries to grow at the rate they desire, people will hear about it and leave. We have avoided wars up in Norther[n] California because all of the companies up here - Pixar, ILM [Lucasfilm], Dreamworks, and couple of smaller places [sic]- have conscientiously avoided raiding each other.

The Catmull emails also reveal that Sony was recruited to join the pact/cartel, but Sony refused to play ball. This seemed to raise Catmull's testosterone level a bit. Catmull to Cook again:

Just this last week, we did have a recruiter working for ILM [Lucasfilm] approach some of our people. We called to complain and the recruiter immediately stopped. This kind of relationship has helped keep the peace in the Bay Area and it is important that we continue to use restraint.

Now that Sony has announced their intentions with regard to selling part of their special effects business, and given Sony's extremely poor behavior in its recruiting practices, I would feel very good about aggressively going after Sony people.

In his deposition, Catmull said he never followed through with the threat to go after Sony's employees.

(I saw this story on OSNews, where it drew a fair number of comments.)

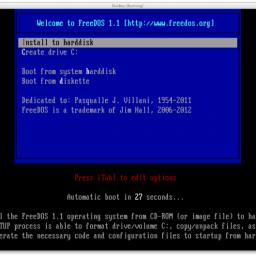

Who cares that it's 20 years old: FreeDOS is still around, fulfilling an interesting and valuable role in the world of tech, and what's more, is ardently supported and appreciated by a loyal core of users and developers. Sean Gallagher over at Ars Technica interviews the FreeDOS lead developer, Jim Hall, to find out why FreeDOS still fills a niche:
