|

by Rich Brueckner on (#30Q9V)

"There were several other ways of doing generative models that had been popular for several years before I had the idea for GANs. And after I'd been working on all those other methods throughout most of my Ph.D., I knew a lot about the advantages and disadvantages of all the other frameworks like Boltzmann machines and sparse coding and all the other approaches that have been really popular for years. I was looking for something that avoided all these disadvantages at the same time." The post Heroes of Deep Learning: Andrew Ng interviews Ian Goodfellow from Google Brain appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Field | Value |
|---|---|
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-03 22:30 |

|

by staff on (#30N3A)

Today Embry-Riddle Aeronautical University announced it has deployed a Cray CS400 supercomputer. The four-cabinet system will power collaborative applied research with industry partners at the University’s new research facility, the John Mica Engineering and Aerospace Innovation Complex ("MicaPlex") at Embry-Riddle Research Park. The post Embry-Riddle University Deploys Cray CS Supercomputer for Aerospace appeared first on insideHPC.

|

by Rich Brueckner on (#30M04)

Today ACM and IEEE announced that Shaden Smith of the University of Minnesota and Yang You of the University of California, Berkeley are the recipients of the 2017 ACM/IEEE-CS George Michael Memorial HPC Fellowships. Smith is being recognized for his work on efficient and parallel large-scale sparse tensor factorization for machine learning applications. You is being recognized for his work on designing accurate, fast, and scalable machine learning algorithms on distributed systems. The post Researchers in Machine Learning Awarded George Michael Memorial HPC Fellowships appeared first on insideHPC.

|

by Rich Brueckner on (#30M05)

The LAD'17 conference has posted its meeting agenda. Also known as the Lustre Administrator and Developer Workshop, the event takes place Oct. 4-5 in Paris. "EOFS and OpenSFS are organizing the seventh European Lustre workshop. This will be a great opportunity for Lustre worldwide administrators and developers to gather and exchange their experiences, developments, tools, good practices and more." The post Agenda Posted for LAD’17 in Paris appeared first on insideHPC.

|

by staff on (#30KW3)

Researchers are tapping Argonne and NCSA supercomputers to tackle the unprecedented amounts of data involved with simulating the Big Bang. "Researchers performed cosmological simulations on the ALCF’s Mira supercomputer, and then sent huge quantities of data to UI’s Blue Waters, which is better suited to perform the required data analysis tasks because of its processing power and memory balance." The post Illinois Supercomputers Tag Team for Big Bang Simulation appeared first on insideHPC.

|

by Rich Brueckner on (#30KSC)

ScaleMP, a leading provider of virtualization solutions for high-end computing, delivering technology for software-defined computing and software-defined memory products, today announced it has completed a $10 million funding round. The investment follows deeper and broader adoption of ScaleMP’s technology by server and storage OEMs. The funds will further accelerate the company’s growth, enhance the company’s products and technology, and expand ScaleMP’s support for its OEM partners. The post ScaleMP Completes $10M Funding Round appeared first on insideHPC.

|

by staff on (#30KKF)

HPC is becoming a competitive requirement as high performance data analysis (HPDA) joins multi-physics simulation as table stakes for successful innovation across a growing range of industries and research disciplines. Yet complexity remains a very real hurdle for both new and experienced HPC users. Learn how new Intel products, including the Intel HPC Orchestrator, can work to simplify some of the complexities and challenges that can arise in high performance computing environments. The post A Simpler Path to Reliable, Productive HPC appeared first on insideHPC.

|

by Rich Brueckner on (#30GZV)

AnandTech reports that Intel is discontinuing its line of Knights Landing PCIe-based coprocessors. The move is not a surprise given that the company has been producing bootable Xeon Phi processors since early 2016. "At least for now, Intel does not want to compete against add-on PCIe compute accelerators with its Xeon Phi products. A big question is whether it actually needs to, given the stand-alone capabilities of Xeon Phi and its performance characteristics." The post Intel Discontinues Xeon Phi 7200-Series Knights Landing Coprocessors appeared first on insideHPC.

|

by Rich Brueckner on (#30GPJ)

Today VMware introduced vSphere Scale-Out Edition, a new solution in the vSphere product line aimed at Big Data and HPC workloads. VMware vSphere Scale-Out Edition includes the features and functions most useful to Big Data and HPC workloads, such as those provided by the core vSphere hypervisor and the vSphere Distributed Switch. "This new solution also enables the ability to rapidly change and provision compute nodes. The solution will be offered at an attractive price point, optimized for Big Data and HPC environments." The post VMware Rolls Out vSphere Scale-Out Edition for Big Data and HPC Workloads appeared first on insideHPC.

|

by Rich Brueckner on (#30GJT)

In this video, Tuomas Sandholm from Strategic Machine, Inc. and Eng Lim Goh from HPE discuss how High Performance Computing is fast becoming an integral part of our lives. "Our HPC solutions empower innovation at any scale, building on our purpose-built HPC systems and technologies solutions, applications and support services. The results? More power to differentiate your business, drive research and find answers like never before." The post Interview: How HPE is Democratizing High Performance Computing appeared first on insideHPC.

|

by Rich Brueckner on (#30GG5)

In this podcast, the Radio Free HPC team looks at some new developments in the Quantum Computing ecosystem. Last week, IEEE approved a new Standards Project for Quantum Computing Definitions. Meanwhile, Silicon Valley startup Rigetti Computing has teamed up with the CDL incubator to boost the Quantum Machine Learning startup ecosystem. The post Radio Free HPC Catches up with Quantum Computing News appeared first on insideHPC.

|

by Rich Brueckner on (#30E8W)

Yale University is seeking a Sr. Linux System Administrator in our Job of the Week. "In this role, you will work as a Linux senior administrator in ITS Systems Administration. Provide leadership in Linux server administration, for mission-critical services in a dynamic, 24/7 production data center environment." The post Job of the Week: Senior Linux System Administrator at Yale appeared first on insideHPC.

|

by staff on (#30E4Q)

The Advances in Machine Learning to Improve Scientific Discovery at Exascale and Beyond (ASCEND) project aims to use deep learning to assist researchers in making sense of massive datasets produced at the world's most sophisticated scientific facilities. Deep learning is an area of machine learning that uses artificial neural networks to enable self-learning devices and platforms. The team, led by ORNL's Thomas Potok, includes Robert Patton, Chris Symons, Steven Young and Catherine Schuman. The post Oak Ridge Turns to Deep Learning for Big Data Problems appeared first on insideHPC.

|

by staff on (#30BXF)

"Modern HPC systems do some things very similar to ordinary IT computing, but they also have some significant differences. Two key security challenges are the notions that traditional security solutions often are not effective given the paramount priority of high-performance in HPC. In addition, the need to make some HPC environments as open as possible to enable broad scientific collaboration and interactive HPC also presents a challenge." The post Video: Security in HPC Environments appeared first on insideHPC.

|

by Rich Brueckner on (#30BXH)

HPE has posted the preliminary agenda for HP-CAST at SC17 in Denver. Now open for registration, the event takes place Nov. 10-11 at the Grand Hyatt hotel.

|

by Rich Brueckner on (#30980)

Dr. Erwin Laure from the PDC Center for HPC in Sweden presented this talk at the 4th Human Brain Project School in Austria. The 4th HBP School offered a comprehensive program covering all aspects of software, hardware, simulation, databasing, robotics, machine learning and theory relevant to the HBP research program. The post Video: Introduction to High Performance Computing appeared first on insideHPC.

|

by Rich Brueckner on (#3091P)

In this video from the Heroes of Deep Learning series, Andrew Ng interviews Pieter Abbeel from UC Berkeley. "Work in Artificial Intelligence in the EECS department at Berkeley involves foundational research in core areas of knowledge representation, reasoning, learning, planning, decision-making, vision, robotics, speech and language processing. There are also significant efforts aimed at applying algorithmic advances to applied problems in a range of areas, including bioinformatics, networking and systems, search and information retrieval." The post Heroes of Deep Learning: Andrew Ng interviews Pieter Abbeel appeared first on insideHPC.

|

by staff on (#308YV)

Today the National Science Foundation (NSF) announced $17.7 million in funding for 12 Transdisciplinary Research in Principles of Data Science (TRIPODS) projects, which will bring together the statistics, mathematics and theoretical computer science communities to develop the foundations of data science. Conducted at 14 institutions in 11 states, these projects will promote long-term research and training activities in data science that transcend disciplinary boundaries. "Data is accelerating the pace of scientific discovery and innovation," said Jim Kurose, NSF assistant director for Computer and Information Science and Engineering (CISE). "These new TRIPODS projects will help build the theoretical foundations of data science that will enable continued data-driven discovery and breakthroughs across all fields of science and engineering." The post NSF Announces $17.7 Million Funding for Data Science Projects appeared first on insideHPC.

|

by staff on (#308VE)

Even though SC17 is still more than two months away, this year’s Exhibition is already setting records. "We are excited to report that we have already smashed the record for both net square feet of exhibit space sold as well as the total number of exhibitors," says Bronis R. de Supinski, SC17 Exhibits Chair from Lawrence Livermore National Laboratory. "Whether industry or research – if you have anything to do with high performance computing, you need to have a presence at SC17. The SC exhibition floor is an exciting place to discover the latest innovations and make positive career-impacting connections." The post SC17 Exhibition Setting Records appeared first on insideHPC.

|

by Rich Brueckner on (#305TT)

In this video from AWS Summit in Tel Aviv, Adrian Cockcroft describes how AWS gives Superpowers to Startups. "AWS offers low cost, on-demand IT solutions to help you build and launch your applications quickly and easily with minimum costs. With no upfront investments and a pay-as-you-go price model, you only pay for what you use, when you use it, to build your minimum viable product (MVP), experiment and iterate, all at low cost." The post Adrian Cockcroft on how AWS Gives Superpowers to Startups appeared first on insideHPC.

|

by staff on (#305QW)

While practical Quantum Computing remains somewhere in the future, it is already starting to spark new startup opportunities. "Quantum machine learning will power some of the most impactful and exciting near-term applications of quantum computing. The startups at the CDL are at the leading edge of this technology and will get early access to Rigetti's general-purpose quantum hardware and to Forest, one of the most sophisticated quantum programming environments in the world," said Madhav Thattai, Chief Strategy Officer, Rigetti. "We’re looking forward to working with these pioneering individuals and teams to help create and accelerate the quantum application ecosystem." The post Rigetti Computing helps Foster Quantum Machine Learning Startup Ecosystem appeared first on insideHPC.

|

by staff on (#305KV)

The Ohio Supercomputer Center is helping teachers such as Sultana Nahar, Ph.D., conduct computational workshops as part of their lecture courses. Through physics and STEM courses and workshops, Nahar has been improving the computational skills of the scientific community one person at a time with the hope that more breakthroughs will be made. "One of the most important parts of STEM education and research is computation of parameters for solving real problems," Nahar said. "It’s not easy to calculate and we need very high accuracy in our results, that can only be achieved through precise computations with high performance computer facilities." The post OSC Supercomputers Power STEM Learning Across the Globe appeared first on insideHPC.

|

by MichaelS on (#305GQ)

"While MPI was originally developed for general purpose CPUs and is widely used in the HPC space in this capacity, MPI applications can also be developed and then deployed with the Intel Xeon Phi Processor. With the understanding of the algorithms that are used for a specific application, tremendous performance can be achieved by using a combination of OpenMP and MPI." The post Internode Programming With MPI and Intel Xeon Phi Processor appeared first on insideHPC.

|

by MichaelS on (#2QK6B)

This is the fifth and final entry in an insideHPC series that explores the HPC transition to the cloud and how this move can help create seamless HPC. This series, compiled in a complete Guide, covers cloud computing for HPC, why the OS is important, OpenStack fundamentals and more. The post Moving Toward the Cloud & Seamless HPC appeared first on insideHPC.

|

by Rich Brueckner on (#3037M)

"While Quantum Computing is poised for significant growth and advancement, the emergent industry is currently fragmented and lacks a common communications framework," said Whurley (William Hurley), chair, IEEE Quantum Computing Working Group. "IEEE P7130 marks an important milestone in the development of Quantum Computing by building consensus on a nomenclature that will bring the benefits of standardization, reduce confusion, and foster a more broadly accepted understanding for all stakeholders involved in advancing technology and solutions in the space." The post IEEE Approves Standards Project for Quantum Computing Definitions appeared first on insideHPC.

|

by Rich Brueckner on (#302Y0)

In this Chip Chat podcast, Dr. Naveen Rao describes his work at the Artificial Intelligence Products Group (AIPG) at Intel. "The Intel Nervana Platform includes what you need to design, develop, and deploy state-of-the-art deep learning models. There’s no need for you to download multiple frameworks and libraries and to troubleshoot complex integration issues. Everything you need is included and 'just works' to make building AI solutions as easy as possible." The post Podcast: A Look at the Intel Nervana Platform for AI appeared first on insideHPC.

|

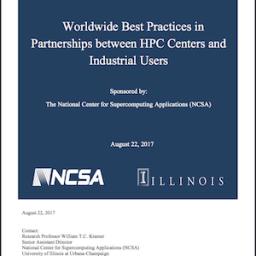

by staff on (#302J2)

Registration is now open for the 2017 NCSA Industry Conference. The event takes place Oct. 11-12 on the University of Illinois at Urbana-Champaign campus. "This year’s conference provides attendees with the opportunity to experience the transformative power of high performance computing and big data as we showcase how the NCSA Industry Program is giving industry and government a competitive edge. Attendees will also get an exclusive look at new resources and collaboration opportunities, the chance to network with business leaders and domain experts, meet the future generation of HPC experts at our student reception, and hear several ground-breaking success stories of how NCSA is enabling computational breakthroughs and changing the world again." The post NCSA Industry Conference to Showcase Supercomputing Partnerships appeared first on insideHPC.

|

by staff on (#302J4)

Today, Intel announced that its AI technology is being used by Microsoft to power its new accelerated deep learning platform, called Project Brainwave. "Project Brainwave achieves a major leap forward in both performance and flexibility for cloud-based serving of deep learning models. We designed the system for real-time AI, which means the system processes requests as fast as it receives them, with ultra-low latency. Real-time AI is becoming increasingly important as cloud infrastructures process live data streams, whether they be search queries, videos, sensor streams, or interactions with users." The post Intel FPGAs Accelerate Microsoft’s Project Brainwave appeared first on insideHPC.

|

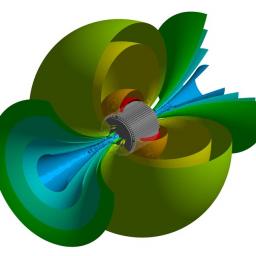

by staff on (#3024F)

Today ANSYS 18.2 was released. This latest release brings increased levels of accuracy, speed and ease-of-use, spurring more engineers to use simulation across every stage of the product lifecycle to design cutting-edge products more efficiently and economically. "Our customers rely on ANSYS engineering simulation technology to cut costs, limit late-stage design changes, and tame the toughest engineering challenges. This latest release continues to build upon the industry's most accurate simulation portfolio, offering enhanced speed and accuracy – enabling more users, no matter their level of experience, to reduce development time and increase product quality." The post ANSYS 18.2 Enhances Simulation Speed and Accuracy appeared first on insideHPC.

|

by Rich Brueckner on (#2ZZWN)

Today NCSA and Hyperion Research released a new study that examines HPC and Industry partnerships. Aimed at identifying and understanding best practices in partnerships between public high performance computing centers and private industry, the study aims to promote the vital transfer of scientific knowledge to industry and the important transfer of industrial experience to the scientific community. The post New Study Uncovers Best Practices for Effective Partnerships between Public HPC Centers and Industrial Users appeared first on insideHPC.

|

by staff on (#2ZZM1)

Today Atos in Europe announced a new reseller agreement under which Dell EMC will resell Atos’ high-end x86 Bullion servers with 8 to 16 sockets. "Over the last few years, Dell EMC and Atos have been working together to combine Bullion servers and Dell EMC unified storage solutions to provide our customers with a leading solution for deployment of mission-critical SAP HANA projects," said Ravi Pendekanti, Senior Vice President at Dell EMC. The post Dell EMC Joins Atos Global Reseller Alliance appeared first on insideHPC.

|

by staff on (#2ZZ91)

Over at the SC17 Blog, Brian Ban begins his series of SC17 Session Previews with a look at a talk on High Performance Big Data. "Deep learning, using GPU clusters, is a clear example but many Machine Learning algorithms also need iteration, and HPC communication and optimizations." The post SC17 Invited Talk Preview: High Performance Machine Learning appeared first on insideHPC.

|

by staff on (#2ZZ26)

"Like the previous disruptions of clusters vs. monolithic systems or Linux vs. proprietary operating systems, cloud changes the status quo, takes us out of our comfort zone, and gives us a sense of lack of control. But the effect of price, the flexibility to dynamically change your system size and choose the best architecture for the job, the availability of applications, the ability to select system cost based on the needs of a particular workload, and the ability to provision and run immediately, will prove very attractive for HPC users." The post Gabriel Broner on Why Cloud is the Next Disruption in HPC appeared first on insideHPC.

|

by Rich Brueckner on (#2ZYZC)

In this video, Piush Patel describes how Intel Xeon Scalable Processors speed up Altair RADIOSS structural simulations with Hyperworks. "RADIOSS is a leading structural analysis solver for highly non-linear problems under dynamic loadings. It is used across all industries worldwide to improve the crashworthiness, safety, and manufacturability of structural designs." The post Intel Xeon Scalable Processors Speed Altair RADIOSS with Hyperworks appeared first on insideHPC.

|

by Beth Harlen on (#2ZYW4)

Frameworks, applications, libraries and toolkits: journeying through the world of deep learning can be daunting. If you’re trying to decide whether or not to begin a machine or deep learning project, there are several points that should first be considered. This is the second article in a five-part series that covers the steps to take before launching a machine learning startup. This article covers popular machine learning technology. The post Machine Learning Technology: A Guide to Scaling Up and Out appeared first on insideHPC.

|

by staff on (#2ZYSC)

Despite the cloud hype, legacy HPC apps are alive and well. While it may seem like they can’t mix, the process of bursting these applications to the cloud is bringing these staples to the cloud table. Avoiding rewrites can efficiently bring immediate HPC cloud benefits to organizations big and small. "Many technologies and solutions now available allow for the functional and highly efficient coordination and connection between legacy applications and the well-known advantages of the cloud." The post Unlike Oil and Water, Legacy and Cloud Mix Well appeared first on insideHPC.

|

by staff on (#2ZW1J)

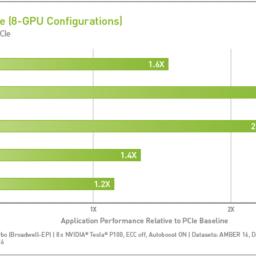

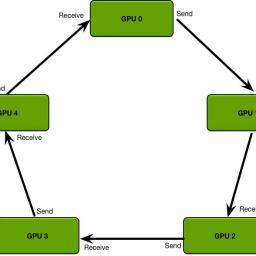

In this video, Andrew Gibiansky from Baidu describes baidu-allreduce, a newly released C library that enables faster training of neural network models across many GPUs. The library demonstrates the allreduce algorithm, which you can embed into any MPI-enabled application. "Neural networks have grown in scale over the past several years, and training can require a massive amount of data and computational resources. To provide the required amount of compute power, we scale models to dozens of GPUs using a technique common in high-performance computing but underused in deep learning." The post Video: Baidu Releases Fast Allreduce Library for Deep Learning appeared first on insideHPC.
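baidu-allreduce is generally described as implementing a ring allreduce, in which each worker exchanges chunks of its buffer only with its neighbor in a logical ring. The sketch below is a hypothetical, single-process Python simulation of that pattern for a sum reduction; it is not the library's C/MPI interface, and `ring_allreduce` and its list-of-buffers input are illustrative assumptions.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a ring allreduce (sum) across len(buffers) workers.

    Hypothetical sketch: worker i's data is buffers[i]; each "send" is a
    list copy inside one process rather than a real network message.
    """
    n = len(buffers)
    length = len(buffers[0])
    assert all(len(b) == length for b in buffers), "buffers must be equal length"
    data = [list(b) for b in buffers]  # copy so the inputs are left untouched

    # Split each buffer into n contiguous chunks; chunk c is [bounds[c], bounds[c+1]).
    bounds = [c * length // n for c in range(n + 1)]

    def chunk(c: int) -> range:
        return range(bounds[c], bounds[c + 1])

    # Phase 1 (scatter-reduce): in step s, worker i sends chunk (i - s) % n to
    # worker (i + 1) % n, which adds it to its own copy. After n - 1 steps,
    # worker i holds the fully summed chunk (i + 1) % n.
    for s in range(n - 1):
        # Snapshot every payload first so all sends in a step happen "at once".
        sends = [(i, (i - s) % n) for i in range(n)]
        payloads = [[data[i][k] for k in chunk(c)] for i, c in sends]
        for (i, c), payload in zip(sends, payloads):
            dst = (i + 1) % n
            for k, v in zip(chunk(c), payload):
                data[dst][k] += v

    # Phase 2 (allgather): in step s, worker i forwards chunk (i + 1 - s) % n,
    # which is already fully summed; the receiver overwrites its stale copy.
    for s in range(n - 1):
        sends = [(i, (i + 1 - s) % n) for i in range(n)]
        payloads = [[data[i][k] for k in chunk(c)] for i, c in sends]
        for (i, c), payload in zip(sends, payloads):
            dst = (i + 1) % n
            for k, v in zip(chunk(c), payload):
                data[dst][k] = v

    return data


if __name__ == "__main__":
    result = ring_allreduce([[1, 2, 3, 4], [10, 20, 30, 40], [100, 200, 300, 400]])
    print(result)  # every worker ends with [111, 222, 333, 444]
```

With n workers, each worker sends n - 1 chunks of size 1/n of the buffer in each of the two phases, about 2(n - 1)/n of the buffer in total, so per-worker traffic stays nearly flat as GPUs are added. A real implementation would overlap these sends and receives over MPI rather than copy lists in one process.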

|

by staff on (#2ZW1M)

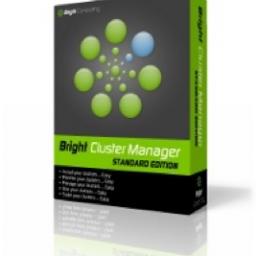

Fayetteville State University is using Bright Cluster Manager for ground-breaking Artificial Intelligence research. Bright Cluster Manager enables the University to deploy complete clusters over bare metal and manage them effectively. Managing the hardware, operating system, HPC software, and its users is done with an elegant easy-to-use graphical user interface. With Bright Cluster Manager, IT/system administrators can quickly get clusters up and running and keep them running reliably throughout their lifecycle. The post Fayetteville State University Leverages Bright Cluster Manager for AI Research appeared first on insideHPC.

|

by Rich Brueckner on (#2ZVTY)

PRACE is offering an online Supercomputing 101 course through the FutureLearn program. This free online course will introduce you to what supercomputers are, how they are used and how we can exploit their full computational potential to make scientific breakthroughs. "Using supercomputers, we can now conduct virtual experiments that are impossible in the real world." The post PRACE Offers Supercomputing 101 Course appeared first on insideHPC.

|

by Rich Brueckner on (#2ZVB3)

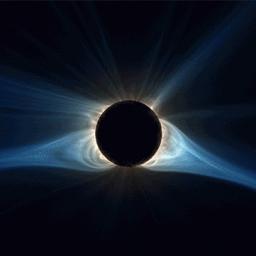

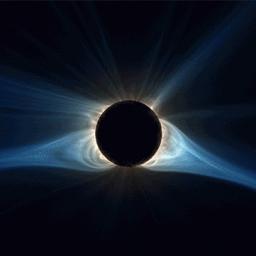

In this podcast, the Radio Free HPC team discusses the eclipse and how researchers are using supercomputers to simulate the corona of the sun at eclipse time. After that, we look at the top technology stories in our Catch of the Week. The post Radio Free HPC Looks at Eclipse Simulations appeared first on insideHPC.

|

by Rich Brueckner on (#2ZS6J)

DownUnder GeoSolutions (America) is seeking a Senior HPC Administrator in our Job of the Week. "If you are passionate about leading edge technology you will love this role. What you will receive, along with a fantastic group of colleagues who will support and encourage you is the chance to be working on one of the largest private clusters in the world!" The post Job of the Week: Senior HPC Administrator at DownUnder GeoSolutions appeared first on insideHPC.

|

by Rich Brueckner on (#2ZS6M)

Geoffrey Hinton from the University of Toronto gave this talk at the Vector Institute. "What is Wrong with 'standard' Convolutional Neural Nets? They have too few levels of structure: Neurons, Layers, and Whole Nets. We need to group neurons in each layer in 'capsules' that do a lot of internal computation and then output a compact result." The post Video: What is Wrong with Convolutional Neural Nets? appeared first on insideHPC.

|

by Rich Brueckner on (#2ZPZX)

"Meeting the Enterprise Challenges of HPC: System developers and users face obstacles deploying complex new HPC technologies, such as: energy efficiency, reliability and resiliency requirements, or developing software to exploit HPC hardware. All can delay HPC adoption. But the HPC Alliance will help. HPE and Intel will collaborate with you on workstream integration, solution sizing and software-to-hardware integration. We will help, whether you seek ease of everything, to mix workloads, or have fast-growing, increasingly complex systems." The post Video: Customers Leverage HPE & Intel Alliance for HPC appeared first on insideHPC.

|

by staff on (#2ZPYC)

The New Zealand eScience Infrastructure (NeSI) is commissioning a new HPC system that will be colocated at two facilities. "The new systems provide a step change in power to NeSI’s existing services, including a Cray XC50 Supercomputer and a Cray CS400 cluster High Performance Computer, both sharing the same high performance and offline storage systems." The post NeSI in New Zealand Installs Pair of Cray Supercomputers appeared first on insideHPC.

|

by staff on (#2ZMG6)

Predictive Sciences ran a large-scale simulation of the Sun’s surface in preparation for a prediction of what the solar corona will look like during the eclipse. "The solar eclipse allows us to see levels of the solar corona not possible even with the most powerful telescopes and spacecraft," said Niall Gaffney, a former Hubble scientist and director of Data Intensive Computing at the Texas Advanced Computing Center. "It also gives high performance computing researchers who model high energy plasmas the unique ability to test our understanding of magnetohydrodynamics at a scale and environment not possible anywhere else." The post Researchers Use TACC, SDSC and NASA Supercomputers to Forecast Corona of the Sun appeared first on insideHPC.

|

by Rich Brueckner on (#2ZM9F)

In this video, you'll learn how to start submitting deep neural network (DNN) training jobs in Azure by using Azure Batch to schedule the jobs to your GPU compute clusters. "Previously, few people had access to the computing power for these scenarios. With Azure Batch, that power is available to you when you need it." The post Video: Deep Learning on Azure with GPUs appeared first on insideHPC.

|

by staff on (#2ZM60)

"Now available as Open Source, PBS Pro software optimizes job scheduling and workload management in high-performance computing environments – clusters, clouds, and supercomputers – improving system efficiency and people’s productivity. Built by HPC people for HPC people, PBS Pro is fast, scalable, secure, and resilient, and supports all modern infrastructure, middleware, and applications." The post RCE Podcast Looks at PBS Professional Job Scheduler appeared first on insideHPC.

|

by Rich Brueckner on (#2ZM31)

SC17 will feature a panel discussion entitled How Serious Are We About the Convergence Between HPC and Big Data? "The possible convergence between the third and fourth paradigms confronts the scientific community with both a daunting challenge and a unique opportunity. The challenge resides in the requirement to support both heterogeneous workloads with the same hardware architecture. The opportunity lies in creating a common software stack to accommodate the requirements of scientific simulations and big data applications productively while maximizing performance and throughput." The post SC17 Panel Preview: How Serious Are We About the Convergence Between HPC and Big Data? appeared first on insideHPC.

|

by staff on (#2ZKZP)

Today SIGHPC announced that Ilkay Altintas is the inaugural winner of the ACM SIGHPC Emerging Woman Leader in Technical Computing Award. "I am thrilled that Dr. Altintas has been selected to receive the inaugural SIGHPC Emerging Woman Leader in Technical Computing Award," commented Dr. Jeffrey Hollingsworth, interim CIO at the University of Maryland and Chair of SIGHPC, in reaction to the award committee’s decision. "Dr. Altintas' work enables technical collaboration among different groups; this is critical not only for high performance computing, but science in general. With leaders like Dr. Altintas, the future of our field is in good hands." The post Dr. Ilkay Altintas to receive ACM SIGHPC Emerging Woman Leader in Technical Computing Award appeared first on insideHPC.

|

by staff on (#2ZHS1)

Oak Ridge National Laboratory is moving equipment into a new high-performance computing center this month which is anticipated to become one of the world’s premier resources for open science computing. "There were a lot of considerations to be had when designing the facilities for Summit," explained George Wellborn, Heery Project Architect. "We are essentially harnessing a small city’s worth of power into one room. We had to ensure the confined space was adaptable for the power and cooling that is needed to run this next generation supercomputer." The post ORNL Readies Facility for 200 Petaflop Summit Supercomputer appeared first on insideHPC.

|