by Rich Brueckner on (#346N9)

Internet2 will host its annual technical meeting, the Technology Exchange, for the research and education community from October 15-18 in San Francisco. The event will convene over 650 attendees from more than 250 institutions, 17 countries, and 46 states, including network engineers, technologists, architects, scientists, operators, and administrators in the fields of advanced networking, trust and identity, information security, applications for research, and web-scale computing. The post Internet2 Technology Exchange Meeting Comes to San Francisco Oct. 15-18 appeared first on insideHPC.

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-03 20:45 |

by staff on (#346NB)

ISC 2018 has issued its Call for Research Papers. "Submissions are now open for the ISC 2018 conference research paper sessions, which aim to provide first-class opportunities for engineers and scientists in academia, industry, and government to present and discuss issues, trends, and results that will shape the future of high performance computing. Submissions will be accepted through Dec. 22, 2017. The research paper sessions will be held from Monday, June 25, through Wednesday, June 27, 2018." The post Call For Research Papers: ISC 2018 appeared first on insideHPC.

by MichaelS on (#346EW)

The product design process has undergone a significant transformation with the availability of supercomputing power at traditional workstation prices. With over 100 threads available to an application in compact two-socket servers, the scalability of applications used in the product design and development process is just a keyboard away for a wide range of engineers. The post Parallel Applications Speed Up Manufacturing Product Development appeared first on insideHPC.

by staff on (#343DH)

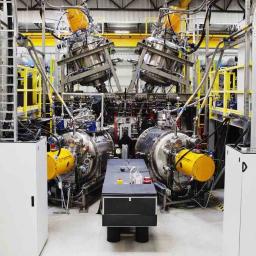

In this video from SC17, researchers discuss the role of HPC in the Nobel Prize-winning discovery of gravitational waves, originally theorized 100 years ago by Albert Einstein in his general theory of relativity. "We are only now beginning to hear the vibrations of space-time that are all around us—we just needed a better ear. And when we detect that, we’re detecting the vibrations of everything that has ever moved in the universe. This is real. This is really there, and we’ve never noticed it until now." The post SC17 Highlights Nobel Prize Winning LIGO Collaboration appeared first on insideHPC.

by staff on (#3436C)

A $1-million upgrade to Clemson University’s Palmetto Cluster is expected to help researchers quicken the pace of scientific discovery and technological innovation in a broad range of fields, from developing new medicines to creating advanced materials. "New hardware that could be in place as early as spring will add even more power to the Palmetto Cluster. Even before the upgrade, it rated eighth in the nation among academic supercomputers, according to the twice-annual TOP500 list of the world’s most powerful computers." The post Clemson to complete $1 million upgrade of Palmetto HPC Cluster appeared first on insideHPC.

by Rich Brueckner on (#3436E)

Hal Gerber from Shiloh Industries gave this talk at the HPC User Forum in Milwaukee. "Shiloh is the global leader in high-integrity, high-vacuum, high-pressure die castings, providing high ductility in aluminum and magnesium. Shiloh Industries is a global innovative solutions provider focusing on 'lightweighting' technologies that provide environmental and safety benefits to the mobility market." The post HPC Powers High Pressure Casting Simulation at Shiloh Industries appeared first on insideHPC.

by staff on (#342ZY)

Today Argonne announced that the ALCF Data Science Program (ADSP) has awarded computing time to four new projects, bringing the total number of ADSP projects for 2017-2018 to eight. All four of the program’s inaugural projects were also renewed. "The new project award recipients include an industry-based deep learning project; a national laboratory-based cosmology workflow project; and two university-based projects: one that uses machine-learning for materials discovery, and a deep-learning computer science project." The post Argonne’s Data Science Program Doubles Down with New Projects appeared first on insideHPC.

by Rich Brueckner on (#34300)

Researchers are using new techniques with HPC to learn more about how the West Nile virus replicates inside the brain. Over several years, Demeler has developed analysis software for experiments performed with analytical ultracentrifuges. "The goal is to facilitate the extraction of all of the information possible from the available data. To do this, we developed very high-resolution analysis methods that require high performance computing to access this information," he said. "We rely on HPC. It's absolutely critical." The post Fighting the West Nile Virus with HPC & Analytical Ultracentrifugation appeared first on insideHPC.

by Rich Brueckner on (#3417P)

Over at the NVIDIA Blog, Kristin Bryson writes that the Oracle Bare Metal Cloud now offers Tesla P100 GPUs for technical computing. "The move underscores growing demand for public-cloud access to our GPU computing platform from an increasingly wide set of enterprise users. Oracle’s massive customer base means that a broad range of businesses across many industries will have access to accelerated computing to harness the power of AI, accelerated analytics and high performance computing." The post NVIDIA Tesla GPUs Come to Oracle Bare Metal Cloud appeared first on insideHPC.

by staff on (#3404A)

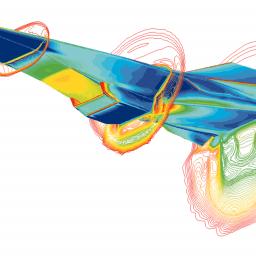

Associate Professor Jonathan Poggie and his team from Purdue have received a large research grant from the U.S. Department of Defense for supercomputing resources. The award enables science and technology research that would not be possible without extraordinary computer resources. "Poggie is the principal investigator for a new U.S. Department of Defense high-performance computing modernization program beginning in October, entitled ‘Prediction of Hypersonic Laminar-Turbulent Transition through Direct Numerical Simulation.’ The project is focused on making conventional hypersonic wind tunnels more useful for vehicle design by helping designers work through the noise and turbulence present in the tunnels and allowing them to more accurately interpret the results of the wind tunnel tests." The post Jonathan Poggie from Purdue Wins DoD Computing Award appeared first on insideHPC.

by staff on (#33ZXT)

Today Engility announced $14 million in task order awards from NOAA’s Geophysical Fluid Dynamics Laboratory. Engility scientists will conduct HPC software development and optimization, help users gain scientific insights, and maintain cyber security controls on NOAA’s R&D High Performance Computing System. These services assist NOAA GFDL in enhancing and advancing their HPC capability to explore and understand climate and weather. “As we saw with Hurricanes Harvey and Irma, a deeper understanding of climate and weather are critical to America’s preparedness, infrastructure and security stance,” said Lynn Dugle, CEO of Engility. “Engility has been at the forefront of leveraging HPC to advance scientific discovery and solve the toughest engineering problems. HPC is, and will continue to be, an area of high interest and value among our customers as they seek to analyze huge and ever-expanding data sets.” The post Engility To Provide NOAA With HPC Expertise appeared first on insideHPC.

by Rich Brueckner on (#33ZV4)

The rise of AI could potentially spur huge growth for the High Performance Computing market, but what kinds of results are your peers already getting right now? There is one way to find out: take our HPC & AI Survey. In return, we'll send you a free report with the results and enter your name in a drawing to win one of two Echo Show devices with Amazon Alexa technology. The post Take Our HPC & AI Survey and Win an Echo Show Device appeared first on insideHPC.

by Rich Brueckner on (#33ZQ9)

Rick Stevens gave this talk at the recent ATPESC training program. "The ATPESC program provides intensive, two weeks of training on the key skills, approaches, and tools to design, implement, and execute computational science and engineering applications on current high-end computing systems and the leadership-class computing systems of the future. As a bridge to that future, this two-week program fills the gap that exists in the training computational scientists typically receive through formal education or other shorter courses." The post A Vision for Exascale: Simulation, Data and Learning appeared first on insideHPC.

by staff on (#33ZME)

High Performance Computing is extending its reach into new areas. Not only are modeling and simulation being used more widely, but deep learning and other high performance data analytics (HPDA) applications are becoming essential tools across many disciplines. This sponsored post from Intel explores how Plymouth University's High Performance Computer Centre (HPCC) used Intel HPC Orchestrator to support diverse workloads when it deployed a new 1,500-core cluster. The post Supporting Diverse HPC Workloads on a Single Cluster appeared first on insideHPC.

by staff on (#33X8D)

Today, Intel announced a comprehensive hardware and software platform solution to enable faster deployment of customized field programmable gate array (FPGA)-based acceleration of networking, storage and computing workloads. “Intel is making it easier for server equipment makers such as Dell EMC to exploit FPGA technology for data acceleration as a ready-to-use platform,” said Dan McNamara, corporate vice president and general manager of Intel’s Programmable Solutions Group. “With our ecosystem partners, we are enabling the industry with point solutions with a substantial boost in performance while preserving power and cost budgets.” The post FPGAs Power New Intel Programmable Acceleration Cards appeared first on insideHPC.

by Rich Brueckner on (#33WRM)

In this special guest feature, Jon Bashor from LBNL writes that exascale computing will accelerate the push toward clean fusion energy. "Turning this from a promising technology into a mainstream scientific tool depends critically on high-performance, high-fidelity modeling of complex processes that develop over a wide range of space and time scales." The post Exascale Computing to Accelerate Clean Fusion Energy appeared first on insideHPC.

by staff on (#33WRP)

Today the IEEE Computer Society announced the winners of the IEEE-CS Technical Consortium on HPC Award for Excellence for Early Career Researchers in High Performance Computing. The TCHPC Award recognizes up to three individuals who have made outstanding, influential, and potentially long-lasting contributions in the field of high performance computing within five years of receiving their PhD degree as of January 1 of the year of the award. The post IEEE Recognizes Three Early Career Researchers in HPC appeared first on insideHPC.

by staff on (#33WFV)

In this special guest feature, Dr. Rosemary Francis from Ellexus describes why the customized nature of HPC is not a sustainable path forward for the next generation. "The downside is that many of our systems and tools are inaccessible to non-expert users. For example, deep learning is bringing more and more scientists closer towards HPC, but while they bring their knowledge, they also bring their high expectations for what they believe IT can do and not necessarily an understanding of how it works." The post How Can We Bring Apps to Racks? appeared first on insideHPC.

by Rich Brueckner on (#33WFX)

"This talk will explain the motivation behind dataflow computing to escape the end of frequency scaling in the push to exascale machines, introduce the Maxeler dataflow ecosystem including MaxJ code and DFE hardware, and demonstrate the application of dataflow principles to a specific HPC software package (Quantum ESPRESSO)." The post Multiscale Dataflow Computing: Competitive Advantage at the Exascale Frontier appeared first on insideHPC.

by Rich Brueckner on (#33SYP)

Vijay Nagarajan from the University of Edinburgh gave this talk at the ARM Research Summit. "The second annual Arm Research Summit is an academic summit to discuss future trends and disruptive technologies across all sectors of computing. The Summit includes talks from the leaders in their research fields, demonstrations, networking opportunities and the chance to interact and discuss projects with members of Arm Research." The post Scaling Up and Out with ARM Architectures appeared first on insideHPC.

by staff on (#33SWJ)

The High Performance Computing for Manufacturing (HPC4Mfg) program in the Energy Department’s Advanced Manufacturing Office (AMO) announced today its intent to issue its fifth solicitation in January 2018 to fund projects that allow manufacturers to use high-performance computing resources at the Department of Energy’s national laboratories to tackle major manufacturing challenges. The post HPC4Mfg Program Seeks New Projects appeared first on insideHPC.

by staff on (#33QCW)

"Computing is one of the least diverse science, technology, engineering, and mathematics (STEM) fields, with an under-representation of women and minorities, including African Americans and Hispanics. Leveraging this largely untapped talent pool will help address our nation’s growing demand for data scientists. Computational approaches for extracting insights from big data require the creativity, innovation, and collaboration of a diverse workforce." The post Bringing Diversity to Computational Science appeared first on insideHPC.

by Rich Brueckner on (#33Q8F)

Karl Schulz from Intel gave this talk at the MVAPICH User Group. "There is a growing sense within the HPC community for the need to have an open community effort to more efficiently build, test, and deliver integrated HPC software components and tools. To address this need, OpenHPC launched as a Linux Foundation collaborative project in 2016 with combined participation from academia, national labs, and industry. The project's mission is to provide a reference collection of open-source HPC software components and best practices in order to lower barriers to deployment and advance the use of modern HPC methods and tools." The post OpenHPC: Project Overview and Updates appeared first on insideHPC.

by staff on (#33MGJ)

Today Bright Computing announced a reseller agreement with San Diego-based SingleParticle.com. The company specializes in turn-key HPC infrastructure designed for high performance and low total cost of ownership (TCO), serving the global research community of cryo-electron microscopy (cryoEM). "With Bright, the management of an HPC cluster becomes very straightforward, empowering end users to administer their workloads, rather than relying on HPC experts," said Dr. Clara Cai, Manager at SingleParticle.com. "We are confident that with Bright’s technology, our customers can maintain our turn-key cryoEM cluster with little to no prior HPC experience." The post Bright Computing Powers SingleParticle.com for cryo-EM appeared first on insideHPC.

by staff on (#33MD4)

Tim Barr from Cray gave this talk at the HPC User Forum in Milwaukee. "Cray’s unique history in supercomputing and analytics has given us front-line experience in pushing the limits of CPU and GPU integration, network scale, tuning for analytics, and optimizing for both model and data parallelization. Particularly important to machine learning is our holistic approach to parallelism and performance, which includes extremely scalable compute, storage and analytics." The post A Perspective on HPC-enabled AI appeared first on insideHPC.

by staff on (#33M9S)

Today Supermicro announced support for NVIDIA Tesla V100 PCI-E and V100 SXM2 GPUs on its industry-leading portfolio of GPU server platforms. “With our latest innovations incorporating the new NVIDIA V100 PCI-E and V100 SXM2 GPUs in performance-optimized 1U and 4U systems with next-generation NVLink, our customers can accelerate their applications and innovations to help solve the world’s most complex and challenging problems.” The post Supermicro steps up with Optimized Systems for NVIDIA Tesla V100 GPUs appeared first on insideHPC.

by staff on (#33M6N)

“Berkeley Lab’s tradition of team science, as well as its proximity to UC Berkeley and Silicon Valley, makes it an ideal place to work on quantum computing end-to-end,” says Jonathan Carter, Deputy Director of Berkeley Lab Computing Sciences. “We have physicists and chemists at the lab who are studying the fundamental science of quantum mechanics, engineers to design and fabricate quantum processors, as well as computer scientists and mathematicians to ensure that the hardware will be able to effectively compute DOE science.” The post Sowing Seeds of Quantum Computation at Berkeley Lab appeared first on insideHPC.

by staff on (#33GY3)

Today Penguin Computing announced strategic support for the field of artificial intelligence through availability of its servers based on the highly advanced NVIDIA Tesla V100 GPU accelerator, powered by the NVIDIA Volta GPU architecture. “Deep learning, machine learning and artificial intelligence are vital tools for addressing the world’s most complex challenges and improving many aspects of our lives,” said William Wu, Director of Product Management, Penguin Computing. “Our breadth of products covers configurations that accelerate various demanding workloads – maximizing performance, minimizing P2P latency of multiple GPUs and providing minimal power consumption through creative cooling solutions.” The post Penguin Computing Launches NVIDIA Tesla V100-based Servers appeared first on insideHPC.

by staff on (#33GTC)

Today AMAX.AI launched the [SMART]Rack AI Machine Learning cluster, an all-inclusive rackscale platform maximized for performance, featuring up to 96x NVIDIA Tesla P40, P100 or V100 GPU cards and providing well over 1 PetaFLOP of compute power per rack. "The [SMART]Rack AI is revolutionary to Deep Learning data centers," said Dr. Rene Meyer, VP of Technology, AMAX. "Because it not only provides the most powerful application-based computing power, but it expedites DL model training cycles by improving efficiency and manageability through integrated management, network, battery and cooling all in one enclosure." The post AMAX.AI Unveils [SMART]Rack Machine Learning Cluster appeared first on insideHPC.

by staff on (#33GQ8)

Today IBM announced the Integrated Analytics System, a new unified data system designed to give users fast, easy access to advanced data science capabilities and the ability to work with their data across private, public or hybrid cloud environments. “Today’s announcement is a continuation of our aggressive strategy to make data science and machine learning more accessible than ever before and to help organizations like AMC, begin harvesting their massive data volumes – across infrastructures – for insight and intelligence,” said Rob Thomas, General Manager, IBM Analytics. The post IBM Moves Data Science Forward with Integrated Analytics System appeared first on insideHPC.

by Rich Brueckner on (#33GKW)

Adam Moody from LLNL presented this talk at the MVAPICH User Group. "High-performance computing is being applied to solve the world's most daunting problems, including researching climate change, studying fusion physics, and curing cancer. MPI is a key component in this work, and as such, the MVAPICH team plays a critical role in these efforts. In this talk, I will discuss recent science that MVAPICH has enabled and describe future research that is planned. I will detail how the MVAPICH team has responded to address past problems and list the requirements that future work will demand." The post Video: How MVAPICH & MPI Power Scientific Research appeared first on insideHPC.

by Richard Friedman on (#33GFP)

This year, OpenMP*, the widely used API for shared memory parallelism supported in many C/C++ and Fortran compilers, turns 20. OpenMP is a great example of how hardware and software vendors, researchers, and academia, volunteering to work together, can successfully design a specification that benefits the entire developer community. The post OpenMP at 20 Moving Forward to 5.0 appeared first on insideHPC.

by Rich Brueckner on (#33DD1)

In this video from the 2017 CGSF Review Meeting, Barbara Helland from the Department of Energy presents: With Exascale Looming, this is an Exciting Time for Computational Science. "Helland was also a presenter this week at the ASCR Advisory Committee Meeting, where she disclosed that the Aurora 21 Supercomputer coming to Argonne in 2021 will indeed be an exascale machine." The post With Exascale Looming, this is an Exciting Time for Computational Science appeared first on insideHPC.

by Rich Brueckner on (#33D6S)

Today NVIDIA and its systems partners Dell EMC, Hewlett Packard Enterprise, IBM and Supermicro unveiled more than 10 servers featuring NVIDIA Volta architecture-based Tesla V100 GPU accelerators, the world's most advanced GPUs for AI and other compute-intensive workloads. "Volta systems built by our partners will ensure that enterprises around the world can access the technology they need to accelerate their AI research and deliver powerful new AI products and services," said Ian Buck, vice president and general manager of Accelerated Computing at NVIDIA. The post Server Vendors Announce NVIDIA Volta Systems for Accelerated AI appeared first on insideHPC.

by Rich Brueckner on (#33D6T)

Today EPSRC in the UK announced the 10 winners of the recent ARCHER Best-Use Travel Competition. The competition aimed to identify the best scientific use of ARCHER, the UK’s national supercomputing facility, within the arena of the engineering and physical sciences. “As we see the increasing need for high performance computing to tackle today’s complex scientific questions, we recognize the need to encourage today’s young researchers to bring their skills to the world,” said Dr. Eddie Clarke, EPSRC’s Contract Manager for ARCHER. “The winners of these awards have shown ability, enthusiasm and real skill in their research and these prizes will help them work together with partners overseas to benefit science in the UK.” The post EPSRC Recognizes Young Scientists using ARCHER Supercomputing Facility appeared first on insideHPC.

by Rich Brueckner on (#33D3E)

In this special guest feature, Brad McCredie from IBM writes that the launch of Volta GPUs from NVIDIA heralds a new era of AI. "We’re excited about the launch of NVIDIA’s Volta GPU accelerators. Together with the NVIDIA NVLINK ‘information superhighway’ at the core of our IBM Power Systems, it provides what we believe to be the closest thing to an unbounded platform for those working in machine learning and deep learning and those dealing with very large data sets." The post No speed limit on NVIDIA Volta with rise of AI appeared first on insideHPC.

by Rich Brueckner on (#33D0F)

Vineeth Ram from HPE gave this talk at the HPC User Forum in Milwaukee. "Organizations across all sectors are putting Big Data to work. They are optimizing their IT operations and enhancing the way they communicate, learn, and grow their businesses in order to harness the full power of artificial intelligence (AI). Backed by high performance computing technologies, AI is revolutionizing the world as we know it—from web searches, digital assistants, and translations; to diagnosing and treating diseases; to powering breakthroughs in agriculture, manufacturing, and electronic design automation." The post Accelerate Innovation and Insights with HPC and AI appeared first on insideHPC.

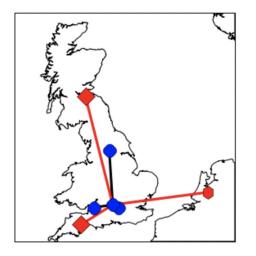

by staff on (#33A2S)

Today Panasas announced that the Science and Technology Facilities Council’s (STFC) Rutherford Appleton Laboratory (RAL) in the UK has expanded its JASMIN super-data-cluster with an additional 1.6 petabytes of Panasas ActiveStor storage, bringing total storage capacity to 20PB. This expansion required the formation of the largest realm of Panasas storage worldwide, which is managed by a single systems administrator. Thousands of users worldwide find, manipulate and analyze data held on JASMIN, which processes an average of 1-3PB of data every day. The post Panasas Upgrades JASMIN Super-Data-Cluster Facility to 20PB appeared first on insideHPC.

by staff on (#339Z7)

Today Bright Computing announced that Bright Cluster Manager 8.0 now integrates with IBM Power Systems. “The integration of Bright Cluster Manager 8.0 with IBM Power Systems has created an important new option for users running complex workloads involving high-performance data analytics,” said Sumit Gupta, VP, HPC, AI & Machine Learning, IBM Cognitive Systems. “Bright Computing’s emphasis on ease-of-use for Linux-based clusters within public, private and hybrid cloud environments speaks to its understanding that while data is becoming more complicated, the management of its workloads must remain accessible to a changing workforce.” The post Bright Computing Announces Integration with IBM Power Systems appeared first on insideHPC.

by Rich Brueckner on (#339SD)

In this RichReport slidecast, James Coomer from DDN presents an overview of the Infinite Memory Engine (IME). "IME is a scale-out, flash-native, software-defined, storage cache that streamlines the data path for application IO. IME interfaces directly to applications and secures IO via a data path that eliminates file system bottlenecks. With IME, architects can realize true flash-cache economics with a storage architecture that separates capacity from performance." The post Infinite Memory Engine: HPC in the FLASH Era appeared first on insideHPC.

by Rich Brueckner on (#339NT)

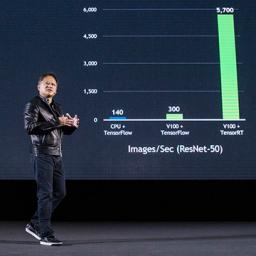

Today at GTC China, NVIDIA made a series of announcements around deep learning and GPU-accelerated computing for hyperscale datacenters. "Demand is surging for technology that can accelerate the delivery of AI services of all kinds. And NVIDIA’s deep learning platform — which the company updated Tuesday with new inferencing software — promises to be the fastest, most efficient way to deliver these services." The post NVIDIA Brings Deep Learning to Hyperscale at GTC China appeared first on insideHPC.

by staff on (#339B7)

Cloud adoption is accelerating rapidly, easing the burden of managing data-rich workloads for enterprises big and small. Yet common myths and misconceptions about the hybrid cloud are keeping enterprises from reaping the benefits. "In this article, we will debunk five of the top most commonly believed myths that keep companies from strengthening their infrastructure with a hybrid approach." The post Common Myths Stalling Organizations From Cloud Adoption appeared first on insideHPC.

by staff on (#336CG)

Today Nimbix announced the immediate availability of a new high-performance storage platform in the Nimbix Cloud specifically designed for the demands of artificial intelligence and deep learning applications and workflows. “As enterprises, researchers and startups begin to invest in GPU-accelerated artificial intelligence technologies and workflows, they are realizing that data is a big part of this challenge,” said Steve Hebert, CEO of Nimbix. “With the new storage platform, we are helping our customers achieve performance that breaks through the bottlenecks of commodity or traditional platforms and does so with a turnkey deep learning cloud offering.” The post Nimbix Launches High Speed Cloud Storage for AI and Deep Learning appeared first on insideHPC.

by staff on (#3374W)

Today Cray announced it has completed the previously announced transaction and strategic partnership with Seagate centered around the addition of the ClusterStor high-performance storage business. “As a pioneer in providing large-scale storage systems for supercomputers, it’s fitting that Cray will take over the ClusterStor line.” The post Cray Assimilates ClusterStor from Seagate appeared first on insideHPC.

by staff on (#336Z8)

The CENATE Proving Ground for HPC Technologies at PNNL has named Kevin Barker as its new Director. "The goal of CENATE is to evaluate innovative and transformational technologies that will enable future DOE leadership class computing systems to accelerate scientific discovery," said PNNL's Laboratory Director Steven Ashby. "We will partner with major computing companies and leading researchers to co-design and test the leading-edge components and systems that will ultimately be used in future supercomputing platforms." The post Kevin Barker to Lead CENATE Proving Ground for HPC Technologies appeared first on insideHPC.

by staff on (#336CE)

Scalability of scientific applications is a major focus of the Department of Energy’s Exascale Computing Project (ECP) and in that vein, a project known as IDEAS-ECP, or Interoperable Design of Extreme-scale Application Software, is also being scaled up to deliver insight on software development to the research community. The post IDEAS Program Fostering Better Software Development for Exascale appeared first on insideHPC.

by staff on (#3361M)

Computing Pioneer Gordon Bell will share insights and inspiration at SC17 in Denver. "We are honored to have the legendary Gordon Bell speak at SC17," said Conference Chair Bernd Mohr, from Germany's Jülich Supercomputing Centre. "The prize he established has helped foster the rapid adoption of new paradigms, given recognition for specialized hardware, as well as rewarded the winners' tremendous efforts and creativity - especially in maximizing the application of the ever-increasing capabilities of parallel computing systems. It has been a beacon for discovery and making the 'might be possible' an actual reality." The post Computing Pioneer Gordon Bell to Present at SC17 appeared first on insideHPC.

by Rich Brueckner on (#3361P)

UC Berkeley professor Kathy Yelick presented this talk at the 2017 ACM Europe Conference. "Yelick's keynote lecture focused on the exciting opportunities that High Performance Computing presents, the need for advances in algorithms and mathematics to advance along with the system performance, and how the variety of workloads will stress the different aspects of exascale hardware and software systems." The post Kathy Yelick Presents: Breakthrough Science at the Exascale appeared first on insideHPC.

by Rich Brueckner on (#335YE)

In this podcast, the Radio Free HPC team looks at China’s massive upgrade of the Tianhe-2A supercomputer to 95 Petaflops peak performance. "As detailed in a new 21-page report by Jack Dongarra from the University of Tennessee, the upgrade should nearly double the performance of the system, which is currently ranked at #2 on TOP500." The post Radio Free HPC Looks at China’s 95 Petaflop Tianhe-2A Supercomputer appeared first on insideHPC.

by staff on (#33368)

“Understanding and predicting material performance under extreme environments is a foundational capability at Los Alamos,” said David Teter, Materials Science and Technology division leader at Los Alamos. “We are well suited to apply our extensive materials capabilities and our high-performance computing resources to industrial challenges in extreme environment materials, as this program will better help U.S. industry compete in a global market.” The post LANL Steps Up to HPC for Materials Program appeared first on insideHPC.
