|

by Rich Brueckner on (#31XSR)

Natalia Vassilieva from HP Labs gave this talk at the HPC User Forum in Milwaukee. "Our Deep Learning Cookbook is based on a massive collection of performance results for various deep learning workloads on different hardware/software stacks, and analytical performance models. This combination enables us to estimate the performance of a given workload and to recommend an optimal hardware/software stack for that workload. Additionally, we use the Cookbook to detect bottlenecks in existing hardware and to guide the design of future systems for artificial intelligence and deep learning." The post Video: Characterization and Benchmarking of Deep Learning appeared first on insideHPC.
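The Cookbook's own analytical models are not described in this summary. As a rough illustration of the general idea only, here is a sketch that swaps in a simple roofline-style estimate: each layer is assumed to be limited either by peak arithmetic throughput or by memory bandwidth, per-layer times are summed, and the candidate with the lowest total is "recommended". The classes, hardware names, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hardware:
    name: str
    peak_flops: float     # peak arithmetic throughput, FLOP/s
    mem_bandwidth: float  # main-memory bandwidth, bytes/s


@dataclass
class Layer:
    name: str
    flops: float          # floating-point operations for one pass
    bytes_moved: float    # bytes read from and written to main memory


def layer_time(layer: Layer, hw: Hardware) -> float:
    # Roofline bound: the layer is limited by compute or by memory traffic
    return max(layer.flops / hw.peak_flops, layer.bytes_moved / hw.mem_bandwidth)


def estimate(workload: list[Layer], hw: Hardware) -> float:
    # Assumes layers run back to back with no overlap or framework overhead
    return sum(layer_time(layer, hw) for layer in workload)


def recommend(workload: list[Layer], candidates: list[Hardware]) -> Hardware:
    # Pick the candidate with the smallest estimated runtime
    return min(candidates, key=lambda hw: estimate(workload, hw))


# Hypothetical hardware and workload numbers, for illustration only
candidates = [
    Hardware("gpu_a", peak_flops=10e12, mem_bandwidth=500e9),
    Hardware("gpu_b", peak_flops=5e12, mem_bandwidth=1000e9),
]
workload = [
    Layer("conv", flops=2e12, bytes_moved=1e9),  # compute-heavy layer
    Layer("fc", flops=1e10, bytes_moved=4e9),    # memory-heavy layer
]
for hw in candidates:
    print(f"{hw.name}: {estimate(workload, hw):.3f} s")
print("recommended:", recommend(workload, candidates).name)
```

In this made-up case the compute-heavy convolution dominates, so the device with the higher peak FLOP/s wins even though its memory is slower. A real model would also account for communication, overlap, and software-stack effects.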

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Field | Value |
|---|---|
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-03 22:30 |

|

by staff on (#31XJR)

For many deep learning startups, buying AI hardware and a large quantity of powerful GPUs is not feasible, so many of them are turning to cloud GPU computing to crunch their data and run their algorithms. Katie Rivera of One Stop Systems explores some of the AI hardware challenges that can arise, as well as the new tools designed to tackle these issues. The post Solving AI Hardware Challenges appeared first on insideHPC.

|

|

by Rich Brueckner on (#31TT8)

The inaugural Supercomputing Asia conference has issued its Call for Technical Papers. The event takes place March 26-29, 2018 in Singapore. The post Call for Submissions: Supercomputing Asia 2018 in Singapore appeared first on insideHPC.

|

|

by staff on (#31TQJ)

MIT researchers have developed a new general-purpose technique that sheds light on the inner workings of neural nets trained to process language. "During training, a neural net continually readjusts thousands of internal parameters until it can reliably perform some task, such as identifying objects in digital images or translating text from one language to another. But on their own, the final values of those parameters say very little about how the neural net does what it does." The post MIT Paper Sheds Light on How Neural Networks Think appeared first on insideHPC.

|

|

by Rich Brueckner on (#31TM5)

Doug Kothe from ORNL gave this talk at the HPC User Forum in Milwaukee. "The Exascale Computing Project (ECP) was established with the goals of maximizing the benefits of high-performance computing (HPC) for the United States and accelerating the development of a capable exascale computing ecosystem." The post Video: The DOE Exascale Computing Project appeared first on insideHPC.

|

|

by Rich Brueckner on (#31TDE)

In this Radio Free HPC podcast, Dan Olds and Shahin Khan from OrionX describe their new AI Survey. "OrionX Research has completed one of the most comprehensive surveys to date of Artificial Intelligence, Machine Learning, and Deep Learning. With over 300 respondents in North America, representing 13 industries, our model indicates a confidence level of 95% and a margin of error of 6%. Covering 144 questions/data points, it provides a comprehensive view of what customers are doing and planning to do with AI/ML/DL." The post New OrionX Survey: Insights in Artificial Intelligence appeared first on insideHPC.
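As a sanity check on the quoted figures, the textbook margin of error for a proportion is z·sqrt(p(1 - p)/n), with z ≈ 1.96 at 95% confidence. A minimal sketch, assuming simple random sampling and the worst case p = 0.5; OrionX's actual model is not described in the post, and the function name is illustrative.

```python
import math


def margin_of_error(n: int, z: float = 1.96, p: float = 0.5) -> float:
    """Half-width of a confidence interval for a proportion (simple random sample)."""
    return z * math.sqrt(p * (1 - p) / n)


# z = 1.96 corresponds to 95% confidence; p = 0.5 gives the widest interval
moe = margin_of_error(300)
print(f"n=300: +/-{moe:.1%}")
```

With n = 300 this gives about 5.7%, which rounds to the stated 6%; since the survey had "over 300" respondents, the true figure under these assumptions would be slightly smaller.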

|

|

by Rich Brueckner on (#31QMJ)

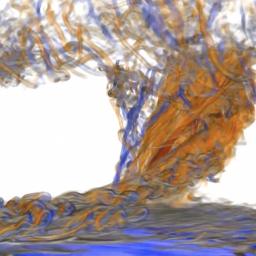

"What makes this work significant is the use of supercomputing resources to produce simulations of supercells where data is saved with extremely high spatial and temporal resolution, and the use of visualization techniques (such as volume rendering and trajectory clouds) to produce video that exposes the highly variable flow features that occur throughout the life of the simulated storms. Some of these simulations contain long lived tornadoes producing near-surface winds exceeding 300 mph."The post HPC Challenges in Simulating the World’s Most Powerful Tornados appeared first on insideHPC.

|

|

by Rich Brueckner on (#31QJH)

The GPU Technology Conference (GTC 2018) has issued its Call for Participation. The event takes place March 26-29 in San Jose, California. "Don’t miss this unique opportunity to participate in the world’s most important GPU event, NVIDIA’s GPU Technology Conference (GTC 2018). Sign up to present a talk, poster, or lab on how GPUs power the most dynamic areas in computing today—including AI and deep learning, big data analytics, healthcare, smart cities, IoT, HPC, VR, and more." The post Call for Participation: GTC 2018 in San Jose appeared first on insideHPC.

|

|

by Rich Brueckner on (#31NE8)

"In the last few years DNA sequencing technologies have become extremely cheap enabling us to quickly generate terabytes of data for a few thousand dollars. Analysis of this data has become the new bottleneck. Novel compute-intensive streaming approaches that leverage this data without the time-costly step of genome assembly and how UWA’s Edwards group leveraged these approaches to find new breeding targets in crop species are presented." The post Video: The State of Bioinformatics in HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#31N9T)

The Pawsey Supercomputing Centre in Australia is seeking an HPC System Administrator in our Job of the Week. "Pawsey’s Supercomputing Team is responsible for configuring the infrastructure to fulfil the needs of the Australian research community and to engage with that community to make best use of the infrastructure. The incumbent, as part of this highly skilled team of professional specialists and system administrators, will ensure that Pawsey’s high performance computing resources and related services meet its service expectations." The post Job of the Week: HPC System Administrator at Pawsey Supercomputing Centre appeared first on insideHPC.

|

|

by Rich Brueckner on (#31JWF)

In this video from the HPC User Forum in Milwaukee, Earl Joseph and Steve Conway from Hyperion Research present an update on the HPC, AI, and storage markets. "Hyperion Research forecasts that the worldwide HPC server-based AI market will expand at a 29.5% CAGR to reach more than $1.26 billion in 2021, up more than three-fold from $346 million in 2016." The post Trends in the Worldwide HPC Market appeared first on insideHPC.
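The quoted growth rate can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. A short sketch using the figures from the forecast above; the function name is illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


start, end = 346e6, 1.26e9  # 2016 and 2021 market size, USD
rate = cagr(start, end, 2021 - 2016)
print(f"growth multiple: {end / start:.2f}x, CAGR: {rate:.1%}")
```

Over five years this works out to roughly 29.5% per year, and the overall multiple of about 3.6 is consistent with "more than three-fold".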

|

|

by staff on (#31JJ2)

In this TechNative Podcast, Mark Fernandez from HPE describes the company’s collaboration with NASA to send a supercomputer into orbit. "By NASA’s rules, not just any computer can go into space. Their components must be radiation hardened, especially the CPUs," reports HPE Insights. "Otherwise, they tend to fail due to the effects of ionizing radiation." The post Podcast: HPE Sends a Supercomputer into Orbit appeared first on insideHPC.

|

|

by Rich Brueckner on (#31JC0)

Today Rescale announced plans to offer X2 Firebird CAE software on the company’s ScaleX cloud HPC platform. X2 Firebird will be deployed as the first on-demand, cloud-only numerical simulation software for scientists and engineers on Rescale. X2 Firebird leverages the latest in systems programming and parallel computing, enabling real-time team collaboration on complex technical projects. This […] The post X2 Firebird CAE Software Comes to Rescale HPC Cloud appeared first on insideHPC.

|

|

by staff on (#31J8D)

Today Asetek announced an order from a new channel partner to service demand from a new OEM customer for an undisclosed HPC installation. All parties remain undisclosed at this time. "Adding OEMs has been a key goal for our datacenter business and it is particularly satisfying to add another significant OEM to our portfolio of customers," said André Sloth Eriksen, CEO and founder of Asetek. "This order confirms our ability to leverage our leading position in the HPC segment to attract new customers as well as end-users." The post Asetek lands new OEM, Channel Partner, and HPC Installation appeared first on insideHPC.

|

|

by Rich Brueckner on (#31J2J)

"This talk will provide an overview of the MVAPICH project (past, present and future). Future roadmap and features for upcoming releases of the MVAPICH2 software family (including MVAPICH2-X, MVAPICH2-GDR, MVAPICH2-Virt, MVAPICH2-EA and MVAPICH2-MIC) will be presented. Current status and future plans for OSU INAM, OEMT and OMB will also be presented." The post Overview of the MVAPICH Project and Future Roadmap appeared first on insideHPC.

|

|

by staff on (#31GAQ)

With a custom Direct Liquid Cooling solution from CoolIT Systems, the CHIME telescope will map out the entire northern sky each day, aiming to constrain the properties and evolution of Dark Energy over a broad swath of cosmic history. "The liquid cooled system consists of 256 rack-mounted General Technics GT0180 custom 4u servers housed in 26 racks managed by CoolIT Systems Rack DCLC CHx40 Heat Exchange Modules. The custom direct contact cooling loops manage 100% of heat generated by the single Intel Xeon E5 2620v3 CPUs and the Dual AMD FirePro S9300x2 GPUs, while simultaneously pulling heat from the ambient air into the liquid coolant loops." The post Canada’s Biggest Radio Telescope to use CoolIT Systems Liquid Cooling appeared first on insideHPC.

|

|

by staff on (#31F44)

Today IBM announced a 10-year, $240 million investment to create the MIT–IBM Watson AI Lab. "The combined MIT and IBM talent dedicated to this new effort will bring formidable power to a field with staggering potential to advance knowledge and help solve important challenges." The post Announcing the New MIT–IBM Watson AI Lab appeared first on insideHPC.

|

|

by staff on (#31F0K)

Over at the NVIDIA Blog, Abdul Hamid Halabi writes that the Center for Clinical Data Science (CCDS) today received the world’s first NVIDIA DGX systems with Volta. "Soon, Boston-area radiologists will have AI 'assistants' integrated into their daily workflows, helping them more quickly and accurately diagnose disease from MRIs, CAT scans, X-rays and more. The trained neural networks residing on DGX-1 systems in CCDS’s data center are in a constant state of learning, continually ingesting countless medical images worldwide." The post NVIDIA Volta GPUs to Help CDDS Advance Medicine with AI appeared first on insideHPC.

|

|

by MichaelS on (#31F0N)

"Understanding how the pipeline slots are being utilized can greatly increase the performance of the application. If pipeline slots are blocked for some reason, performance will suffer. Likewise, getting an understanding of the various cache misses can lead to a better organization of the data. This can increase performance while reducing latencies of memory to CPU." The post The Internet of Things and Tuning appeared first on insideHPC.

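One common way to quantify pipeline-slot usage is Intel's Top-Down Microarchitecture Analysis. The post does not name this method, so treat it as an assumed framing. A minimal level-1 sketch, assuming a 4-wide core and the hardware-event inputs used by the published Top-Down formulas for such cores; the counter values are made up for illustration.

```python
def top_down_level1(cpu_clk_unhalted, uops_issued_any, uops_retired_slots,
                    int_misc_recovery_cycles, idq_uops_not_delivered, width=4):
    """Split all issue slots into the four level-1 Top-Down categories."""
    slots = width * cpu_clk_unhalted  # total pipeline slots available
    # Slots where the front end delivered no uop although the back end could accept one
    frontend_bound = idq_uops_not_delivered / slots
    # Slots spent on uops that never retired, plus slots lost recovering from mispredicts
    bad_speculation = (uops_issued_any - uops_retired_slots
                       + width * int_misc_recovery_cycles) / slots
    # Slots that did useful work
    retiring = uops_retired_slots / slots
    # Remaining slots stalled in the back end (memory stalls such as cache
    # misses, or limits on core execution resources)
    backend_bound = 1.0 - frontend_bound - bad_speculation - retiring
    return {
        "frontend_bound": frontend_bound,
        "bad_speculation": bad_speculation,
        "retiring": retiring,
        "backend_bound": backend_bound,
    }


# Hypothetical readings of CPU_CLK_UNHALTED.THREAD, UOPS_ISSUED.ANY,
# UOPS_RETIRED.RETIRE_SLOTS, INT_MISC.RECOVERY_CYCLES, IDQ_UOPS_NOT_DELIVERED.CORE
breakdown = top_down_level1(1_000_000, 2_300_000, 2_000_000, 25_000, 400_000)
for category, fraction in breakdown.items():
    print(f"{category:16s} {fraction:6.1%}")
```

With these made-up numbers half the slots retire useful work and 30% are back-end bound, which is where the cache misses mentioned above would surface. Profilers such as Intel VTune Profiler report this breakdown directly from the real counters.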
|

|

by staff on (#31EXB)

Today Cray announced that the company has signed a solutions provider agreement with Vanguard Infrastructures under which Vanguard will develop, market, and sell on-premises and cloud-as-a-service cybersecurity solutions that fuse supercomputing technologies with an open, enterprise-ready software framework for big data analytics. Vanguard has also purchased a Cray Urika-GX system. The post Vanguard Infrastructures Teams with Cray for Cybersecurity appeared first on insideHPC.

|

|

by Rich Brueckner on (#31ESZ)

Leonardo Flores from the European Commission gave this talk at the HPC User Forum in Milwaukee. "High-Performance Computing is a strategic resource for Europe's future as it allows researchers to study and understand complex phenomena while allowing policy makers to make better decisions and enabling industry to innovate in products and services. The European Commission funds projects to address these needs." The post Video: Europe’s HPC Strategy appeared first on insideHPC.

|

|

by Rich Brueckner on (#31BX1)

Open OnDemand is an NSF-funded project to develop a widely shareable web portal that provides HPC centers with advanced web and graphical interface capabilities. Through OnDemand, HPC clients can upload and download files, create, edit, submit and monitor jobs, run GUI applications and connect via SSH, all via a web browser, with no client software to install and configure. The post Ohio Supercomputer Center open-sources HPC Access Portal appeared first on insideHPC.

|

|

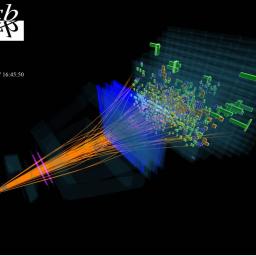

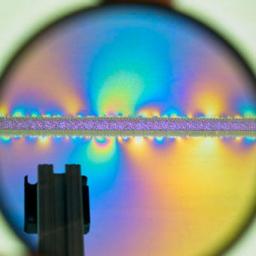

by staff on (#31BSQ)

EPFL’s physicists are moving forward in their efforts to solve the mysteries of the universe. A particle detector made up of 10,000 kilometers of scintillating fiber is under construction and will be added onto CERN's particle accelerator. The post EPFL Building New Particle Detector for Large Hadron Collider appeared first on insideHPC.

|

|

by Rich Brueckner on (#31BSS)

In this guest feature, Jeffrey Schwab describes his role as SCinet Chair at SC17 and how his team of volunteers is building the fastest and most powerful volunteer-built network in the world. "I believe the main attraction for SCinet volunteers is the opportunity to build something unique on a scale and timetable that is challenging in its own right." The post Interview: Jeffrey Schwab on Building the World’s Fastest Network at SC17 appeared first on insideHPC.

|

|

by staff on (#31BP5)

Today, 16 organizations met at the Barcelona Supercomputing Center to mark the start of the EuroEXA project, the next stage of EU investment toward realizing exascale computing in Europe. "In EuroEXA, we have taken a holistic approach to break-down the inefficiencies of the historic abstractions and bring significant innovation and co-design across the entire computing stack." The post Announcing the EuroEXA Project for Exascale appeared first on insideHPC.

|

|

by staff on (#31BK5)

Like many other HPC workloads, deep learning is a tightly coupled application that alternates between compute-intensive number-crunching and high-volume data sharing. Intel explores how the Intel Scalable System Framework can serve as a high performance computing platform that runs deep learning workloads and more. The post The Intel Scalable System Framework: Kick-Starting the AI Revolution appeared first on insideHPC.
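The compute/communicate alternation described above is easiest to see in synchronous data-parallel training. A minimal single-process sketch, assuming four simulated workers fitting y = w·x by gradient descent on a toy dataset; the averaging function is a hypothetical stand-in for the allreduce a real cluster would perform over its interconnect (for example with MPI).

```python
def local_gradient(w, shard):
    # Compute phase: gradient of mean((w*x - y)^2) with respect to w on one shard
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def allreduce_mean(values):
    # Communication phase stand-in: sum across workers, then divide by worker count
    return sum(values) / len(values)


def train(shards, w=0.0, lr=0.01, steps=200):
    for _ in range(steps):
        grads = [local_gradient(w, shard) for shard in shards]  # number-crunching
        g = allreduce_mean(grads)                               # data sharing
        w -= lr * g  # every worker applies the same update, keeping replicas in sync
    return w


data = [(x, 3.0 * x) for x in range(1, 9)]   # toy data with true slope 3
shards = [data[i::4] for i in range(4)]      # equal-size shard per simulated worker
w = train(shards)
print(f"learned w = {w:.6f}")
```

Because every shard has the same size, averaging the shard gradients equals the full-batch gradient, so the replicas agree after every step. On a real system the averaging step is what stresses the interconnect, since it happens once per iteration for every model parameter.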

|

|

by staff on (#318QW)

Today Atos announced it has signed a channel partner agreement with OCF DATA Limited to resell Atos Bullion servers. OCF will sell the Bullion devices to higher education, manufacturing, and utilities sectors in the UK. The post OCF DATA to sell Bullion High-end Servers appeared first on insideHPC.

|

|

by staff on (#318MT)

Researchers at LANL are using Machine Learning to predict earthquakes. “The novelty of our work is the use of machine learning to discover and understand new physics of failure, through examination of the recorded auditory signal from the experimental setup. I think the future of earthquake physics will rely heavily on machine learning to process massive amounts of raw seismic data. Our work represents an important step in this direction." The post Predicting Earthquakes with Machine Learning appeared first on insideHPC.

|

|

by staff on (#318AT)

Researchers from across the University of Exeter can now benefit from a new HPC machine - Isca - that was configured and integrated by OCF to give the university a larger capacity for computational research. "We’ve seen in the last few years a real growth in interest in High-Performance Computing from life sciences, particularly with the availability of new high-fidelity genome sequencers, which have heavy compute requirements, and that demand will keep going up." The post OCF Builds Isca Supercomputer for Life Sciences at University of Exeter appeared first on insideHPC.

|

|

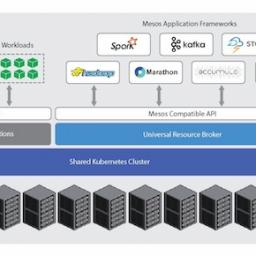

by staff on (#3185P)

Today Univa announced the open source availability of the Universal Resource Broker (URB) adapter for Kubernetes. This new URB adapter will be of interest for organizations running Apache Mesos frameworks that are looking to deploy Kubernetes and Mesos workloads on a common infrastructure. The release of the URB adapter for Kubernetes marks a significant open source contribution by Univa and demonstrates its commitment to the Kubernetes community and to helping users get the most from their infrastructure investments. The post Univa Open Sources Universal Resource Broker for Kubernetes appeared first on insideHPC.

|

|

by Rich Brueckner on (#3185R)

In this podcast, the Radio Free HPC team looks at Dan’s recent talk on High Performance Interconnects. "When it comes to choosing an interconnect for your HPC cluster, what is the best way to go? Is offloading better than onloading? You can find out more by watching Dan’s talk from the HPC Advisory Council Australia conference." The post Radio Free HPC Looks at High Performance Interconnects appeared first on insideHPC.

|

|

by staff on (#315YM)

The Barcelona Supercomputing Center will host the ACM Europe Conference this week, bringing together over 300 computer scientists from around the world. With main themes centering on Cybersecurity and High Performance Computing, the event takes place Sept. 7-8 in Barcelona. The post ACM Europe to Focus on Cybersecurity and HPC this week in Barcelona appeared first on insideHPC.

|

|

by Rich Brueckner on (#315SS)

"This talk will reflect on prior analysis of the challenges facing high-performance interconnect technologies intended to support extreme-scale scientific computing systems, how some of these challenges have been addressed, and what new challenges lay ahead. Many of these challenges can be attributed to the complexity created by hardware diversity, which has a direct impact on interconnect technology, but new challenges are also arising indirectly as reactions to other aspects of high-performance computing, such as alternative parallel programming models and more complex system usage models." The post Challenges and Opportunities for HPC Interconnects and MPI appeared first on insideHPC.

|

|

by staff on (#313P8)

Today One Stop Systems announced that the company ranks in the top half of the Inc. 5000 list of Fastest Growing Private Companies. This is the 7th time OSS has been on the Inc. 5000 list. Of the tens of thousands of companies that have applied to the Inc. 5000 over the years, only a fraction have made the list more than once. A mere two percent have made the list seven times. "We’re proud to be part of the small fraction of companies that have made the Inc. 5000 list seven times," said Steve Cooper, CEO of OSS. "One Stop Systems continues to provide leading edge technology products to the high performance computing market, propelling its growth." The post One Stop Systems on Inc. 5000 List of Fastest Growing Private Companies appeared first on insideHPC.

|

|

by Rich Brueckner on (#313MQ)

Alan Williams from NCI presented this talk at the HPC Advisory Council Australia Conference. "Explore the services and technology at the National Computational Infrastructure (NCI), based in Canberra, Australia, with Allan Williams, and the delivery of HPC, Cloud and Storage services to Australian researchers around the country." The post HPC vs. Cloud – The Return on Investment in eResearch appeared first on insideHPC.

|

|

by staff on (#311BT)

"To meet the ambitious targets for exascale computing, many cooling companies are exploring optimizations and innovative methods that will redefine cooling architectures for the next generation of HPC systems. Here, some of the prominent cooling technology providers give their views on the current state and future prospects of cooling technology in HPC." The post What Lies Ahead for HPC Cooling? appeared first on insideHPC.

|

|

by Rich Brueckner on (#3117P)

Kitware is seeking a Scientific Visualization Developer in our Job of the Week. "Kitware is seeking to hire highly skilled Research and Development Engineers (R&D Engineers) to join our Scientific Computing team and contribute to our scientific and information visualization efforts. Candidates will work to develop and improve leading visualization software solutions." The post Job of the Week: Scientific Visualization Developer at Kitware appeared first on insideHPC.

|

|

by Marvyn on (#310EQ)

Sponsored Post Researchers at Google and Intel recently collaborated to extract the maximum performance from Intel® Xeon and Intel® Xeon Phi processors running TensorFlow*, a leading deep learning and machine learning framework. This effort resulted in significant performance gains and leads the way for ensuring similar gains from the next generation of products from Intel. The post TensorFlow Copy appeared first on insideHPC.

|

|

by staff on (#30YX4)

Applications are now being accepted for the ISC 2018 Student Cluster Competition. Entering its 33rd year, ISC High Performance offers students a unique opportunity to learn how HPC influences our world and day-to-day learning. "The ISC-HPCAC Student Cluster Competition encourages international teams of university students to showcase their expertise in a friendly yet spirited competition that builds critical skills, professional relationships, competitive spirits, and lifelong friendship." The post Enter Your Team in the ISC 2018 Student Cluster Competition appeared first on insideHPC.

|

|

by staff on (#30YPZ)

Advanced Clustering Technologies has installed a new supercomputer at the University of Southern Mississippi. Called Magnolia, the system will support research and training in computational and data-enabled science and engineering. The post Magnolia Supercomputer Powers Research at University of Southern Mississippi appeared first on insideHPC.

|

|

by Rich Brueckner on (#30YK7)

Today XTREME Design Inc. announced that Dr. David Barkai has joined the company as an adviser. As a Japanese startup offering cloud-based, virtual supercomputing-on-demand, XTREME Design hopes to tap Dr. Barkai's extensive HPC expertise in its effort to enter the US market for cloud HPC. "XTREME Design IaaS computing services deliver an easy-to-use customer experience through a robust UI/UX and cloud management features." The post David Barkai Joins XTREME Design as the company Looks to Bring Cloud HPC to U.S. appeared first on insideHPC.

|

|

by Rich Brueckner on (#30YDT)

Today the Universities Space Research Association (USRA) announced it has upgraded its current quantum annealing computer to a D-Wave 2000Q system. The system promises to help solve challenging problems in a variety of applications, including machine learning, scheduling, diagnostics, medicine, and biology. The post USRA Upgrades D-Wave Quantum Computer to 2000 Qubits appeared first on insideHPC.

|

|

by Rich Brueckner on (#30YAG)

Justin Glen and Daniel Richards from DDN presented this talk at the HPC Advisory Council Australia Conference. "Burst Buffer was originally created to checkpoint-restart applications and has evolved to help accelerate applications & file systems and make HPC clusters more predictable. This presentation explores regional use cases, recommendations on burst buffer sizing and investment and where it is best positioned in a HPC workflow." The post Video: DDN Burst Buffer appeared first on insideHPC.

|

|

by staff on (#30V0K)

The Exascale Computing Project (ECP) is working with a number of National Labs on the development of supercomputers with 50x better performance than today's systems. In this special guest feature, ECP describes how Argonne is stepping up to the challenge. "Given the complexity and importance of exascale, it is not anything a single lab can do," said Argonne's Rick Stevens. The post Argonne Steps up to the Exascale Computing Project appeared first on insideHPC.

|

|

by staff on (#30V0M)

Ace Computers and BeeGFS have teamed to deliver a complete parallel file system that addresses the storage access speed issues that slow down even the fastest supercomputers. BeeGFS eliminates the gap between compute speed and the limited speed of storage access, which otherwise leaves these clusters stalling on disk access while reading input data or writing intermediate or final simulation results. "We are building clusters that are more and more powerful," said Ace Computers CEO John Samborski. "So we recognized that storage access speed was becoming an issue. BeeGFS has proven to be an excellent, cost-effective solution for our clients and a valuable addition to our portfolio of partners." The post Ace Computers Teams with BeeGFS for HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#30TWY)

"As part of its ongoing inclusivity efforts, SC17 will once again offer on-site child care during the conference. Available to registered attendees and exhibitors for a small fee, SC17 day care is available for children between the ages of 6 months through 12 years old are eligible to participate." The post Bringing Your Kids to SC17 in Denver? appeared first on insideHPC.

|

|

by Richard Friedman on (#30TJZ)

Researchers at Google and Intel recently collaborated to extract the maximum performance from Intel® Xeon and Intel® Xeon Phi processors running TensorFlow*, a leading deep learning and machine learning framework. This effort resulted in significant performance gains and leads the way for ensuring similar gains from the next generation of products from Intel. Optimizing Deep Neural Network (DNN) frameworks such as TensorFlow presents challenges not unlike those encountered with more traditional High Performance Computing applications for science and industry. The post TensorFlow Deep Learning Optimized for Modern Intel Architectures appeared first on insideHPC.

|

|

by staff on (#30R14)

Today Globus.org announced general availability of Globus for Google Drive, a new capability that lets users seamlessly connect Google Drive with their existing storage ecosystem, enabling a single interface for data transfer, sharing and publication across all storage systems. "Our researchers wanted to use Google Drive for data storage, but found that they had to babysit the data transfers," said Krishna Muriki, Computer Systems Engineer, HPC Services, at Lawrence Berkeley National Laboratory. "They were already familiar with using Globus so we thought it would make a good interface for Google Drive; that’s why we partnered with Globus to develop this connector. Now our researchers have a familiar, web-based interface across all their storage resources, including Google Drive, so it is painless to move data where they need it and share results with collaborators. Globus manages all the data movement and authorization, improving security and reliability as well." The post Globus for Google Drive Provides Unified Interface for Collaborative Research appeared first on insideHPC.

|

|

by staff on (#30QGG)

In this AI Podcast, Paul Wigley from the Australian National University describes how his team of scientists applied AI to an experiment to create a Bose-Einstein condensate. In doing so, they had a question: if we can use AI as a tool in this experiment, can we use AI as a novel scientist in its own right, to explore different parts of physics and different parts of science? The post Podcast: Could an AI Win the Nobel Prize? appeared first on insideHPC.

|

|

by Rich Brueckner on (#30QCS)

"This talk will focus on the role MVAPICH has played at the Texas Advanced Computing Center over multiple generations of multiple technologies of interconnect, and why it has been critical not only in maximizing interconnect performance, but overall system performance as well. The talk will include examples of how poorly tuned interconnects can mean the difference between success and failure for large systems, and how the MVAPICH software level continues to provide significant performance advantages across a range of applications and interconnects." The post Video: MVAPICH at Petascale on the Stampede System appeared first on insideHPC.

|