|

by staff on (#1XE08)

Over at Cluster Monkey, Douglas Eadline writes that the "free lunch" performance boost of Moore's Law may indeed be back with the 1024-core Epiphany-V chip that will hit the market in the next few months. The post Is Free Lunch Back? Douglas Eadline Looks at the Epiphany-V Processor appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-04 08:45 |

|

by staff on (#1XDXJ)

Scientists at Brookhaven National Laboratory will play major roles in two of the 15 fully funded application development proposals recently selected by the DOE's Exascale Computing Project (ECP) in its first-round funding of $39.8 million. "The team at Brookhaven will develop algorithms, language environments, and application codes that will enable scientists to perform lattice quantum chromodynamics (QCD) calculations on next-generation supercomputers." The post Brookhaven Lab to Develop ECP Exascale Software appeared first on insideHPC.

|

|

by staff on (#1XAZ0)

Computer scientists at LLNL and Norwegian researchers are collaborating to apply high performance computing to the analysis of medical data to improve screening for cervical cancer. The team is developing a flexible, extendable model that incorporates new data such as other biomolecular markers, genetics and lifestyle factors to individualize risk assessment, according to Abdulla. "We want to identify the optimal interval for screening each patient." The post LLNL Collaboration to Improve Cancer Screening appeared first on insideHPC.

|

|

by Rich Brueckner on (#1XAVV)

Pete Beckman presented this talk at the Argonne Training Program on Extreme-Scale Computing. "Here is the Parallel Platform Paradox: The average time required to implement a moderate-sized application on a parallel computer architecture is equivalent to the half-life of the latest parallel supercomputer." The post Video: Introduction to Parallel Supercomputing appeared first on insideHPC.

|

|

by staff on (#1XAPF)

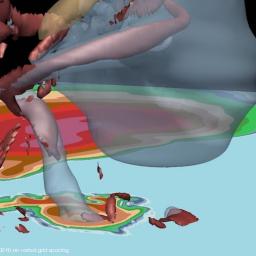

"We're trying to make high resolution simulations of super cell storms, or tornadoes," McGovern said. "What we get with the simulations are the fundamental variables of whatever our resolution is - we've been doing 100 meter x 100 meter cubes - there's no way you can get that kind of data without doing simulations. We're getting the fundamental variables like pressure, temperature and wind, and we're doing that for a lot of storms, some of which will generate tornadoes and some that won't. The idea is to do data mining and visualization to figure out what the difference is between the two." The post Supercomputing Tornadogenesis appeared first on insideHPC.

|

|

by staff on (#1XAJZ)

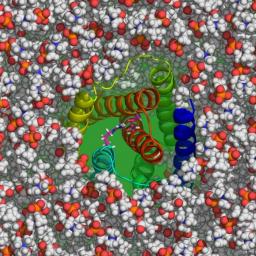

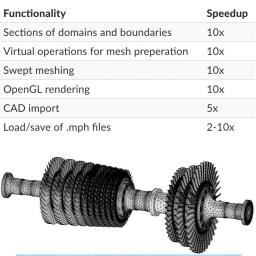

Today COMSOL announced updates to their software solutions for multiphysics modeling, simulation, app design and deployment. The latest update of the COMSOL software features major performance increases and the release of the Rotordynamics Module, which is now available as an add-on product to the Structural Mechanics Module. The post COMSOL Speeds Multiphysics Modeling & Simulation appeared first on insideHPC.

|

|

by staff on (#1XAF6)

Today General Atomics announced the next generation of Nirvana – a premier metadata, data placement and data management software solution for the most demanding workflows in Life Sciences, Scientific Research, Media & Entertainment and Energy Exploration. "Nirvana 5.0 reduces storage costs up to 75% by turning geographically dispersed, multiple vendor storage silos into a single global namespace that automatically moves infrequently-accessed data to lower-cost storage or to the cloud." The post General Atomics Releases Next-Gen Nirvana Data Management Software appeared first on insideHPC.

|

|

by staff on (#1X7AF)

"Our solutions ultimately make data readily available for users, applications and analytics, helping to facilitate faster results and better decisions," said Gary Lyng, senior director of marketing, Data Center Systems at Western Digital. "We are excited to be working with Panasas as the volume, velocity, variety and value of data generated by modern lab equipment along with varying application and workflow requirements make implementing the right solution all the more challenging - and we have the right solution." The post Panasas & Western Digital to Power Life Science Research with iRODS appeared first on insideHPC.

|

|

by staff on (#1X73F)

Today International Computer Concepts (ICC) announced the release of a new line of overclocked servers: ICC Alpha. These systems were designed specifically for high-frequency trading (HFT) applications, where sequential processing speed is critical.

|

|

by staff on (#1X715)

Today Russia's RSC Group announced a business cooperation agreement with M Computers to market and deploy RSC's HPC solutions in the Czech Republic. "We believe that partnership with M Computers will help RSC to offer its innovative supercomputing and data center solutions for European clients, which could recognize such advantages of our equipment as the ultimate computing and power density, energy-efficiency, compactness, reliability, ease to manage and maintain." The post RSC Group to Market HPC Solutions in Czech Republic appeared first on insideHPC.

|

|

by staff on (#1X6YW)

Today Bright Computing announced expanded collaboration with Huawei across EMEA. "Huawei places win-win collaboration at the heart of our business in Europe," said Jaco Pesschier, Senior Channel Sales Manager, Huawei West Europe Enterprise Business. "We're committed to building a partner ecosystem which enables end-users to make the most of new ICT to become leaders in their markets. As our relationship with Bright Computing grows we look forward to a productive and optimistic future working together." The post Bright Broadens Partnership with Huawei in EMEA appeared first on insideHPC.

|

|

by staff on (#1X6VX)

RENCI's Dell-powered supercomputer is working overtime to model the storm surge that Hurricane Matthew could bring to communities along the Eastern Seaboard. Named Hatteras, the 150-node M610 Dell cluster runs the ADCIRC storm surge model every six hours when a hurricane is active. "We are working on doing storm surge predictions the same way that meteorologists develop predictions for rain and wind speeds." The post Dell Powers RENCI Supercomputer Tracking Hurricane Matthew appeared first on insideHPC.

|

|

by Rich Brueckner on (#1X6KP)

"Science problems are becoming increasingly complex in all areas from physics and bioinformatics to engineering," said Siegfried Hoefinger, High Performance Computing Specialist at VSC. "Bigger is better, but inefficiency will always limit what you can achieve. The Allinea tools will enable us to quickly establish the root cause of bottlenecks and understand the markers for inefficient code. By doing so we're helping to prove the case for modernization, can start to eliminate inefficiencies and exploit latent capacity to its full effect." The post Allinea Tools Advance Research at VSC in Austria appeared first on insideHPC.

|

|

by Rich Brueckner on (#1X3TS)

Today ACM and IEEE Computer Society named Bill Gropp from NCSA as the recipient of the 2016 ACM/IEEE Computer Society Ken Kennedy Award for highly influential contributions to the programmability of high performance parallel and distributed computers. The award will be presented at SC16 in Salt Lake City. The post Bill Gropp to Receive Ken Kennedy Award appeared first on insideHPC.

|

|

by Rich Brueckner on (#1X3C1)

Robotics and Deep Learning applications were front and center at GTC Japan this week, where 2600 attendees lined up to hear the latest on GPU technologies. "The age of AI is here," said Jen-Hsun Huang, founder and CEO of NVIDIA. "GPU deep learning ignited this new wave of computing where software learns and machines reason. […] The post AI & Robotics Front and Center at GTC Japan appeared first on insideHPC.

|

|

by Rich Brueckner on (#1X367)

Over at the Parallella Blog, Andreas Olafsson from Adapteva writes that the company has reached an important milestone on its next-generation Epiphany-V chip. "Thanks to a generous grant from DARPA, we just taped out a 16nm chip with 1024 64-bit processor cores. To give a comparison, our 4.5B transistor chip is smaller than Apple's latest A10 chip and has 256 times as many processors. The chip offers an 80x processor density advantage over high performance chips from Intel and Nvidia." The post Parallella Tapes Out 1024-core Epiphany-V Chip appeared first on insideHPC.

|

|

by staff on (#1X32J)

Businesses could dramatically cut the time taken to bring new products and services to market with help from a new SGI supercomputer at EPCC, the UK's leading supercomputing center based at the University of Edinburgh. "This newly installed computing power - in tandem with EPCC's in-house expertise - means we are well placed to help businesses meet many of the computational challenges associated with developing new products and services," said George Graham, Commercial Manager of EPCC. The post SGI Powers New Cirrus Supercomputer at EPCC appeared first on insideHPC.

|

|

by staff on (#1X2X9)

Today the Barcelona Supercomputing Center presented a big data roadmap that it has coordinated on behalf of the European Commission. The presentation, part of the RETHINK Big Project, was given at the Big Data Congress, and BSC used it to highlight the need for Europe to carry out research on new hardware and software solutions for big data use. The post BSC Lays out European RETHINK Big Roadmap appeared first on insideHPC.

|

|

by staff on (#1X2ST)

"Today's emerging workloads like machine and deep learning, artificial intelligence, accelerated databases, and high performance data analytics require incredible speed through accelerated computing," said Sumit Gupta, Vice President, High Performance Computing and Data Analytics, IBM. "Delivering the capabilities of the new IBM POWER8 with NVIDIA NVLink-based system through the Nimbix cloud expands the horizons of HPC and brings a highly differentiated accelerated computing platform to a whole new set of users." The post Power8 Systems with NVLink Come to Nimbix HPC Cloud appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WZ2X)

Ozalp Babaoglu from the University of Bologna presented this Google Talk. "At exascale, failures and errors will be frequent, with many instances occurring daily. This fact places resilience squarely as another major roadblock to sustainability. In this talk, I will argue that large computer systems, including exascale HPC systems, will ultimately be operated based on predictive computational models obtained through data-science tools, and at that point, the intervention of humans will be limited to setting high-level goals and policies rather than performing 'nuts-and-bolts' operations." The post Video: Sustainable High-Performance Computing through Data Science appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WYZ1)

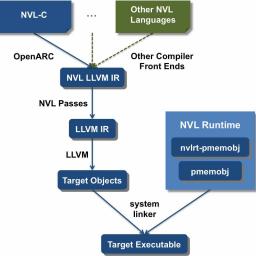

Researchers at the Future Technologies Group at Oak Ridge National Laboratory (ORNL) have developed a novel programming system that extends C with intuitive, language-level support for programming NVM as persistent, high-performance main memory; the prototype system is named NVL-C. The post ORNL Creates Programming System for NVM Main Memory Systems appeared first on insideHPC.

|

|

by staff on (#1WYX5)

Today NVIDIA announced APAC's first deployment of NVIDIA DGX-1 deep learning supercomputers at CSIRO in Australia. "There is a growing interest from research groups to adopt machine learning techniques to support their projects," said Angus Macoustra, executive manager for Scientific Computing at CSIRO. "CSIRO research projects are already using the DGX-1 systems, and in time, it is expected that machine learning will have applicability across all our areas of research and be used by hundreds of researchers." The post CSIRO Deploys NVIDIA DGX-1 Deep Learning Supercomputers Down Under appeared first on insideHPC.

|

|

by veronicahpc1 on (#1WYES)

Supercomputing developers and experts from around the globe will converge on Salt Lake City, Utah for the 2016 Intel® HPC Developer Conference on November 12-13 – just prior to SC16. Conference attendance is free; however, those interested in attending should register quickly, as Intel is expecting a big response, reflecting the broadening demand for HPC learning opportunities among technical developers. Read on to learn about the incredible presenter lineup this year. The post 2016 Intel HPC Developer Conference Addresses In-Demand Topics appeared first on insideHPC.

|

|

by staff on (#1WYP9)

This may indeed be the year of artificial intelligence, when the technology came into its own for mainstream businesses. "But will other companies understand if AI has value for them? Perhaps a better question is 'Why now?' This question centers on both the opportunity and why many companies are scared about missing out." The post insideHPC Readers: Weigh in on Why AI is Taking Off Now appeared first on insideHPC.

|

|

by staff on (#1WVB6)

Two University of Wyoming graduate students earned a trip to the SC16 conference in November by virtue of winning the poster contest at the recent Rocky Mountain Advanced Computing Consortium (RMACC) High Performance Computing Symposium. "I hope to receive good exposure to the most recent advancements in the field of high-performance computing," Kommera says. The post Winning Posters on GPU Programming Send UW Students to SC16 appeared first on insideHPC.

|

|

by staff on (#1WV6N)

The Ohio Supercomputer Center has joined the CaRC Consortium, an NSF-funded research coordination network. The post OSC Joins CaRC Research Coordination Network appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WV2Y)

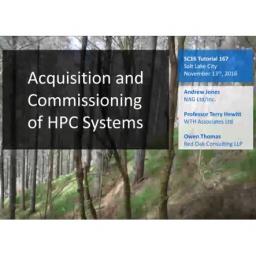

"This tutorial, part of the SC16 State of the Practice, will guide attendees through the process of purchasing and deploying an HPC system. It will cover the whole process from engaging with stakeholders in securing funding, requirements capture, market survey, specification of the tender/request for proposal documents, engaging with suppliers, evaluating proposals, and managing the installation. Attendees will learn how to specify what they want, yet enable the suppliers to provide innovative solutions beyond their specification both in technology and in the price; how to demonstrate to stakeholders that the solution selected is best value for money; and the common risks, pitfalls and mitigation strategies essential to achieve an on-time and on-quality installation process." The post Preview: SC16 Tutorial on How to Buy a Supercomputer appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WTZ3)

Today Mellanox announced the availability of a standard Linux kernel driver for the company's Open Ethernet Spectrum switch platforms. Developed within the large Linux community, the new driver enables standard Linux operating systems and off-the-shelf Linux-based applications to operate on the switch, including L2 and L3 switching. Open Ethernet provides data centers with the flexibility to choose the best hardware platform and the best software platform, resulting in optimized data center performance and higher return on investment. The post Mellanox Deploys Standard Linux Operating Systems over Ethernet Switches appeared first on insideHPC.

|

|

by MichaelS on (#1WTES)

From Megaflops to Gigaflops to Teraflops to Petaflops and soon to be Exaflops, the march in HPC is always on and moving ahead. This whitepaper details some of the technical challenges that will need to be addressed in the coming years in order to get to exascale computing. The post Exascale – A Race to the Future of HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WQXB)

Registration is now open for the Dell HPC Community event at SC16. The event takes place Nov. 12 at the Radisson Hotel in Salt Lake City. "The Dell HPC Community events feature keynote presentations by HPC experts and working group sessions to discuss best practices in the use of Dell HPC Systems." The post Registration Opens for Dell HPC Community Event at SC16 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WQTT)

"We are still in the first minutes of the first day of the Intelligence revolution. In this keynote, Dr. Joseph Sirosh will present five solutions (and their implementations) that the intelligent cloud delivers. Sirosh shares five cloud AI patterns that his team presented at the Summit. These five patterns are really about ways to bring data and learning together in cloud services, to infuse intelligence." The post Video: Azure – the Cloud Supercomputer for AI appeared first on insideHPC.

|

|

by staff on (#1WN8D)

"It's often a challenge to test the scalability of system software components before a large deployment, particularly if you need low level hardware access," said Dan Stanzione, Executive Director at TACC and a Co-PI on the Chameleon project. "Chameleon was designed for just these sort of cases – when your local test hardware is inadequate, and you are testing something that would be difficult to test in the commercial cloud – like replacing the available file system. Projects like Slash2 can use Chameleon to make tomorrow's cloud systems better than today's." The post Chameleon Testbed Blazes New Trails for Cloud HPC at TACC appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WN5Z)

Adrian Jackson from EPCC at the University of Edinburgh presented this tutorial to ARCHER users. "We have been working for a number of years on porting computational simulation applications to the KNC, with varying successes. We were keen to test this new processor with its promise of 3x serial performance compared to the KNC and 5x memory bandwidth over normal processors (using the high-bandwidth, MCDRAM, memory attached to the chip)." The post Video: Intel Xeon Phi (KNL) Processor Overview appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WJAY)

Sure, your code seems fast, but how do you know if you are leaving potential performance on the table? Recognized HPC experts Georg Hager and Gerhard Wellein will teach a tutorial on Node-Level Performance Engineering at SC16. The session will take place 8:30am-5:00pm on Sunday, Nov. 13 in Salt Lake City. The post SC16 Tutorial to Focus on Node-Level Performance Engineering appeared first on insideHPC.

|

|

by staff on (#1WJ82)

"Our high-performance computing solutions enable deep learning, engineering, and scientific fields to scale out their compute clusters to accelerate their most demanding workloads and achieve fastest time-to-results with maximum performance per watt, per square foot, and per dollar," said Charles Liang, President and CEO of Supermicro. "With our latest innovations incorporating the new NVIDIA P100 processors in performance- and density-optimized 1U and 4U architectures with NVLink, our customers can accelerate their applications and innovations to address the most complex real world problems." The post Supermicro Rolls Out New Servers with Tesla P100 GPUs appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WJ16)

In this video from the 2016 Argonne Training Program on Extreme-Scale Computing, Mark Miller from LLNL leads a panel discussion on Experiences in eXtreme Scale in HPC with FASTMATH team members. "The FASTMath SciDAC Institute is developing and deploying scalable mathematical algorithms and software tools for reliable simulation of complex physical phenomena and collaborating with U.S. Department of Energy (DOE) domain scientists to ensure the usefulness and applicability of our work. The focus of our work is strongly driven by the requirements of DOE application scientists who work extensively with mesh-based, continuum-level models or particle-based techniques." The post Video: Experiences in eXtreme Scale HPC appeared first on insideHPC.

|

|

by staff on (#1WHX5)

Today XSEDE announced it has awarded 30,000 core-hours of supercomputing time on the Bridges supercomputer to the North Carolina School of Science and Mathematics (NCSSM). Funded with a $9.65M NSF grant, Bridges contains a large number of research-grade software packages for science and engineering, including codes for computational chemistry, computational biology, and computational physics, along with specialty codes such as computational fluid dynamics. "NCSSM research students often pursue interdisciplinary research projects that involve computational and/or laboratory work in chemistry, physics, and other fields," said Jon Bennett, instructor of physics and faculty mentor for physics research. "The availability of supercomputer computational resources would greatly expand the range and depth of projects that are possible for these students." The post Bridges Supercomputer to Power Research at North Carolina School of Science and Mathematics appeared first on insideHPC.
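
For a sense of scale, a core-hour is one core used for one hour, so the wall-clock time an allocation covers shrinks as a job uses more cores. The sketch below is only an illustration of that arithmetic: the 30,000 figure comes from the announcement, while the job sizes and the function name `wall_clock_hours` are assumptions made up for the example, not anything XSEDE or NCSSM has described.

```python
def wall_clock_hours(core_hours: float, cores: int) -> float:
    """Return how many wall-clock hours an allocation lasts
    when `cores` cores are kept busy for the whole run."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return core_hours / cores


ALLOCATION_CORE_HOURS = 30_000  # figure quoted in the announcement

# Hypothetical job sizes, chosen only for illustration.
for cores in (1, 16, 256):
    hours = wall_clock_hours(ALLOCATION_CORE_HOURS, cores)
    print(f"{cores:>4} cores -> {hours:,.1f} wall-clock hours")
```

Real allocations are often charged per node or with queue-specific factors, so this simple division should be read as a rough upper bound on usable time.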

|

|

by staff on (#1WHKQ)

Today Amazon Web Services announced the availability of P2 instances, a new GPU instance type for Amazon Elastic Compute Cloud designed for compute-intensive applications that require massive parallel floating point performance, including artificial intelligence, computational fluid dynamics, computational finance, seismic analysis, molecular modeling, genomics, and rendering. With up to 16 NVIDIA Tesla K80 GPUs, P2 instances are the most powerful GPU instances available in the cloud. The post GPUs Power New AWS P2 Instances for Science & Engineering in the Cloud appeared first on insideHPC.

|

|

by staff on (#1WE7W)

Men still outnumber women in STEM training and employment, and engineering leaders are working to bring awareness to that diversity gap and the opportunities it presents. SC16 is calling upon all organizations to look at the diversity landscape and publish that data. "Of course, we are supporting programs that empower more girls to study and pursue STEM degrees and careers. Getting more girls through the educational and training pipeline is a great first step, but it's just the beginning." The post Supercomputing Experts Lend Expertise to Address STEM Gender Gap appeared first on insideHPC.

|

|

by staff on (#1WE61)

A huge barrier in converting cellulose polymers to biofuel lies in removing other biomass polymers that subvert this chemical process. To overcome this hurdle, large-scale computational simulations are picking apart lignin, one of those inhibiting polymers, and its interactions with cellulose and other plant components. The results point toward ways to optimize biofuel production and […] The post Supercomputing Plant Polymers for Biofuels appeared first on insideHPC.

|

|

by staff on (#1WDXN)

Today Penguin Computing announced Scyld Cloud Workstation 3.0, a 3D-accelerated remote desktop solution which provides true multi-user remote desktop collaboration for cloud-based Linux and Windows desktops. "Unlike other remote desktop solutions, collaboration via Scyld Cloud Workstation is more like sitting in-person with other engineers because a user can hand off control of their desktop to simplify collaboration on a project," said Victor Gregorio, Vice President and General Manager, Cloud Services, Penguin Computing. "Scyld Cloud Workstation brings collaboration to life, providing a much more thorough and proficient interaction among researchers and engineers working together on a remote desktop. Ultimately, this allows customers a more efficient means to leverage cloud-based desktop solutions." The post Penguin Computing Adds Remote Desktop Collaboration to Scyld Cloud Workstation appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WDXQ)

SC16 will continue its HPC Matters Plenary session series this year with a panel discussion on HPC and Precision Medicine. The event will take place at 5:30 pm on Monday, Nov 14 just prior to the exhibits opening gala. "The success of all of these research programs hinges on harnessing the power of HPC to analyze volumes of complex genomics and other biological datasets that simply can't be processed by humans alone. The challenge for our community will be to develop the computing tools and services needed to transform how we think about disease and bring us closer to the precision medicine future." The post SC16 Plenary Session to Focus on HPC and Precision Medicine appeared first on insideHPC.

|

|

by MichaelS on (#1WDP5)

With the introduction of the Intel Scalable System Framework, the Intel Xeon Phi processor can speed up Finite Element Analysis significantly. Using highly tuned math libraries such as the Intel Math Kernel Library (Intel MKL), FEA applications can execute math routines in parallel on the Intel Xeon Phi processor. The post Accelerating Finite Element Analysis with Intel Xeon Phi appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WE3Y)

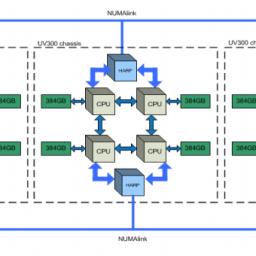

Nikos Trikoupis from the City University of New York gave this talk at the HPC User Forum in Austin. "We focus on measuring the aggregate throughput delivered by 12 Intel SSD DC P3700 for NVMe cards installed on the SGI UV 300 scale-up system in the CUNY High Performance Computing Center. We establish a performance baseline for a single SSD. The 12 SSDs are assembled into a single RAID-0 volume using Linux Software RAID and the XVM Volume Manager. The aggregate read and write throughput is measured against different configurations that include the XFS and the GPFS file systems." The post Video: Analysis of SSDs on SGI UV 300 appeared first on insideHPC.
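
The comparison the talk describes, a single-SSD baseline against the aggregate throughput of a striped volume, is commonly summarized as a scaling efficiency: measured aggregate divided by the ideal of N times the baseline. The sketch below shows only that calculation. The device count of 12 comes from the abstract; the throughput numbers and the function name `scaling_efficiency` are hypothetical placeholders, not results from the talk.

```python
def scaling_efficiency(aggregate: float, single: float, n_devices: int) -> float:
    """Fraction of ideal linear scaling achieved by a striped (RAID-0) volume.

    `aggregate` and `single` must use the same unit (e.g. GB/s).
    """
    if single <= 0 or n_devices <= 0:
        raise ValueError("single and n_devices must be positive")
    ideal = single * n_devices  # perfect striping would scale linearly
    return aggregate / ideal


N_SSDS = 12  # number of SSDs in the RAID-0 volume, from the abstract

# Hypothetical measurements in GB/s, for illustration only.
single_ssd_read = 2.5
raid0_read = 24.0

eff = scaling_efficiency(raid0_read, single_ssd_read, N_SSDS)
print(f"RAID-0 read scaling efficiency: {eff:.0%}")
```

An efficiency well below 100% would point at a shared bottleneck such as the file system, the volume manager, or the interconnect rather than the devices themselves.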

|

|

by Rich Brueckner on (#1WAG4)

"The POWER8 with NVIDIA NVLink processor enables incredible speed of data transfer between CPUs and GPUs ideal for emerging workloads like AI, machine learning and advanced analytics," said Rick Newman, Director of OpenPOWER Strategy & Market Development Europe. "The open and collaborative spirit of innovation within the OpenPOWER Foundation enables companies like E4 to leverage new technology and build cutting edge solutions to help clients grappling with the massive amounts of data in today's technology environment." The post E4 Computer Engineering Rolls Out GPU-accelerated OpenPOWER server appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WAEP)

Today Nvidia announced the general availability of the CUDA 8 toolkit for GPU developers. "A crucial goal for CUDA 8 is to provide support for the powerful new Pascal architecture, the first incarnation of which was launched at GTC 2016: Tesla P100," said Nvidia's Mark Harris in a blog post. "One of NVIDIA's goals is to support CUDA across the entire NVIDIA platform, so CUDA 8 supports all new Pascal GPUs, including Tesla P100, P40, and P4, as well as NVIDIA Titan X, and Pascal-based GeForce, Quadro, and DrivePX GPUs." The post Nvidia Releases Cuda 8 appeared first on insideHPC.

|

|

by staff on (#1WA9V)

Today Allinea Software announced availability of its new software release, version 6.1, which offers full support for programming parallel code on the Pascal GPU architecture and CUDA 8 from Nvidia. "The addition of Allinea tools into the mix is an exciting one, enabling teams to accurately measure GPU utilization, employ smart optimization techniques and quickly develop new CUDA 8 code that is bug and bottleneck free," said Mark O'Connor, VP of Product Management at Allinea. The post Allinea Adds CUDA 8 Support for GPU Developers appeared first on insideHPC.

|

|

by Rich Brueckner on (#1WA41)

Today at GTC Europe, Nvidia unveiled Xavier, an all-new SoC based on the company's next-gen Volta GPU, which will be the processor in future self-driving cars. According to Huang, the ARM-based Xavier will feature unprecedented performance and energy efficiency, while supporting deep-learning features important to the automotive market. A single Xavier-based AI car supercomputer will be able to replace today's fully configured DRIVE PX 2 with two Parker SoCs and two Pascal GPUs. The post Video: Nvidia Unveils ARM-Powered SoC with Volta GPU appeared first on insideHPC.

|

|

by staff on (#1W9YF)

"Our customers are looking for a highly integrated server adapter that solves their pressing need for network performance, efficiency and security," said Gilad Shainer, vice president of marketing, Mellanox Technologies. "The Innova adapter provides IPsec offload to deliver complete end-to-end security for traffic moving within the data center. Combined with the intelligent network offload and acceleration engines, Innova IPsec is the ideal solution for cloud, telecommunication, Web 2.0, high-performance compute and storage infrastructures." The post Mellanox Roll Out New Innova IPsec 10/40G Ethernet Adapters appeared first on insideHPC.

|

|

by Rich Brueckner on (#1W9WC)

In this video from LUG 2016 in Australia, Chakravarthy Nagarajan from Intel presents: An Optimized Entry Level Lustre Solution in a Small Form Factor. "Our goal was to provide an entry level Lustre storage solution in a high density form factor, with a low cost, small footprint, all integrated with Intel Enterprise Edition for Lustre* software." The post Video: An Optimized Entry Level Lustre Solution in a Small Form Factor appeared first on insideHPC.

|