
by Rich Brueckner on (#1TZDR)

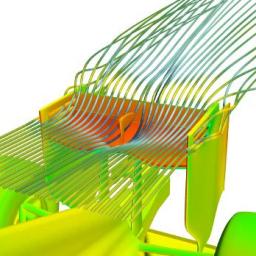

"The integration of FLOW-3D with CAESES creates a powerful design environment for our users. FLOW-3D’s inherent ease of modifying geometry is even more potent when combined with an optimization tool like CAESES, which specializes in optimizing for geometry as well as other parametric studies," said Flow Science Vice President of Sales and Business Development, Amir Isfahani.The post Flow Science Partners with FRIENDSHIP SYSTEMS for Optimizing Simulation appeared first on insideHPC.


Inside HPC & AI News | High-Performance Computing & Artificial Intelligence


| Field | Value |
|---|---|
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-04 10:30 |


by Rich Brueckner on (#1TZDT)

“DDN has a long history of technological innovation, a great team and a phenomenal market opportunity,” said Triendl. “I am excited to help realize what I believe is tremendous potential to extend our unmatched delivery of performance and capacity at scale – far beyond what most can even imagine.” The post DDN Names Robert Triendl SVP Global Sales, Marketing and Field Services appeared first on insideHPC.


by staff on (#1TW2R)

Loyola University Maryland has been awarded a $280,120 grant from the National Science Foundation (NSF) to build an HPC cluster that will significantly expand research opportunities for faculty and students across disciplines. The post Loyola University Maryland to Build HPC Cluster with NSF Grant appeared first on insideHPC.


by staff on (#1TVYF)

“Companies are awash in data, but struggle to take advantage of it to build better predictive models and gain deeper insights,” says David Rich, MATLAB marketing director, MathWorks. “With R2016b, we’ve lowered the bar to allow domain experts to work with more data, more easily. This leads to improved system design, performance, and reliability.” The post MathWorks Release 2016b Makes it Easier to Work with Big Data appeared first on insideHPC.


by staff on (#1TVTX)

“The drive towards Exascale computing requires cooling the next generation of extremely hot CPUs, while staying within a manageable power envelope,” said Bob Bolz, HPC and Data Center Business development at Aquila. “Liquid cooling holds the key. Aquarius is designed from the ground up to meet reliability and the feature-specific demands of high performance and high density computing. Our design goal was to reduce the cost of cooling server resources to well under 5% of overall data center usage.” The post Aquila Launches Liquid Cooled OCP Server Platform appeared first on insideHPC.


by Rich Brueckner on (#1TVPH)

"AMD has been away from the HPC space for a while, but now they are coming back in a big way with an open software approach to GPU computing. The Radeon Open Compute Platform (ROCm) was born from the Boltzmann Initiative announced last year at SC15. Now available on GitHub, the ROCm Platform brings a rich foundation to advanced computing by better integrating the CPU and GPU to solve real-world problems." The post Slidecast: For AMD, It’s Time to ROCm! appeared first on insideHPC.


by Douglas Eadline on (#1TVM8)

The big data analytics market has seen rapid growth in recent years. This trend includes the increased use of machine learning technologies, particularly deep learning. Indeed, machine learning workloads have been dramatically accelerated through the use of GPUs. The issues facing the HPC market are similar to those facing the analytics market: efficient use of the underlying hardware. A position paper from the third annual Big Data and Extreme Computing conference (2015) illustrates the power of co-design in the analytics market. The post Co-design for Data Analytics And Machine Learning appeared first on insideHPC.


by MichaelS on (#1TVEC)

Vectorization and threading are critical to getting the most out of innovative hardware such as the Intel Xeon Phi processor. Using tools early in the design and development process to identify where vectorization can be applied or improved will increase the performance of the overall application. Modern tools can determine what might be blocking compiler vectorization and estimate the potential gain from the work involved. The post Better Software For HPC through Code Modernization appeared first on insideHPC.


by staff on (#1TRQ8)

ESI Group has signed an agreement with Huawei to collaborate on high performance computing and cloud computing solutions for industrial manufacturing customers in China and worldwide. "The ongoing digital transformation of industrial manufacturing demands enterprise-level IT solutions that are more intelligent, efficient, and convenient, especially in the HPC domain," said Zheng Yelai, President, Huawei IT Product Line. The post Huawei and ESI to Collaborate on HPC for Manufacturing appeared first on insideHPC.


by Rich Brueckner on (#1TR1D)

Over at the Nvidia Blog, Jamie Beckett writes that the company is expanding its Deep Learning Institute with Microsoft and Coursera. The institute provides training to help people apply deep learning to solve challenging problems. The post Nvidia Expands Deep Learning Institute appeared first on insideHPC.


by Rich Brueckner on (#1TQXE)

Gary Paek from Intel presented this talk at the HPC User Forum in Austin. "Traditional high performance computing is hitting a performance wall. With data volumes exploding and workloads becoming increasingly complex, the need for a breakthrough in HPC performance is clear. Intel Scalable System Framework provides that breakthrough. Designed to work for small clusters to the world’s largest supercomputers, Intel SSF provides scalability and balance for both compute- and data-intensive applications, as well as machine learning and visualization. The design moves everything closer to the processor to improve bandwidth, reduce latency and allow you to spend more time processing and less time waiting." The post Video: Intel Scalable System Framework appeared first on insideHPC.


by staff on (#1TQRS)

PRACE has announced the winners of its 13th Call for Proposals for PRACE Project Access. Selected proposals will receive allocations on the following PRACE HPC resources: Marconi and MareNostrum. The post PRACE Awards Time on Marconi Supercomputer appeared first on insideHPC.


by Rich Brueckner on (#1TQJX)

“We’re currently installing the most powerful supercomputer in the history of the center, but it’s just a roomful of hardware without fast and easy access for our clients,” said David Hudak, interim executive director of OSC. “OnDemand 3.0 provides them with seamless, flexible access to all our computer and storage services.” The post OnDemand 3.0 Portal to Power Owens Supercomputer at OSC appeared first on insideHPC.


by Rich Brueckner on (#1TM0Y)

In this Intel Chip Chat podcast, Dr. Julie Krugler Hollek, co-organizer of PyLadies San Francisco and Data Scientist at Twitter, joins Allyson Klein to discuss efforts to democratize participation in open source communities and the future of data science. "PyLadies helps people who identify as women become participants in open source Python projects like The SciPy Stack, a specification that provides access to machine learning and data visualization tools." The post Podcast: How PyLadies are Increasing Diversity in Coding and Data Science appeared first on insideHPC.


by staff on (#1TKYY)

“SGI and Bright Computing have been working together for the last year to provide our joint customers with enterprise level clustered infrastructure management software for production supercomputing,” said Gabriel Broner, vice president and general manager of HPC, SGI. “By partnering with Bright Computing, our customers are able to select the cluster management tool that best suits their needs.” The post Bright Computing Announces Reseller Agreement with SGI appeared first on insideHPC.


by staff on (#1TKVG)

Today the Energy Department’s Advanced Manufacturing Office announced up to $3 million in available funding for manufacturers to use high-performance computing resources at the Department's national laboratories to tackle major manufacturing challenges. The High Performance Computing for Manufacturing (HPC4Mfg) program enables innovation in U.S. manufacturing through the adoption of high performance computing (HPC) to advance applied science and technology in manufacturing, with an aim of increasing energy efficiency, advancing clean energy technology, and reducing energy’s impact on the environment. The post HPC4mfg Seeks New Proposals to Advance Energy Technologies appeared first on insideHPC.


by staff on (#1TKSJ)

"The growing number of use cases that object storage can satisfy represents a huge opportunity for DDN – especially as cases like collaboration and active archive for large and 'forever' data sets are concentrated in DDN customer sites and well-established DDN markets," said Molly Rector, CMO, executive vice president product management and worldwide marketing at DDN. "WOS' differentiated benefits give it a strong competitive advantage for current and emerging use cases, and with multiple appliance and software-only options customers have complete architectural flexibility and choice." The post DDN Appliance Speeds WOS Object Storage appeared first on insideHPC.


by Rich Brueckner on (#1TKP9)

Nvidia's GPU platforms have been widely used on the training side of the Deep Learning equation for some time now. Today the company announced a new Pascal-based GPU tailor-made for the inferencing side of Deep Learning workloads. "With the Tesla P100 and now Tesla P4 and P40, NVIDIA offers the only end-to-end deep learning platform for the data center, unlocking the enormous power of AI for a broad range of industries," said Ian Buck, general manager of accelerated computing at NVIDIA. The post Nvidia Unveils World’s First GPU Design for Inferencing appeared first on insideHPC.


by staff on (#1TKCT)

The prevalence of cloud computing has changed the HPC landscape, necessitating HPC management tools that can simplify complex environments in order to optimize flexibility and speed. Altair’s new solution, PBS Cloud Manager, makes it easy to build and manage HPC application stacks. The post The Future of HPC Application Management in a Post Cloud World appeared first on insideHPC.


by staff on (#1TG8A)

“More than just building bigger and faster computers, high-performance computing is about how to build the algorithms and applications that run on these computers,” said School of Computational Science and Engineering (CSE) Associate Professor Edmond Chow. “We've brought together the top people in the U.S. with expertise in asynchronous techniques as well as experience needed to develop, test, and deploy this research in scientific and engineering applications.” The post DOE Funds Asynchronous Supercomputing Research at Georgia Tech appeared first on insideHPC.


by Rich Brueckner on (#1TG44)

Andrew Jones from NAG presented this talk at the HPC User Forum in Austin. "This talk will discuss why it is important to measure High Performance Computing, and how to do so. The talk covers measuring performance, both technical (e.g., benchmarks) and non-technical (e.g., utilization); measuring the cost of HPC, from the simple beginnings to the complexity of Total Cost of Ownership (TCO) and beyond; and finally, the daunting world of measuring value, including the dreaded Return on Investment (ROI) and other metrics. The talk is based on NAG HPC consulting experiences with a range of industry HPC users and others. This is not a sales talk, nor a highly technical talk. It should be readily understood by anyone involved in using or managing HPC technology." The post Measuring HPC: Performance, Cost, & Value appeared first on insideHPC.


by Rich Brueckner on (#1TG0V)

BSC researcher Xevi Roca will receive one of the European Union’s most prestigious research grants for a project to create new simulation methods that respond to the aviation industry’s most pressing challenges. Roca, who has been working on geometry for aeronautical simulation since 2004, is proposing to integrate time as a dimension into the geometries of simulations. The aim is to improve the efficiency, accuracy and robustness of the aerodynamic performance simulations carried out on supercomputers such as BSC’s MareNostrum. The post EU Funds BSC Researcher to Develop New Aircraft Simulation Tools appeared first on insideHPC.


by MichaelS on (#1TFXV)

Humans are very good at visual pattern recognition, especially when it comes to faces and graphic symbols: identifying a specific person or associating a symbol with its meaning. It is in these kinds of scenarios that deep learning systems excel. Learning to identify each new person or symbol is achieved more efficiently through training than by reprogramming a conventional computer or explicitly updating database entries. The post Examples of Deep Learning Industrialization appeared first on insideHPC.


by Rich Brueckner on (#1TFW8)

“Our collaborative role in these exascale applications projects stems from our laboratory’s long-term strategy in co-design and our appreciation of the vital role of high-performance computing to address national security challenges,” said John Sarrao, associate director for Theory, Simulation and Computation at Los Alamos National Laboratory. “The opportunity to take on these scientific explorations will be especially rewarding because of the strategic partnerships with our sister laboratories.” The post Funding Boosts Exascale Research at LANL appeared first on insideHPC.


by Rich Brueckner on (#1TFRF)

In this podcast, the Radio Free HPC team discusses the recent news that Intel has sold its controlling stake in McAfee and that NSF has funded the next generation of XSEDE. The post Radio Free Looks at Exascale Application Challenges in the Wake of XSEDE 2.0 Funding appeared first on insideHPC.


by staff on (#1TCYM)

A consortium of European researchers and technology companies recently completed the EU-funded SAVE project, aimed at simplifying the execution of data-intensive applications on complex hardware architectures. Funded by the European Commission’s Seventh Framework Programme (FP7), the project was launched in 2013 under the name ‘Self-Adaptive Virtualization-Aware High-Performance/Low-Energy Heterogeneous System Architectures’ (SAVE). The project, which was completed at the start of this month, has led to innovations in hardware, software and operating system (OS) components. The post European SAVE Project Streamlines Data Intensive Computing appeared first on insideHPC.


by Rich Brueckner on (#1TCPH)

Leonardo Flores from the European Commission presented this talk at the HPC User Forum. "The Cloud Initiative will make it easier for researchers, businesses and public services to fully exploit the benefits of Big Data by making it possible to move, share and re-use data seamlessly across global markets and borders, and among institutions and research disciplines. Making research data openly available can help boost Europe's competitiveness, especially for start-ups, SMEs and companies who can use data as a basis for R&D and innovation, and can even spur new industries." The post EU HPC Strategy and the European Cloud Initiative appeared first on insideHPC.


by Rich Brueckner on (#1T9WE)

Yutaka Ishikawa from Riken AICS presented this talk at the HPC User Forum. "Slated for delivery sometime around 2022, the ARM-based Post-K Computer has a performance target of being 100 times faster than the original K computer within a power envelope that will only be 3-4 times that of its predecessor. RIKEN AICS has been appointed as the main organization for leading the development of the Post-K." The post Video: Japan’s Post K Computer appeared first on insideHPC.


by Rich Brueckner on (#1T9T1)

Coming to SC16? Intel is hosting a one-day Lustre training session on Friday, November 18th, 2016, at the Sheraton in Salt Lake City. "You can expect the top Lustre experts to spend the day with you on the training topics that you care about." The post Lustre Training Day Coming to SC16 in Salt Lake City appeared first on insideHPC.


by staff on (#1T691)

Supermicro’s density-optimized 4U SuperServer 4028GR-TR(T)2 supports up to 10 PCI-E Tesla P100 accelerators for up to 210 TFLOPS FP16 peak performance with GPUDirect RDMA support. Supermicro’s innovative, GPU-optimized single-root-complex PCI-E design is proven to dramatically improve GPU peer-to-peer communication efficiency over QPI and PCI-E links, with up to 21% higher QPI throughput and 60% lower latency compared to previous-generation products. These 4U SuperServers support dual Intel Xeon processor E5-2600 v4/v3 product families, up to 3TB DDR4-2400MHz memory, optional dual onboard 10GBase-T ports, and redundant Titanium Level (96%) digital power supplies. The post Supermicro Shipping Servers with NVIDIA Tesla P100 GPUs appeared first on insideHPC.


by Rich Brueckner on (#1T65R)

Today Juniper Networks announced it has been selected by the National Center for Atmospheric Research (NCAR) to provide the networking infrastructure for a new supercomputer that will be used by researchers to predict climate patterns and assess the effects of global warming. The post Juniper Networks Powers Climate Research at NCAR appeared first on insideHPC.


by Rich Brueckner on (#1T644)

"Nimbis was founded in 2008 by HPC industry veterans Robert Graybill and Brian Schott to act as the first nationwide brokerage clearinghouse for a broad spectrum of integrated cloud-based HPC platforms and applications. Our fully integrated online Technical Computing Marketplace comprises several stores hosting modeling and simulation applications on HPC platforms in the cloud." The post Video: HPC in the Cloud appeared first on insideHPC.


by Rich Brueckner on (#1T61W)

"With the Yottabyte Research Cloud, researchers will be able to ask more questions, faster, of the ever-expanding and massive sets of data collected for their work," said Yottabyte CEO Paul Hodges. "We are very pleased to be a part of the diverse and challenging research environment at U-M. This partnership is a great opportunity to develop and refine computing tools that will increase the productivity of U-M's world class researchers." The post Yottabyte Powers Data-Intensive Research at University of Michigan appeared first on insideHPC.


by staff on (#1T3D2)

Today One Stop Systems (OSS) announced that its High Density Compute Accelerator (HDCA) and its Express Box 3600 (EB3600) are now available for purchase with the NVIDIA Tesla P100 for PCIe GPU. These high-density platforms deliver teraflop performance with greatly reduced cost and space requirements. The HDCA supports up to 16 Tesla P100s and the EB3600 supports up to 9 Tesla P100s. The Tesla P100 provides 4.7 TeraFLOPS of double-precision performance, 9.3 TeraFLOPS of single-precision performance and 18.7 TeraFLOPS of half-precision performance with NVIDIA GPU BOOST technology. The post One Stop Systems Shipping Platforms with NVIDIA Tesla P100 for PCIe appeared first on insideHPC.


by staff on (#1T2SX)

Today Fujitsu announced that the company has received an order for an experiment-analysis system from Kamioka Observatory, part of the Institute for Cosmic Ray Research (ICRR) at the University of Tokyo. The system is destined for Kamioka Observatory's Super-Kamiokande facility, which is helping to shed light on the workings of the universe through the observation of neutrinos. The system is scheduled to become operational in March 2017. The post Fujitsu Cluster to Power Super-Kamiokande Neutrino Experiments appeared first on insideHPC.


by MichaelS on (#1T2N6)

"An environment that assists in deep learning usually consists of algorithms that can draw conclusions from data at very high speeds. Processors such as the Intel Xeon Phi Processor that contain a significant number of processing cores and operate in a SIMD mode are critical to these new environments. With the Intel Xeon Phi processor, new insights can be discovered from either existing data or new data sources." The post Deep Learning with the Intel Xeon Phi Processor appeared first on insideHPC.


by Rich Brueckner on (#1T2J3)

Today Argonne announced that the Lab is leading a pair of newly funded application projects for the Exascale Computing Project (ECP). The announcement comes on the heels of news that ECP has selected 15 application development proposals for full funding and seven proposals for seed funding, representing teams from 45 research and academic organizations. The post Argonne to Develop Applications for ECP Exascale Computing Project appeared first on insideHPC.


by Rich Brueckner on (#1T2FW)

Ed Turkel and Percy Tzelnic from Dell Technologies presented this pair of talks at the HPC User Forum in Austin. "This week, Dell Technologies announced completion of the acquisition of EMC Corporation, creating a unique family of businesses that provides the essential infrastructure for organizations to build their digital future, transform IT and protect their most important asset, information. This combination creates a $74 billion market leader with an expansive technology portfolio." The post Dell & EMC in HPC – The Journey so far and the Road Ahead appeared first on insideHPC.


by Rich Brueckner on (#1T2BB)

“The University’s researchers are making landmark discoveries in fields spanning human heritable disease, cancer, agriculture and biofuels manufacture – and they depend on our IT team to provide them with the fastest, most efficient data storage and compute systems to support their data-heavy work,” said Professor David Abramson, University of Queensland Research Computing Center director. “Our IBM, SGI (DMF) and DDN-based data fabric allows us to deliver ultra-fast multi-site data access without requiring any extra intervention from researchers and helps us to ensure our scientists can focus their time on potentially life-saving discoveries.” The post DDN Powers High Performance Data Storage Fabric at University of Queensland appeared first on insideHPC.


by staff on (#1T27E)

Today IBM unveiled a series of new servers designed to help propel cognitive workloads and to drive greater data center efficiency. Featuring a new chip, the Linux-based lineup incorporates innovations from the OpenPOWER community that deliver higher levels of performance and greater computing efficiency than is available on any x86-based server. "Collaboratively developed with some of the world’s leading technology companies, the new Power Systems are uniquely designed to propel artificial intelligence, deep learning, high performance data analytics and other compute-heavy workloads, which can help businesses and cloud service providers save money on data center costs." The post New OpenPOWER Servers Accelerate Deep Learning with NVLink appeared first on insideHPC.


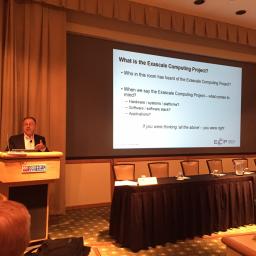

by Rich Brueckner on (#1SZSR)

Paul Messina presented this talk at the HPC User Forum in Austin. "The Exascale Computing Project (ECP) is a collaborative effort of the Office of Science (DOE-SC) and the National Nuclear Security Administration (NNSA). As part of President Obama’s National Strategic Computing Initiative, ECP was established to develop a new class of high-performance computing systems a thousand times more powerful than today’s petaflop machines." The post Video: The ECP Exascale Computing Project appeared first on insideHPC.


by staff on (#1SZE1)

Today Dell Technologies announced completion of the acquisition of EMC Corporation, creating a unique family of businesses that provides the essential infrastructure for organizations to build their digital future, transform IT and protect their most important asset, information. This combination creates a $74 billion market leader with an expansive technology portfolio that solves complex problems for customers in the industry’s fast-growing areas of hybrid cloud, software-defined data center, converged infrastructure, platform-as-a-service, data analytics, mobility and cybersecurity. The post It’s “Day 1” for Dell Technologies with New Branding appeared first on insideHPC.


by Douglas Eadline on (#1SZA2)

Achieving better scalability and performance at Exascale will require full data reach. Without this capability, onload architectures force all data to move to the CPU before allowing any analysis. The ability to analyze data everywhere means that every active component in the cluster will contribute to the computing capabilities and boost performance. In effect, the interconnect will become its own “CPU” and provide in-network computing capabilities. The post Network Co-design as a Gateway to Exascale appeared first on insideHPC.


by staff on (#1SZ8A)

Today Lawrence Berkeley National Laboratory announced that LBNL scientists will lead or play key roles in developing 11 critical research applications for next-generation supercomputers as part of DOE's Exascale Computing Project (ECP). The post Berkeley Lab to Develop Key Applications for ECP Exascale Computing Project appeared first on insideHPC.


by Rich Brueckner on (#1SZ4V)

Earl Joseph presented this talk at the HPC User Forum in Austin. "HPC is still expected to be a strong growth market going forward. IDC is forecasting 7.7 percent growth from 2015 to 2019. We're projecting the 2019 HPC market will exceed $15 billion." The post Video: IDC HPC Market Update appeared first on insideHPC.


by Rich Brueckner on (#1SYHZ)

“Aligning ourselves with an organization like Microsoft further supports our mission of enabling companies to manage their application workloads whether on-premise or in a hybrid or cloud context like Microsoft Azure,” said Gary Tyreman, CEO, Univa. “Univa is working hard to bridge the gap from traditional IT environments to the new hybrid world by helping enterprises reduce complexity and run workloads more efficiently in the cloud.” The post Univa Joins up with Microsoft Enterprise Cloud Alliance appeared first on insideHPC.


by staff on (#1SYCY)

“These application development awards are a major first step toward achieving mission critical application readiness on the path to exascale,” said ECP director Paul Messina. “A key element of the ECP’s mission is to deliver breakthrough HPC modeling and simulation solutions that confidently deliver insight and predict answers to the most critical U.S. problems and challenges in scientific discovery, energy assurance, economic competitiveness, and national security,” Messina said. “Application readiness is a strategic aspect of our project and foundational to the development of holistic, capable exascale computing environments.” The post Exascale Computing Project (ECP) Awards $39.8 million for Application Development appeared first on insideHPC.


by Rich Brueckner on (#1SWEH)

Hailing from Norway, big-memory appliance maker Numascale has been a fixture at the ISC conference since the company’s formation in 2008. At ISC 2016, Numascale was noticeably absent from the show and the word on the street was that the company was retooling their NumaConnect™ technology around NVMe. To learn more, we caught up with Einar Rustad, Numascale’s CTO. The post Interview: Numascale to Partner with OEMs on Big Memory Server Technology appeared first on insideHPC.


by staff on (#1SW3Z)

Submissions are now open for the ISC 2017 conference Research Paper Sessions, which aim to provide first-class opportunities for engineers and scientists in academia, industry and government to present research that will shape the future of high performance computing. Submissions will be accepted through December 2, 2016. The post Call for Research Papers: ISC 2017 appeared first on insideHPC.


by Rich Brueckner on (#1SW08)

Today Movidius announced that the company is being acquired by Intel. Movidius, a deep learning startup, has a mission to give machines the power of sight. The post Intel Acquires Movidius to Bolster AI appeared first on insideHPC.
